I attended the “Holographic Academy” during Microsoft’s Build conference in San Francisco. It was aimed at developers, and we got hands-on experience of coding a simple HoloLens app and viewing the results. We were forbidden from taking pictures, so you will have to make do with my words; this also means I do not have to show myself wearing a bulky headset and staring at things you cannot see.

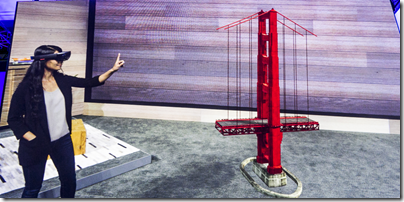

First, a word about HoloLens itself. The gadget is a headset that augments the real world with a 3D “projected” image. It is not really a hologram (otherwise everyone in the room would see it); rather, it is a virtual hologram created by combining what you see with digital images.

The effect is uncanny, since the image appears to stay fixed in one place. You can walk around it and see it from different angles, close up or far away, just as you could with a real object.

That said, there were a couple of issues with the experience. First, if you got too close to a projected image, it disappeared; from memory, the minimum distance was about 18 inches. Second, the viewport in which you see the augmented reality was fairly small, and you could easily see around it. This undermines the illusion, and it sometimes made it a struggle to see as much of your hologram as you wanted.

I asked about both issues and got essentially the same response: “no comment”. This is prototype hardware, so anything could change. However, according to another journalist who attended a hands-on demo in January, the viewport has become smaller since then, suggesting that Microsoft is making compromises in its effort to turn the technology into a commercially viable product.

Another odd thing about the demo was that after every step, we were encouraged to whoop and cheer. There was a Microsoft “mentor” for every pair of journalists, and it seemed to me that the mentors were doing most of the whooping and cheering. It is obvious that this is a big investment for the company, and I am guessing that this kind of forced enthusiasm is an effort to ensure a positive impression.

Lest you think I am too sceptical, let me add that the technology is genuinely amazing, with obvious potential both for gaming and business use.

The developer story

The development process involves Unity, Visual Studio, and of course the HoloLens device itself. The workflow is like this. You create an interactive 3D scene in Unity and build it, whereupon it becomes a Visual Studio project. You open the project in Visual Studio, and deploy it to HoloLens (connected over USB), just as you would to a smartphone. Once deployed, you disconnect the HoloLens and wear it in order to experience the scene you have created. Unity supports scripting in C#, running on Mono, which makes the development platform easy and familiar for Windows developers.

Our first “Holo World” project displayed a hologram at a fixed position determined by where you were when the app first ran. Next, we added the ability to move the hologram: select it with a finger-wag gesture, shift your gaze to another spot, and place it there with another finger wag. For this to work, HoloLens needs to map the real world, and we tried turning on a wireframe view so we could see the triangles that show where HoloLens is detecting objects.
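To make “place it where you are looking” a little more concrete, here is a conceptual sketch of what it involves: cast a ray from the head position along the gaze direction and find the nearest triangle of the mapped mesh that the ray hits. This is plain Python using the standard Möller-Trumbore ray/triangle test; it is not the HoloLens or Unity API, and the function names, the two-triangle “floor” mesh and the coordinates (metres, y pointing up) are all assumptions for illustration.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, tri: Triangle,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: return t (in multiples of `direction`) or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:  # outside the triangle (first barycentric check)
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:  # outside the triangle (second check)
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # only count hits in front of the viewer


def gaze_hit(head: Vec3, gaze: Vec3, mesh: Sequence[Triangle]) -> Optional[Vec3]:
    """Nearest point where the gaze ray meets the (hypothetical) mapped mesh."""
    best: Optional[float] = None
    for tri in mesh:
        t = ray_triangle(head, gaze, tri)
        if t is not None and (best is None or t < best):
            best = t
    if best is None:
        return None
    return (head[0] + gaze[0] * best,
            head[1] + gaze[1] * best,
            head[2] + gaze[2] * best)


# Hypothetical "spatial map": a 10 m x 10 m floor at y = 0, as two triangles.
floor = [
    ((-5.0, 0.0, -5.0), (5.0, 0.0, -5.0), (5.0, 0.0, 5.0)),
    ((-5.0, 0.0, -5.0), (5.0, 0.0, 5.0), (-5.0, 0.0, 5.0)),
]

# Head 1.7 m up, gaze tilted 45 degrees down and forward.
hit = gaze_hit((0.0, 1.7, 0.0), (0.0, -1.0, 1.0), floor)
```

Here the gaze lands on the floor about 1.7 m in front of the viewer, which is where a second gesture would drop the hologram; looking straight up finds no surface and returns `None`. A real app would typically raycast against the spatial mapping mesh supplied by the platform rather than a hand-written list of triangles.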

We also added a selection cursor, an image that looks like a red bagel (you can design your own cursor and import it into Unity). Other embellishments were the ability to select a sphere and make it fall to the floor and roll around, voice commands to drop a sphere and to reset it to its starting point, and finally “spatial audio” that appears to come from the hologram.

All of this was accomplished with a few lines of C# imported as scripts into Unity. The development was all guided so we did not have to think for ourselves, though I did add a custom voice command so I could say “abracadabra” instead of “reset scene”; this worked perfectly first time.
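Adding “abracadabra” was easy because a voice command is essentially a phrase mapped to an action, so a second phrase can simply point at the same action. The sketch below illustrates that idea in plain Python; `Scene`, `VoiceCommands` and the phrase strings are hypothetical, and it does not use Unity’s actual speech recognition API.

```python
from typing import Callable, Dict, Optional


class Scene:
    """Hypothetical stand-in for the demo scene: one sphere that can be dropped and reset."""

    def __init__(self) -> None:
        self.sphere_dropped = False

    def drop_sphere(self) -> str:
        self.sphere_dropped = True
        return "sphere dropped"

    def reset(self) -> str:
        self.sphere_dropped = False
        return "scene reset"


class VoiceCommands:
    """Maps recognized phrases to actions; several phrases may share one action."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def register(self, phrase: str, action: Callable[[], str]) -> None:
        # Phrases are stored lower-case so matching is case-insensitive.
        self._handlers[phrase.lower()] = action

    def on_phrase_recognized(self, phrase: str) -> Optional[str]:
        # Unknown phrases are ignored rather than raising an error.
        action = self._handlers.get(phrase.strip().lower())
        return action() if action is not None else None


scene = Scene()
commands = VoiceCommands()
commands.register("drop sphere", scene.drop_sphere)
commands.register("reset scene", scene.reset)
commands.register("abracadabra", scene.reset)  # custom alias for the same action
```

Keeping the phrase-to-action table separate from the scene logic is what makes a custom command a one-line change.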

For the last experiment, we added a virtual underworld. When the sphere dropped, it exploded, making a virtual pit in the floor through which you could see a virtual world with red birds flapping around. It was also possible to enter this world by positioning the hologram above your head and dropping a sphere from there.

HoloLens has three core inputs: gaze (where you are looking), gesture (such as the finger wag) and voice. In our hands-on session, gaze and voice worked really well, but gestures were more difficult and sometimes took several tries to get right.

At the end of the session, I had no doubt about the value of the technology. The development process looks easily accessible to developers who have the right 3D design skills, and Unity seems ideally suited for the project.

The main doubts concern how close HoloLens is to being a viable commercial product, at least for the mass market. The headset is bulky, the viewport is too small, and there were other minor issues, such as a lag between HoloLens detecting a physical object and that object’s actual position when it was moving, for example a person walking around.

Watch this space though; it is going to be most interesting.

>Another odd thing about the demo was that after every step, we were encouraged to whoop and cheer.

That’s hilarious.

Given how many people have complained about the limited field of view, I wonder how long MS will stick with the “no comment” approach. Are they afraid that if they actually answered the question, too much of the aura would disappear? Faced with the actual limitations, people might conclude it’s just a long-shot “science project” that won’t be practical for another 5-10 years. Which is probably what it is.