Finding time to write everything up is a struggle, so rather than risk not doing so at all, here is a quick-fire reflection on the event.

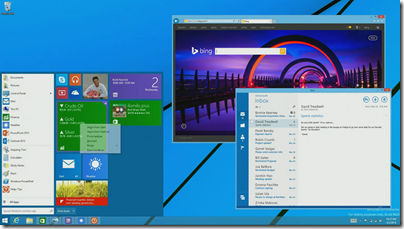

Microsoft’s Windows 10 was part of the show, of course; I’ve covered this in a separate post.

I attended MediaTek’s press event. This Taiwanese SoC (system-on-chip) company announced the 64-bit, 8-core Helio X10 chip and had some neat imaging demos. Helio is its new brand name. I was impressed with the company’s presentation; it seems to be moving quickly and delivering high-performance chips.

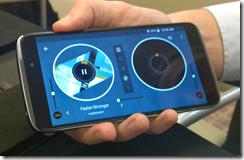

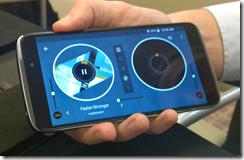

Alcatel OneTouch showed me its latest range. The IDOL 3 smartphone includes a music mixing app which is good fun.

There is also a watch, of course.

Despite using Android for its smartphones, Alcatel OneTouch says Android Wear is too heavyweight for its watches.

The Alcatel OneTouch range looks good value, but availability in the UK is patchy. I was told in Barcelona that the company will address this by selling direct through its own ecommerce site (which currently sells only accessories) and by seeking more retail presence rather than relying on carrier deals.

I attended Samsung’s launch of the Galaxy S6. Samsung is a special case at MWC. It has the largest exhibits and the biggest press launch (many partners attend too). It is not just about mobile devices but has a significant enterprise pitch with its Knox security piece.

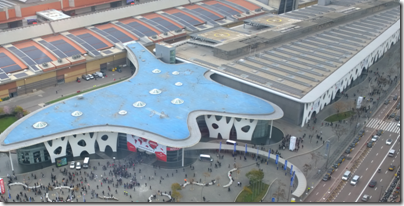

So to the launch, which took place in the huge Centre de Convencions Internacional, unfortunately on the other side of Barcelona from most of the other events.

The S5 was launched at the same venue last year, and while it was not exactly a flop, sales disappointed. Will the S6 fare better?

It’s a lovely phone, though a few things are missing compared to the S5: no microSD slot, no replaceable battery, and no water resistance. However, the S6 is more powerful, with an 8-core processor and a 1440×2560 screen versus quad-core and 1920×1080 in the S5. Samsung has also gone for a metal case with tough Gorilla Glass front and back, versus the plastic and glass construction of the S5, and most observers feel this gives the newer smartphone a more premium feel.

I suspect that these details matter less than other factors. Samsung wants to compete with the iPhone, but that is hardly possible given the hold that Apple’s brand and ecosystem have on its customers. Samsung’s problem is that the cost of an excellent smartphone has come down, and the perceived added value of a device costing over £500 or $650, versus one at half the price, is smaller than it was a couple of years ago. Although carrier deals hide these prices to some extent, they still have an impact.

Of particular note at MWC were the signs that Samsung is falling out with Google. Evidence includes the fact that Samsung Knox, which Google and Samsung announced last year would be rolled into Android, is not in fact part of Android at Work, to the puzzlement of Samsung folk I talked to on the stand. More evidence is that Samsung is bundling Microsoft’s Office 365 with Knox, not what Google wants to see when it is promoting Google Apps.

Google owns Android and intends it to pull users towards its own services; the tension between the company and its largest OEM partner will be interesting to watch.

At MWC I also met with Imagination, which I’ve covered here.

Jolla showed its crowd-funded tablet running Sailfish OS 2.0, which is based on MeeGo, the abandoned Nokia/Intel project. Most of its 128 employees are ex-Nokia.

Jolla’s purpose is not so much to sell a tablet and phone as to kick-start Sailfish, which the company hopes will become a “leading digital content and m-commerce platform”. It is targeting government officials, businesses and “privacy-aware consumers” with what it calls a “security strengthened mobile solution”. Its business model is not based on data collection, says the Jolla presentation, taking a swipe at Google, and it is both independent and European. Sailfish can run many Android apps thanks to Myriad’s Alien Dalvik runtime.

The tablet looks great and the project has merit, but what chance of success? The evidence, as far as I can tell, is that most users do not much object to their data being collected; or put another way, if they do care, it does not much affect their buying or app-using decisions. That means Sailfish will have a hard task winning customers.

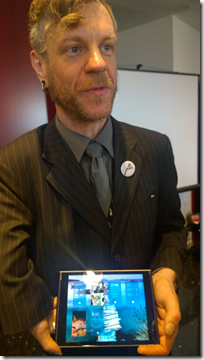

China-based ZTE is differentiating its smartphones with eye-scanning technology. The Grand S3 smartphone lets you unlock the device with Eyeprint ID, based on a biometric solution from EyeVerify.

Senior Director Waiman Lam showed me the device. “It uses the retina characteristic of your eyes for authentication,” he said. “We believe eye-scanning technology is one of the most secure biometric ways. There are ways to get around fingerprint. It’s very very secure.”

Talking of sensors, I must also mention San Francisco-based startup Boyd Sense, which has developed a smell sensor. I met with CEO Bruno Thuillier. “The idea we have is to bring gas technology to the mobile phone,” he said. Boyd Sense is using technology developed by partner Alpha MOS.

The image below shows a demo in which a prototype sensor is placed into a jar smelling of orange, which is detected and shown on the connected smartphone.

What is the use of a smell sensor? What we think of as smell is actually the ability to detect tiny quantities of chemicals, so a smell sensor is a gas analyser. “You can measure your environment,” says Thuillier. “Think about air quality. You can measure food safety. You can measure beverage safety. You can also measure your breath and some types of medical condition. There are a lot of applications.”

Not all of these ideas will be implemented immediately. Measuring gas accurately is difficult, and vulnerable to the general environment. “The result depends on humidity, temperature, speed of diffusion, and many other things,” Thuillier told me.

Of course, the first thing that comes to mind is testing your breath the morning after a heavy night out, to see if you are safe to drive. “This is not complicated, it is one gas which is ethanol,” says Thuillier. “This I can do easily.”

Analysing multiple gases is more complex, but necessary for advanced features like detecting medical conditions. Thuillier says more work needs to be done to make this work in a cheap mobile device, rather than in the equipment available in a laboratory.

I had always assumed that sampling blood is the best way to get insight into what is happening in your body, but apparently some believe breath is as good or better, as well as being easier to get at.

For this to succeed, Boyd Sense needs to make the sensor cheap enough to appeal to smartphone vendors and small enough not to spoil the design, as well as developing the analysis software.

It is an interesting idea though, and more innovative than most of what I saw on the MWC floor. Thuillier is hoping to bring something to the consumer market next year.

Finally, one of my favourite items at MWC this year was Ford’s electric bikes.

Ford showed two powered bicycles at the show, both prototypes and the outcome of an internal competition. The idea, I was told, is that bikes are ideal for the last part of a journey, especially in today’s urban environments where parking is difficult. You can put your destination into an app, get directions to the car park nearest your destination, and then dock your phone on the bike’s handlebars for turn-by-turn directions.

I also saw a prototype delivery van with three bikes in the back. Aimed at delivery companies, this would let the driver park at a convenient spot for the next three deliveries, and have bikers zip off to drop the parcels.