It is always good to learn a new language so I took advantage of the holiday season to look more closely at TypeScript. At least, that was the original intent. So far I have spent longer configuring things to work than I have on actual coding. I think of it as time invested rather than wasted.

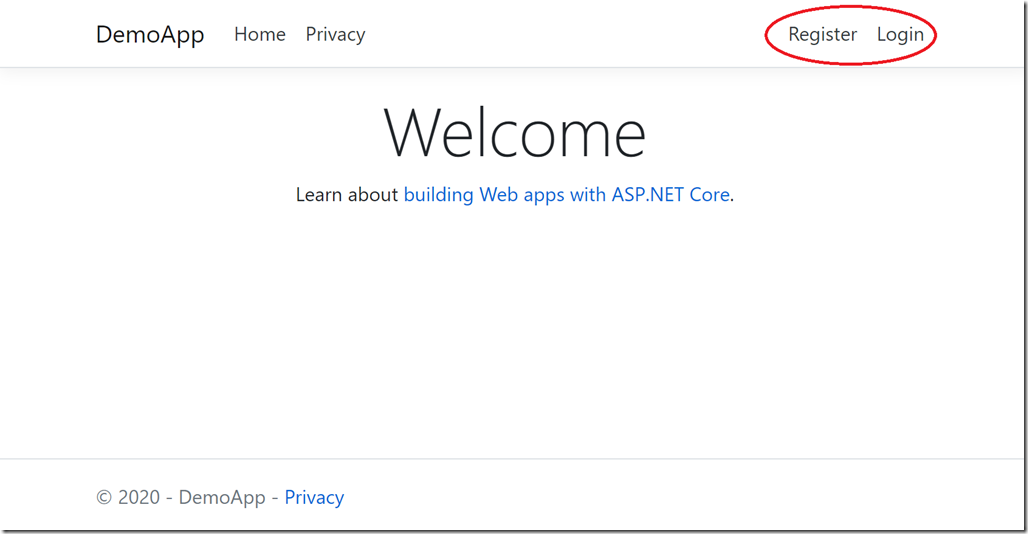

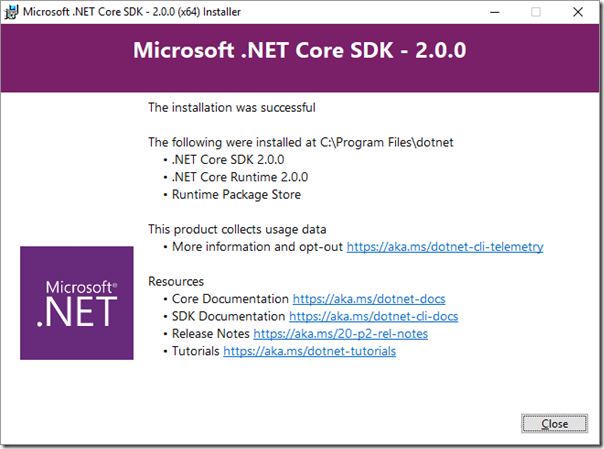

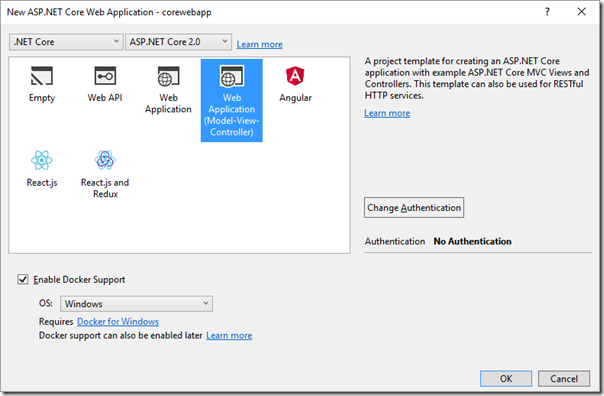

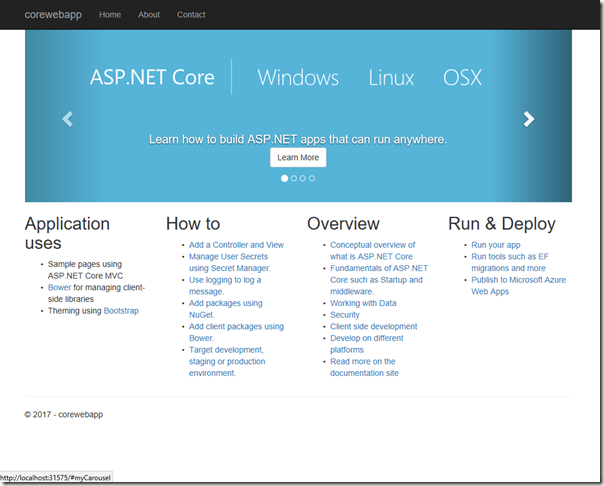

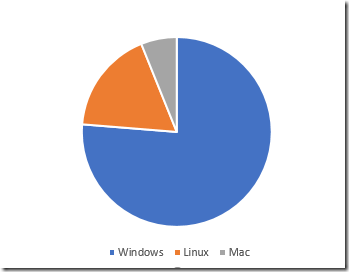

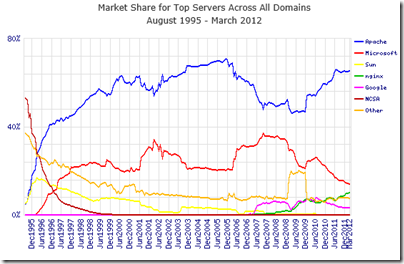

As long-term readers will know I am working on a bridge (the card game) website which has been used successfully over the lockdown period. I put this together quickly in the first half of 2020, reusing an unfinished Windows project and taking advantage of everything I could get without having to code it myself, like the ASP.NET identity system. So it is C#, ASP.NET Core, SignalR, runs on Linux on Azure App Service, and mostly coded in Visual Studio, with a few detours into Visual Studio Code.

Of course there is a ton of JavaScript involved and since the user interface for a bridge-playing game is fairly custom I did not use a JavaScript framework unless you count jQuery and Bootstrap. I wrote a separate JavaScript file for each page (possibly a mistake). I also started using the AWS Chime SDK for JavaScript which means referencing a huge 680K JavaScript file.

I therefore had several goals in mind. One was to code in TypeScript rather than JavaScript in order to take advantage of its features and catch more mistakes at compile time. Second, I wanted to optimize the JavaScript better, with automatic minification. Third, I wanted to align my project more closely with the JavaScript ecosystem. The AWS SDK, for example, is written in TypeScript using modules, but I have been using some demo code provided to compile a single JavaScript file. Maybe I can get better optimization by coding my own project in TypeScript, and importing only the modules I need.

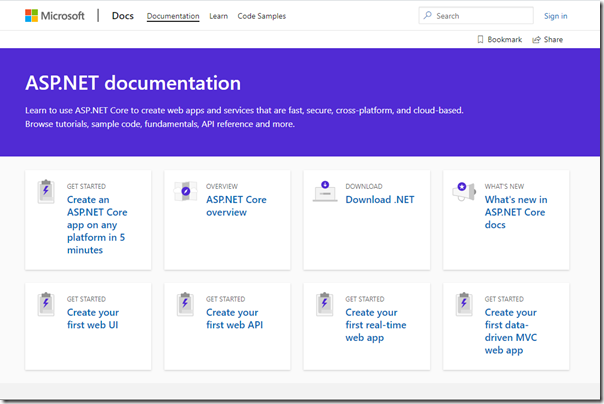

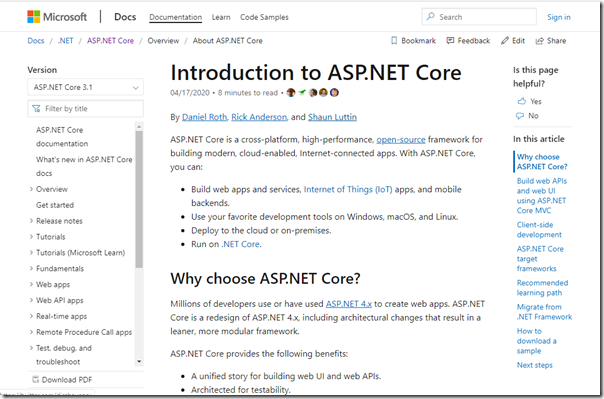

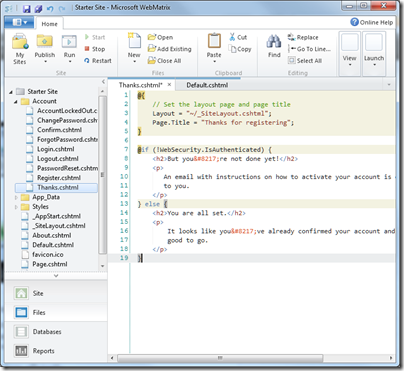

Visual Studio is not well aligned with the modern JavaScript ecosystem, as you can tell if you read this article on bundling and minification of static assets. “ASP.NET Core doesn’t provide a native bundling and minification solution,” it says, and refers developers to the WebOptimizer project or other tools such as Gulp and Webpack.

I did want to start with TypeScript though, and to begin with this looked easy. All you have to do is to add the TypeScript NuGet package, do some minimal configuration by creating and editing tsconfig.json, and you can write TypeScript and have it transpiled to JavaScript in your preferred target directory whenever the project is built. I moved a bunch of my JavaScript files to a directory of TypeScript files, renamed them from .js to .ts, and set to work making the TypeScript compiler happy.

When you do this you discover that the TypeScript compiler considers all .ts files that are not modules to be in the same scope. So if you have two files and they both contain functions called DoSomething(), the compiler throws a duplicate function error, even if you will never reference them both from the same web page. You can fix this by making them modules (it feels like TypeScript is designed on this basis) but now you have the opposite problem, that if file A references functions or variables in file B, they have to be exported and imported. A good thing in principle, but now you have import statements in the code. The TypeScript compiler does not transpile these for compatibility with browsers that do not support import, and in addition, you now have to use type="module" on script references in HTML. I also ran into issues with the libraries I use, primarily SignalR and the AWS Chime SDK. You can either npm install these and import them in the proper way, if the developers have provided TypeScript definition files (with a .d.ts extension), or find a type library via DefinitelyTyped, which provides only the types; in that case you still need to reference the library separately. There is an obvious potential version issue if you go the DefinitelyTyped route.
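To make the scope rule concrete, here is a minimal sketch of one file turned into a module. The file names, the Suit type and the function body are hypothetical, invented for illustration; only the DoSomething name comes from the example above.

```typescript
// cards.ts (hypothetical file name)
// The top-level export makes this file a module. Its declarations are now
// private to the file unless exported, so another page script can declare
// its own DoSomething() without a duplicate function error.

export type Suit = "clubs" | "diamonds" | "hearts" | "spades";

export function DoSomething(suit: Suit, rank: string): string {
  return formatCard(rank, suit);
}

// Not exported, so it is invisible to every other file.
function formatCard(rank: string, suit: Suit): string {
  return `${rank} of ${suit}`;
}

// A second file (say play.ts) would then import what it needs:
//   import { DoSomething } from "./cards.js";
// and the page would load the compiled output as a module:
//   <script type="module" src="/js/play.js"></script>
```

Note the .js extension in the import path: a browser loading native modules resolves the specifier as written, so it has to name the compiled file rather than the .ts source.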

In other words, what starts out as a simple idea of writing TypeScript instead of JavaScript soon becomes a complete refactor of the code to be modular and use imports and exports. Again, this is not a bad thing, but it is more work and not quite the incremental transition that I had in mind. I had over 1000 errors reported by the TypeScript compiler but gradually whittled them down (and this is with TypeScript set with strict off, intended to be a temporary expedient).

So I did all that but had a problem with these import statements when it came to using them in the browser. It seemed that WebPack could fix this for me, plus I could configure it to do tree-shaking to reduce code size and to use a minifier (it uses terser by default). There is a slight issue though since modern JavaScript tools like WebPack and terser are geared towards bundling all your JavaScript into a single file, and/or having a single-page application, which is not how my bridge site works. Still, it looked like it could be configured to work for me so I started down the track, using a post build step in Visual Studio to run WebPack.

I am sure this is obvious to people familiar with WebPack, but I still had problems getting my HTML pages to talk to the JavaScript. By default terser will mangle and shorten all the function names, but that is easily configured. The HTML still could not call any JavaScript functions: function not defined. Eventually I discovered that you have to configure WebPack to output a library. So if you have an HTML button that calls a JavaScript function in its onclick event handler, there are several things you need to do. First, export the function in the JavaScript (or TypeScript) code. Second, add a library name and preferably type ‘umd’ in webpack.config.js. Third, add the library name as a prefix to the function you are calling from HTML, for example mylibrary.myfunction.
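The three steps might look something like the sketch below. It is written as a TypeScript config object to match the rest of the project; the same object could be assigned to module.exports in webpack.config.js. The entry name, source path and output file name are assumptions, not the actual project layout.

```typescript
// webpack.config.ts (a sketch; entry name and paths are hypothetical)
const config = {
  mode: "production",
  entry: {
    play: "./Scripts/play.ts",
  },
  module: {
    // Compile .ts files with ts-loader before bundling.
    rules: [{ test: /\.ts$/, use: "ts-loader", exclude: /node_modules/ }],
  },
  resolve: {
    extensions: [".ts", ".js"],
  },
  output: {
    filename: "[name].js",
    // Expose whatever the entry file exports under a global called "mylibrary".
    library: { name: "mylibrary", type: "umd" },
  },
};

export default config;

// In play.ts, the function must be exported:
//   export function myfunction(): void { /* ... */ }
// In the page, the call is prefixed with the library name:
//   <button onclick="mylibrary.myfunction()">Deal</button>
```

Only functions exported from the entry file itself end up on the library object; anything exported from a deeper module has to be re-exported from the entry to be reachable from HTML.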

I also had issues with code splitting, which is essential to avoid bloated JavaScript bundles. This is done in WebPack by configuring optimization.splitChunks. When I set chunks to ‘all’, my library exports stopped working. After much trial and error, I found a fix. First, set chunkLoading to ‘jsonp’. Second, if your library variable is set to “undefined” at runtime there is a problem with one of the bundled JavaScript files. Unfortunately this was not reported as an error in the browser console: that is, the undefined library variable was reported, but not the reason for it. I tracked it down to a call to document.readyState or possibly document.addEventListener; using jQuery instead fixed it.
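As a rough sketch, the two settings mentioned here sit in different parts of the config. The fragment below shows only those keys and is meant to be merged into an existing config object; the variable name is made up for illustration.

```typescript
// Fragment of a webpack config (a sketch; merge into the full config object).
const codeSplitting = {
  output: {
    // How the runtime loads chunks that were split out of the entry bundle.
    chunkLoading: "jsonp",
  },
  optimization: {
    splitChunks: {
      // Consider both initial and dynamically imported modules for splitting.
      chunks: "all",
    },
  },
};
```

With chunks set to ‘all’, code shared between entries is emitted as separate files, and a page generally needs script tags for those files as well as for its own entry bundle. That may be worth checking if the library variable turns out to be undefined, though it is not necessarily what happened in this case.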

Another tip: do not call any JavaScript code directly from cshtml, other than via event handlers. It might try to run before the JavaScript is loaded. I found it easiest to put initialization code in a function and call it from JavaScript. You can put the function declaration into index.d.ts to keep TypeScript happy, since it is external.
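One way to sketch this is shown below. It is a slightly defensive variant rather than exactly what the site does: the helper checks that the page actually defined an initializer before calling it. The names initPage, PageScope and runPageInit are hypothetical.

```typescript
// index.d.ts would hold the ambient declaration for the function that the
// page itself defines (the name is hypothetical):
//   declare function initPage(): void;

// Shape of an object that may carry the page-defined initializer.
interface PageScope {
  initPage?: () => void;
}

// Call the page's initializer if there is one; report whether it ran.
function runPageInit(scope: PageScope): boolean {
  if (typeof scope.initPage === "function") {
    scope.initPage();
    return true;
  }
  return false;
}

// In the bundle's entry file, once the document is ready:
//   document.addEventListener("DOMContentLoaded", () => runPageInit(window));
```

Because the call comes from the bundle rather than from inline script in the cshtml, it cannot run before the bundle has loaded.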

Is it worth it? Watch this space. It has pushed me into refactoring which is improving the structure of my code, but I have also added complexity with a build process that compiles TypeScript to JavaScript (using ts-loader), then merges and splits files with WebPack, while along the way minifying and mangling it with terser. Yes I have encountered unexpected behaviour, partly thanks to my inexperience, but the interactions between jQuery, WebPack and library exports, for example, are quite complex. I have spent time and energy wrestling with WebPack, instead of coding my application. There is a lot to be said for my old approach, where you code in JavaScript and it runs as JavaScript and it is easier to trace what is going on.

Still, it is working and I have achieved some of my goals – but the AWS Chime SDK file is still huge, just 30K smaller than before, which is disappointing. Perhaps there is something I have missed. I will be coding in TypeScript now and look forward to further refactoring as I get to know the language.

Update: I have abandoned this experiment. There were niggling problems with the WebPack bundles and I came to the conclusion that it is unsuitable for a multi-page application. A shame as I wanted to use WebPack; but for the time being I am just using TypeScript with terser. This means I am using native ES modules in the browser and I intend to write up the experience soon.