At Microsoft’s Build event in May this year I interviewed Dharma Shukla, Technical Fellow for the Azure Data group, about Cosmos DB. I enjoyed the interview but have not made use of the material until now, so even though Build was some time back I wanted to share some of his remarks.

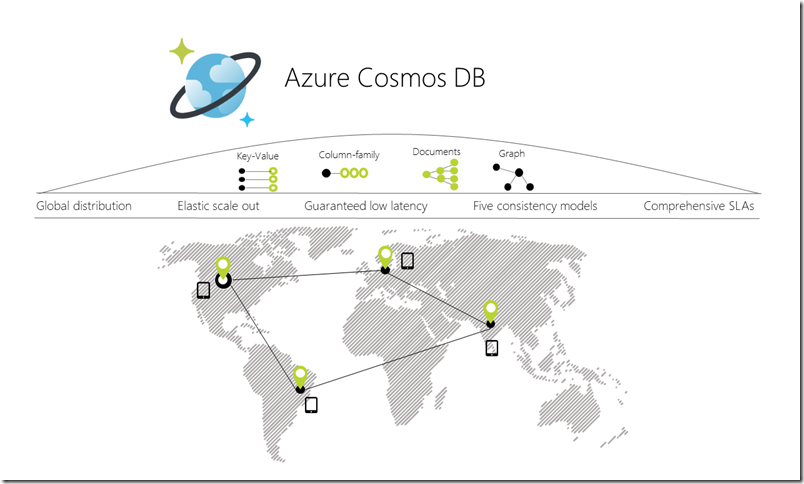

Cosmos DB is Microsoft’s cloud-hosted NoSQL database. It began life as DocumentDB, and was re-launched as Cosmos DB at Build 2017. There are several things I did not appreciate at the time. One was how much use Microsoft itself makes of Cosmos DB, including for Azure Active Directory, the identity provider behind Office 365. Another was how low Cosmos DB sits in the overall Azure cloud system. It is a foundational piece, as Shukla explains below.

There were several Cosmos DB announcements at Build. What’s new?

“Multi-master is one of the capabilities that we announced yesterday. It allows developers to scale writes all around the world. Until yesterday Cosmos DB allowed you to scale writes in a single region but reads all around the world. Now we allow developers to scale reads and writes homogeneously all round the world. This is a huge deal for apps like IoT, connected cars, sensors, wearables. The amount of writes are far more than the amount of reads.

“The second thing is that now you get single-digit millisecond write latencies at the 99 percentile not just in one region.

“And the third piece is that what falls out of this high availability. The window of failover, the time it takes to failover from one region when a disaster happens, to the other, has shrunk significantly.

“It’s the only system I know of that has married the high consistency models that we have exposed with multi-master capability as well. It had to reach a certain level of maturity, testing it with first-party Microsoft applications at scale and then with a select set of external customers. That’s why it took us a long time.

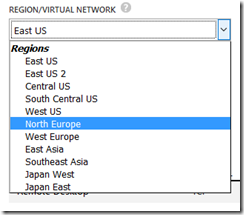

“We also announced the ability to have your Cosmos Db database in your own VNet (virtual network). It’s a huge deal for enterprises where they want to make sure that no data leaks out of that VNet. To do it for a global distributed database is specially hard because you have to close all the transitive networking dependencies.”

Technical Fellow Dharma Shukla

Does Cosmos DB work on Azure Stack?

“We are in the process of going to Azure Stack. Azure Stack is one of the top customer asks. A lot of customers want a hybrid Cosmos DB on Azure Stack as well as in Azure and then have Active – Active. One of the design considerations for multi master is for edge devices. Right now Azure has about 50 regions. Azure’s going to expand to let’s say 200 regions. So a customer’s single Cosmos DB table spanning all these regions is one level of scalability. But the architecture is such that if you directly attach lots of Azure Stack devices, or you have sensors and edge devices, they can also pretend to be replicas. They can also pretend to be an Azure region. So you can attach billions of endpoints to your table. Some of those endpoints could be Azure regions, some of them could be instances of Azure Stack, or IoT hub, or edge devices. This kind of scalability is core to the system.”

Have customers asked for any additional APIs into Cosmos DB?

“There is a list of APIs, HBase, richer SQL, there are a number of such API requests. The good news is that the system has been built in a way that adding new APIs is relatively easy addition. So depending on the demand we continue to add APIs.”

Can you tell me anything about how you’ve implemented Cosmos DB? I know you use Service Fabric. Do you use other Azure services?

“We have dedicated clusters of compute machines. Cosmos DB is a Ring 0 service. So it’s there any time Azure opens a new region, Cosmos DB clusters have provision by default. Just like compute, storage, Cosmos DB is also one of the Ring 0 services which is the bottommost. Azure Active Directory for example depends on Cosmos DB. So Cosmos DB cannot take a dependency on Active Directory.

“The dependency that we have is our own clusters and machines, on which we put Service Fabric. For deployment of Cosmos DB code itself, we use Service Fabric. For some of the load balancing aspects we use Service Fabric. The partition management, global distribution, replication, is our own. So Cosmos DB is layered on top of Service Fabric, it is a Service Fabric application. But then it takes over. Once the Cosmos DB bits are laid out on the machine then its replication and partition management and distribution pieces take over. So that is the layering.

“Other than that there is no dependency on Azure. And that is why one of the salient aspects of this is that you can take the system and host it easily in places like Azure Stack. The dependencies are very small.

“We don’t use Azure Storage because of that dependency. So we store the data locally and then replicate it. And all of that data is also encrypted at rest.”

So when you say it is not currently in Azure Stack, it’s there underneath, but you haven’t surfaced it?

“It is in a defunct mode. We have to do a lot of work to light it up. When we light up it on such on-prem or private cloud devices, we want to enable this active to active pathway. So you are replicating your data and that is getting synchronized with the cloud and Azure Stack is one of the sockets.”

Microsoft itself is using Cosmos DB. How far back does this go? Azure AD is quite old now. Was it always on Cosmos DB / DocumentDB?

“Over the years Office 365, Xbox, Skype, Bing, and more and more of Azure services, have started moving. Now it has almost become ubiquitous. Because it’s at the bottom of the stack, taking a dependency on it is very easy.

“Azure Active Directory consists of a set of microservices. So they progressively have moved to Cosmos DB. Same situation with Dynamics, and our slew of such applications. Skype is by and large on Cosmos DB now. There are still some fragments of the past. Xbox and the Microsoft Store and others are running on it.”

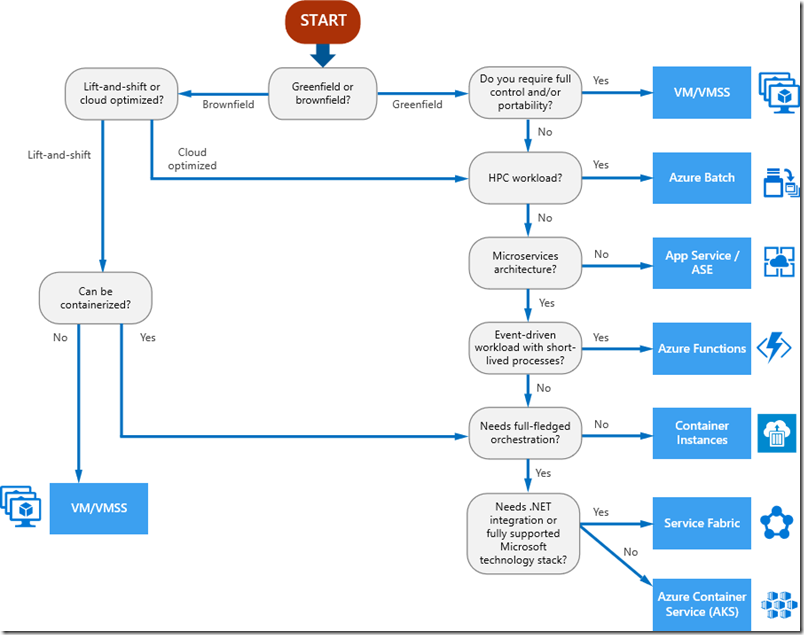

Do you think your customers are good at making the right choices over which database technology to use? I do pick up some uncertainty about this.

“We are working on making sure that we provide that clarity. Postgres and MySQL and MariaDB and SQL Server, Azure SQL and elastic pools, managed instances, there is a whole slew of relational offerings. Then we have Cosmos DB and then lots of analytical offerings as well.

“If you are a relational app, and if you are using a relational database, and you are migrating from on-prem to Azure, then we recommend the relational family. It comes with this fundamental scale caveat which is that up to 4TB. Most of those customers are settled because they have designed the app around those sorts of scalability limitations.

“A subset of those customers, and a whole bunch of brand new customers, are willing to re-write the app. They know that that they want to come to cloud for scale. So then we pitch Cosmos DB.

“Then there are customers who want to do massive scale offline analytical processing. So there is, Databricks, Spark, HD Insight, and that set of services.

“We realise there are grey lines between these offerings. We’re tightening up the guidance, it’s valid feedback.”

Any numbers to flesh out the idea that this is a fast-growing service for Microsoft?

“I can tell you that the number of new clusters we provision every week is far more than the total number of clusters we had in the first month. The growth is staggering.”