I attended the Amazon Web Services (AWS) London Summit. Not much news there, since the big announcements were the week before in San Francisco, but a chance to drill into some of the AWS services and keep up to date with the platform.

The keynote by CTO Werner Vogels was a bit too much relentless promotion for my taste, but I am interested in the idea he put forward that cloud computing will gradually take over from on-premises and that more and more organisations will go “all in” on Amazon’s cloud. He cited some examples (Netflix, Intuit, Tibco, Splunk) though I am not quite clear whether these companies have 100% of their internal IT systems on AWS, or merely that they run the entirety of their services (their product) on AWS. The general argument is compelling, especially when you consider the number of services now on offer from AWS and the difficulty of replicating them on-premises (I wrote this up briefly on the Reg). I don’t swallow it wholesale though. You have to look at the costs carefully, and there is also the loss of control when you base your IT infrastructure on a public cloud provider, which to my mind is a bigger negative factor than security.

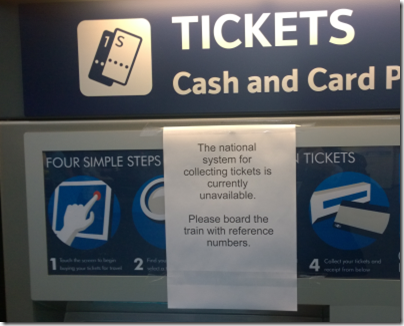

As it happens, the ticket systems for my train into London were down that morning, which meant that purchasers of advance tickets online could not collect their tickets.

The consequences of this outage were not too serious, in that the trains still ran, but of course there were plenty of people travelling without tickets (I was one of them) and ticket checking was much reduced. I am not suggesting that this service runs on AWS (I have no idea) but it did get me thinking about the impact on business when applications fail; and that led me to the question: what are the long-term implications of our IT systems and even our economy becoming increasingly dependent on a (very) small number of companies for their health? It seems to me that the risks are difficult to assess, no matter how much respect we have for the AWS engineers.

I enjoyed the technical sessions more than the keynote. I attended Dean Bryen’s session on AWS Lambda, “Event-driven code in the cloud”, where I discovered that the scope of Lambda is greater than I had previously realised. Lambda lets you write code that runs in response to events, but what is also interesting is that it is a platform-as-a-service (PaaS) offering, where you simply supply the code and AWS runs it for you:

> AWS Lambda runs your custom code on a high-availability compute infrastructure and administers all of the compute resources, including server and operating system maintenance, capacity provisioning and automatic scaling, code, and security patches.

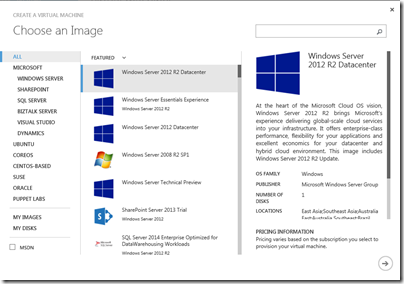

This is a different model from running applications in EC2 (Elastic Compute Cloud) VMs or even in Docker containers, which on AWS also run on VMs. Of course we know that Lambda ultimately runs in VMs as well, but these details are abstracted away and scaling is automatic, which arguably is a better model for cloud computing. Azure Cloud Services or Heroku apps are somewhat like this, but neither is very pure; with Azure Cloud Services you still have to worry about how many VMs you are using, and with Heroku you have to think about dynos (app containers). Google App Engine is another example and autoscales, though you are charged by application instance count so you still have to think in those terms. With Lambda you are charged based on the number of requests, how long your code runs, and the amount of memory allocated, making it perhaps the best abstracted of all these PaaS examples.
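To make the charging model concrete, here is a minimal sketch of how a bill built from requests, duration and memory might be estimated. The function name, the `LambdaRates` class and the default rates are my own placeholders for illustration, not figures from the session, and the sketch ignores any free tier or rounding of durations; check the AWS pricing page for real numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LambdaRates:
    """Placeholder prices for illustration only, not authoritative."""
    per_million_requests: float = 0.20
    per_gb_second: float = 0.00001667


def estimate_monthly_cost(requests, avg_duration_ms, memory_mb,
                          rates=LambdaRates()):
    """Estimate a monthly charge from the three billing dimensions."""
    # Compute usage is duration scaled by allocated memory (GB-seconds).
    gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_cost = requests / 1_000_000 * rates.per_million_requests
    compute_cost = gb_seconds * rates.per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # Example: five million requests a month, 200 ms each, 256 MB allocated.
    print(round(estimate_monthly_cost(5_000_000, 200, 256), 2))
```

The point is that nothing in the calculation refers to a server, VM or instance count, which is what distinguishes this model from the others mentioned above.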

But Lambda is just for event-handling, right? Not quite; it now supports synchronous as well as asynchronous event handling and you could create large applications on the service if you chose. It is well suited to services for mobile applications, for example. Java support is on the way, as an alternative to the existing Node.js support. I will be interested to see how this evolves.
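The difference between the two styles of invocation can be sketched locally. This is not the Lambda API, and it is written in Python for brevity rather than the Node.js that Lambda supports today; `handler`, `invoke_sync` and `invoke_async` are hypothetical names for a small simulation in which the same function is either called and waited on, or handed an event and left to get on with it.

```python
import queue
import threading


def handler(event, context=None):
    """Hypothetical handler: builds a greeting from the event payload."""
    name = event.get("name", "world")
    return {"message": f"Hello, {name}"}


def invoke_sync(fn, event):
    """Request/response style: the caller blocks until the result is ready."""
    return fn(event)


def invoke_async(fn, event, results):
    """Event style: the caller hands over the event and returns at once.

    The result, if anyone wants it, arrives later on the `results` queue.
    """
    worker = threading.Thread(target=lambda: results.put(fn(event)))
    worker.start()
    return worker


if __name__ == "__main__":
    print(invoke_sync(handler, {"name": "London"}))

    results = queue.Queue()
    worker = invoke_async(handler, {"name": "Summit"}, results)
    worker.join()
    print(results.get())
```

The synchronous style is the one that suits a mobile back end, where the app is waiting for an answer; the asynchronous style fits reacting to something like a file upload.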

I also went along to Carlos Conde’s session on Amazon Machine Learning (one instance in which AWS has trailed Microsoft Azure, which already has a machine learning service). Machine learning is not that easy to explain in simple terms, but I thought Conde did a great job. He showed us a spreadsheet which was a simple database of contacts with fields for age, income, location, job and so on. There was also a Boolean field for whether they had purchased a certain financial product after it had been offered to them. The idea was to feed this spreadsheet to the machine learning service, and then to upload a similar table but of different contacts and without the last field. The job of the service was to predict whether or not each contact listed would purchase the product. The service returned results with this field populated along with a confidence indicator. A simple example with obvious practical benefit, presuming of course that the prediction has reasonable accuracy.
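The shape of that workflow (train on a labelled table, then predict the missing column with a confidence figure) can be sketched in a few lines. To be clear, this is not the Amazon Machine Learning API and I am not claiming it is the algorithm the service uses; it is a plain logistic regression written from scratch as a stand-in, and the contacts, the two fields (age and income, both scaled) and all the names are invented for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(rows, labels, epochs=2000, lr=0.5):
    """Fit logistic regression weights by simple per-row gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            error = p - y
            bias -= lr * error
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights, bias


def predict(model, x):
    """Return (purchased?, confidence) for one unlabelled contact."""
    weights, bias = model
    p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
    purchased = p >= 0.5
    confidence = p if purchased else 1.0 - p
    return purchased, confidence


# Invented training table: (age / 100, income / 100000) per contact,
# with 1 meaning the contact bought the product and 0 meaning they did not.
TRAINING_ROWS = [(0.25, 0.20), (0.30, 0.25), (0.35, 0.30),
                 (0.50, 0.60), (0.55, 0.70), (0.60, 0.80)]
TRAINING_LABELS = [0, 0, 0, 1, 1, 1]

MODEL = train(TRAINING_ROWS, TRAINING_LABELS)

if __name__ == "__main__":
    # New contacts, supplied without the "purchased" field.
    for contact in [(0.28, 0.23), (0.58, 0.76)]:
        print(contact, predict(MODEL, contact))
```

A real service also has to cope with text and categorical fields such as location and job, missing values, and evaluation of how accurate the model actually is, which is where the value of a managed offering lies.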