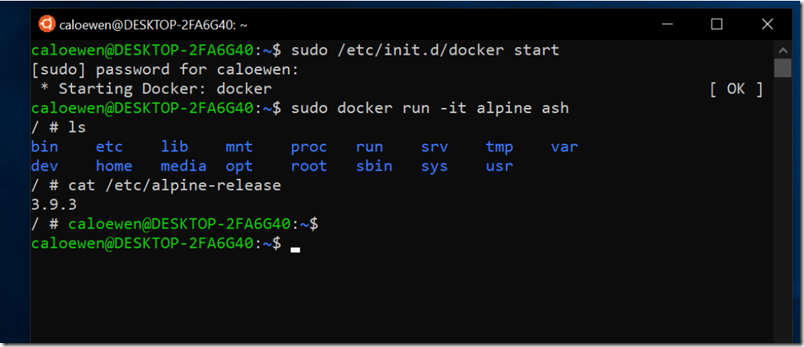

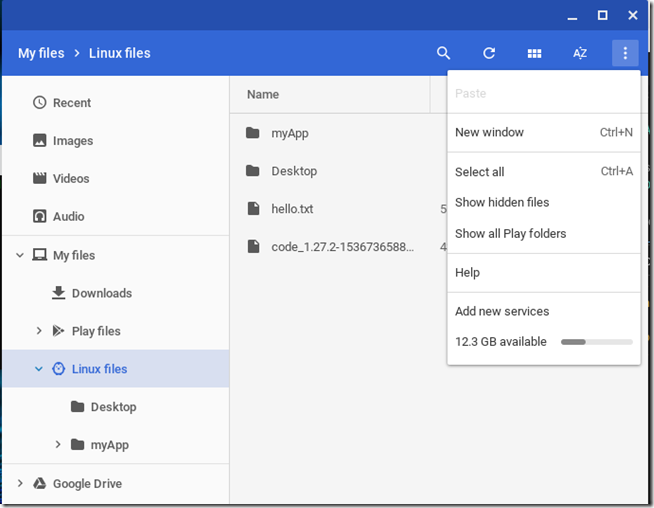

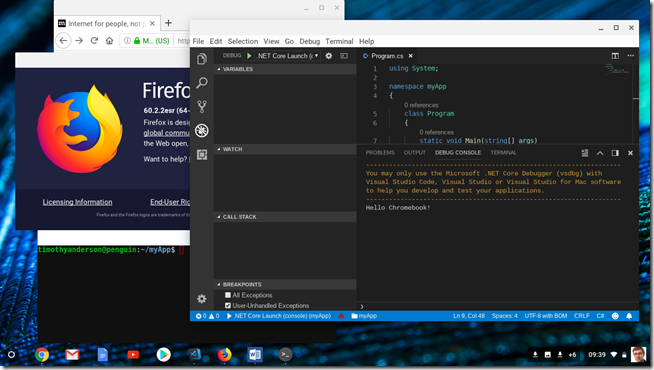

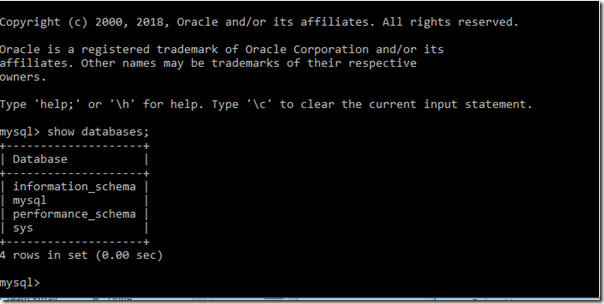

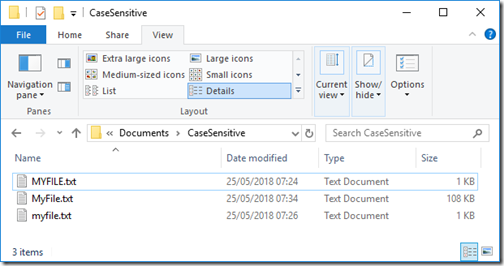

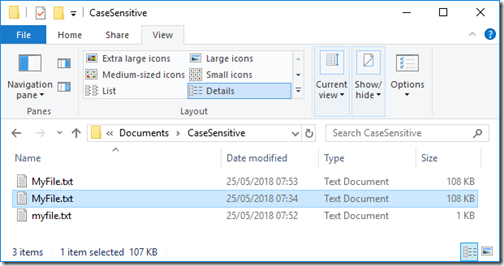

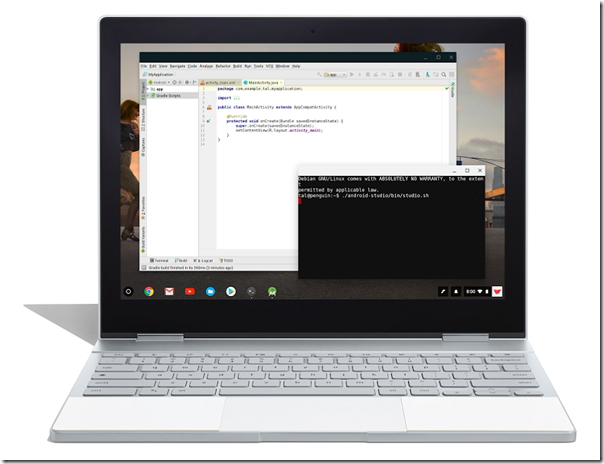

It is a feature that most users are not even aware of, but for developers and admins the Windows Subsystem for Linux (WSL) is perhaps the best feature of Windows 10. It gives you seamless access to Linux applications and utilities without needing to run a virtual machine (VM) or remote session. For example, I use it to develop and debug LAMP (Linux, Apache, MySQL, PHP) applications, with Visual Studio Code on Windows as the editor. I also use it to run the Let’s Encrypt certbot utility and the Linux OpenSSL utilities. It also sidesteps Windows annoyances like path limitations and case insensitivity.

Now at the Build developer conference Microsoft has introduced WSL 2, advertising “dramatic file system performance increases, and full system call compatibility.” That is great, but there is a downside. Unlike the first version, WSL 2 runs in a VM:

“WSL 2 uses the latest and greatest in virtualization technology to run its Linux kernel inside of a lightweight utility virtual machine (VM),” says the announcement from Microsoft’s Craig Loewen.

Although Microsoft also says that WSL 2 “still provides the same user experience as in WSL 1,” this is not altogether true. One specific difference: currently I can run my LAMP application, fire up a Windows browser, navigate to localhost, and there is my application. In WSL 2, the LAMP application will have a different IP address, so this will not work. To be fair, when I discussed this with a member of the team I was told that they are working to address this and will adjust the networking so that localhost works again. It is also arguable that the different IP address is preferable behaviour, since it will not conflict with other endpoints on the Windows side. But it is different.
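To make the localhost difference concrete, here is a minimal Python sketch that could be run on the Windows side. It tries a list of candidate hosts in order and reports the first one that accepts a TCP connection. The function name `first_reachable` is my own invention for illustration, and nothing here comes from Microsoft's tooling.

```python
import socket


def first_reachable(hosts, port, timeout=1.0):
    """Return the first host accepting a TCP connection on `port`, or None."""
    for host in hosts:
        try:
            # create_connection raises OSError on refusal, timeout, or DNS failure
            with socket.create_connection((host, port), timeout=timeout):
                return host
        except OSError:
            continue
    return None
```

With WSL 1, calling `first_reachable(["localhost"], 80)` from Windows should find the Apache instance. With WSL 2 as described above, you would presumably need to add the VM's own address to the list, whatever that turns out to be on your machine.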

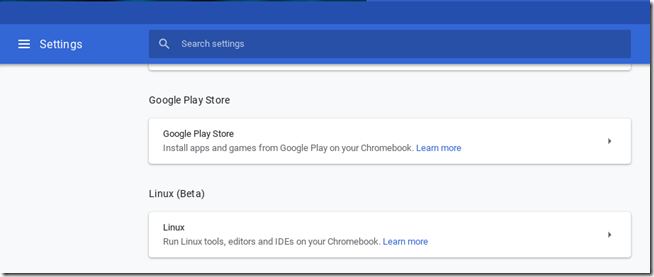

The use of a VM for WSL 2 is the conventional approach to this problem. In fact, you have been able to run a Linux VM on Windows for many years. The difference is the work Microsoft is doing to provide the fastest possible startup and deep integration with the file system, so that it behaves more like the original WSL than like an isolated VM. In other words, the problem of running Linux binaries by redirecting system calls (the original WSL) has been exchanged for a different one: making a VM feel like part of Windows.

Why the change of direction? There are several reasons.

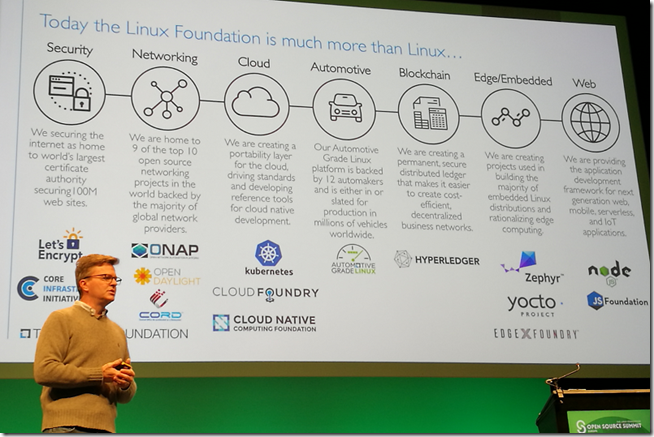

The first is compatibility. No matter how well WSL worked (and it does work very well), there would always be something that did not work as users attempted to use more and more Linux applications.

Second, performance. Apparently:

“Initial tests that we’ve run have WSL 2 running up to 20x faster compared to WSL 1 when unpacking a zipped tarball, and around 2-5x faster when using git clone, npm install and cmake on various projects.”

Third, when WSL was first conceived it was intended to work on mobile devices which could not support a VM (maybe this was something to do with Android compatibility efforts on Windows Phone).

Finally, Hyper-V has improved to the extent that running WSL 2 in a VM is more feasible.

The change does mean that Microsoft will ship its own (but open source) Linux kernel with Windows and update it via Windows Update, which is a good thing for security.
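The performance claim is the easiest of these reasons to check for yourself. As a rough sketch, and not Microsoft's test method, the following Python builds a gzip-compressed tarball of many small files and times how long it takes to unpack. The function names, file count, and file size are my own assumptions, chosen only for illustration.

```python
import io
import tarfile
import tempfile
import time
from pathlib import Path


def build_tarball(path, file_count=200, size=1024):
    """Write a gzip-compressed tarball of `file_count` small files to `path`."""
    payload = b"x" * size
    with tarfile.open(path, "w:gz") as tar:
        for i in range(file_count):
            info = tarfile.TarInfo(name=f"files/file_{i}.txt")
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))


def time_unpack(tarball, dest):
    """Extract `tarball` into `dest`; return (seconds, files written)."""
    start = time.perf_counter()
    with tarfile.open(tarball, "r:gz") as tar:
        tar.extractall(dest)
    elapsed = time.perf_counter() - start
    count = sum(1 for p in Path(dest).rglob("*") if p.is_file())
    return elapsed, count


def run_benchmark(file_count=200):
    """Build and unpack a sample tarball inside a temporary directory."""
    with tempfile.TemporaryDirectory() as tmp:
        tarball = Path(tmp) / "sample.tar.gz"
        build_tarball(tarball, file_count=file_count)
        return time_unpack(tarball, Path(tmp) / "out")
```

Running `run_benchmark()` under WSL 1 and again under WSL 2 on the same machine would give a crude comparison. A single run like this is noisy, so treat the numbers as indicative at best.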

The reasons are good ones, but it would not surprise me to see other niggling integration issues. And it is just a little sad that the magic of the original WSL has been replaced by a more conventional approach.

I also feel that if you came to Build looking for evidence that Microsoft is drifting away from Windows and towards Linux, WSL 2 would fit that narrative.