CES in Las Vegas is an amazing event, partly through sheer scale. It is the largest trade show in Vegas, America’s trade show city. Apparently it was also the largest CES ever: two million square feet of exhibition space, 3,200 exhibitors, 150,000 industry attendees, of whom 35,000 were from outside the USA.

It follows that CES is beyond the ability of any one person to see in its entirety. Further, it is far from an even representation of the consumer tech industry. Notable absentees include Apple, Google and Microsoft – though Microsoft for one booked a rather large space in the Venetian hotel which was used for private meetings. The primary purpose of CES, as another journalist explained to me, is for Asian companies to do deals with US and international buyers. The success of WowWee’s stand for app-controllable MiP robots, for example, probably determines how many of the things you will see in the shops in the 2014/15 winter season.

The kingmakers at CES are the people going round with badges marked Buyer. The press events are a side-show.

CES is also among the world’s biggest trade shows for consumer audio and high-end audio, which is a bonus for me as I have an interest in such things.

Now some observations. First, a reminder that CEA (the organisation behind CES) kicked off the event with a somewhat downbeat presentation showing that global consumer tech spending is essentially flat. Smartphones and tablets are growing, but prices are falling, and most other categories are contracting. Converged devices are reducing overall spend. Once you had a camera, a phone and a music player; now the phone does all three.

Second, if there is one dominant presence at CES, it is Samsung. Press counted themselves lucky even to get into the press conference. A showy presentation convinced us that we really want not only UHD (4K UHD is 3840 x 2160 resolution) video, but also a curved screen, for a more immersive experience; or even the best of both worlds, an 85” bendable UHD TV which transforms from flat to curved.

We already knew that 4K video will go mainstream, but there is more uncertainty about the future connected home. Samsung had a lot to say about this too, unveiling its Smart Home service. A Smart Home Protocol (SHP) will connect devices and home appliances, and an app will let you manage them. Home View will let you view your home remotely. Third parties will be invited to participate. More on the Smart Home is here.

The technology is there, but there are several stumbling blocks. One is political. Will Apple want to participate in Samsung’s Smart Home? Will Google? Will Microsoft? What about competitors making home appliances? The answer is that nobody will want to cede control of the Smart Home specifications to Samsung, so it can only succeed through sheer muscle, or by making some alliances.

The other question is around value for money. If you are buying a fridge freezer, how high on your list of requirements is SHP compatibility? How much extra will you spend? If the answer is that old-fashioned attributes like capacity, reliability and running cost are all more important, then the Smart Home cannot happen until there are agreed standards and a low cost of implementation. It will come, but not necessarily from Samsung.

Samsung did not say that much about its mobile devices. No Galaxy S5 yet; maybe at Mobile World Congress next month. It did announce the Galaxy Note Pro and Galaxy Tab Pro series in three sizes; the “Pro” designation intrigues me as it suggests the intention that these be business devices, part of the “death of the PC” theme which was also present at CES.

Samsung did not need to say much about mobile because it knows it is winning. Huawei proudly announced that it is 3rd in smartphones after Samsung and Apple, with a … 4.8% market share, which says all you need to know.

That said, Huawei made a rather good presentation, showing off its forthcoming Ascend Mate2 4G smartphone, with a 6.1” display, long battery life (more than double that of the iPhone 5S is claimed, with more than 2 days in normal use), a 5MP front camera for selfies and a 13MP rear camera; full specs here. No price yet, but expect it to be competitive.

Sony also had a good CES, with indications that PlayStation 4 is besting Xbox One in the early days of the next-gen console wars, and a stylish stand reminding us that Sony knows how to design good-looking kit. Sony’s theme was 4K becoming more affordable, with its FDR-AX100 camcorder offering 4K support in a device no larger than most camcorders; unfortunately the sample video we saw did not look particularly good.

Sony also showed the Xperia Z1 Compact smartphone, which went down well, and teased us with an introduction to Sony SmartWear wearable entertainment and “life log” capture. We saw the unremarkable “core” gadget which will capture the data, but await more details.

Another Sony theme was high resolution audio, on which I am writing a detailed piece (not just about Sony) to follow.

As for Microsoft Windows, it was mostly lost behind a sea of Android and other devices, though I will note that Lenovo impressed with its new range of Windows 8 tablets and hybrids – like the 8” ThinkPad with Windows 8.1 Pro and full HD 1920×1200 display – more details here.

There is an optional USB 3.0 dock for the ThinkPad 8 but I commented to the Lenovo folk that the device really needs a keyboard cover. I mentioned this again at the Kensington stand during the Mobile Focus Digital Experience event, and they told me they would go over and have a look then and there; so if a nice Kensington keyboard cover appears for the ThinkPad 8 you have me to thank.

Whereas Lenovo strikes me as a company which is striving to get the best from Windows 8, I was less impressed by the Asus press event, mainly because I doubt the Windows/Android dual boot concept will take off. Asus showed the TD300 Transformer Book Duet which runs both. I understand why OEMs are trying to bolt together the main business operating system with the most popular tablet OS, but I dislike dual boot systems, and if the Windows 8 dual personality with Metro and desktop is difficult, then a Windows/Android hybrid is more so. I’d guess there is more future in Android emulation on Windows. Run Android apps in a window? Asus did also announce its own 8” Windows 8.1 tablet, but did not think it worth attention in its CES press conference.

Wearables were a theme at CES, especially in the health area, and there was a substantial iHealth section to browse around.

I am not sure where this is going, but it seems to me inevitable that self-monitoring of how well or badly our bodies are functioning will become commonplace. The result will be fodder for hypochondriacs, but I think there will be real benefits too, in terms of motivation for exercise and healthy diets, and better warning and reaction for critical problems like heart attacks. The worry is that all that data will somehow find its way to Google or health insurance companies, raising premiums for those who need it most. As to which of the many companies jostling for position in this space will survive, that is another matter.

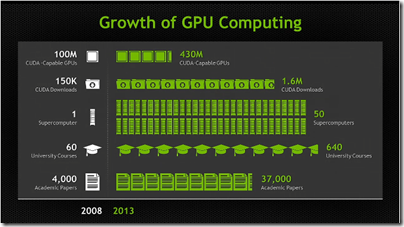

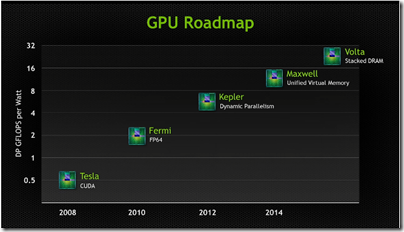

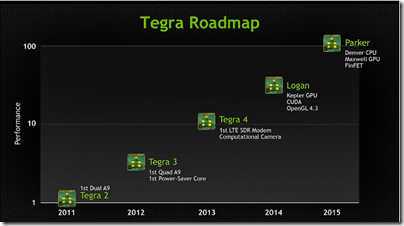

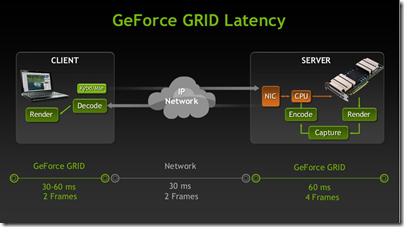

What else? It is a matter of where to stop. I was impressed by NVidia’s demo rig showing three 4K displays driven by a GTX-equipped PC; my snap absolutely does not capture the impact of the driving game being shown.

I was also impressed by NVidia’s ability to befuddle the press at its launch of the Tegra K1 chipset, confusing 192 CUDA cores with CPU cores. Having said that, the CUDA support does mean you can use those cores for general-purpose programming and I see huge potential in this for more powerful image processing on the device, for example. Tegra 4 on the Surface 2 is an excellent experience, and I hope Microsoft follows up with a K1 model in due course even though that looks doubtful.

There were of course many intriguing devices on show at CES, on some of which I will report over at the Gadget Writing blog, and much wild and wonderful high-end audio.

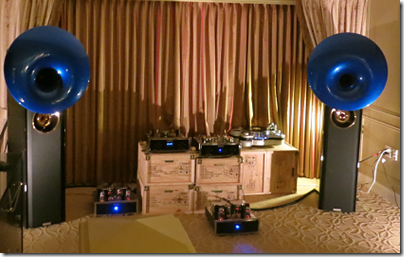

On audio I will note this. Bang & Olufsen showed a stylish home system, largely wireless, but the sound was disappointing (it also struck me as significant that Android or iOS is required to use it). The audiophiles over in the Venetian tower may have loopy ideas, but they had the best sounds.

CES can do retro as well as next gen; the last pinball machine manufacturer displayed at Digital Experience, while vinyl, tubes and horns were on display over in the tower.

![image_thumb[14] image_thumb[14]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb14_thumb.png)

![image_thumb[16] image_thumb[16]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb16_thumb.png)

![image_thumb[17] image_thumb[17]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb17_thumb.png)

![image_thumb[18] image_thumb[18]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb18_thumb.png)

![image_thumb[13] image_thumb[13]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb13_thumb.png)