NVIDIA’s GPU Technology Conference is an unusual event: in part a get-together for academic researchers using HPC, in part a marketing pitch for the company. The focus of the event is GPU computing, in other words using the GPU for purposes other than driving a display, such as running simulations to model climate change or fluid dynamics, or processing huge amounts of data to calculate where best to drill for oil. However, NVIDIA also uses the event to announce its latest GPU innovations, and CEO Jen-Hsun Huang used this morning’s keynote to introduce its GPU-in-the-cloud initiative.

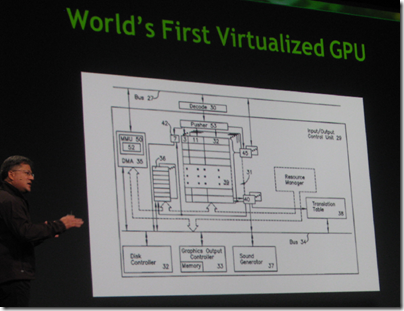

This takes two forms, though both are based on a feature of NVIDIA’s new “Kepler” generation of GPUs which allows them to render graphics to a stream rather than to a display. It is the world’s first virtualized GPU, he claimed.

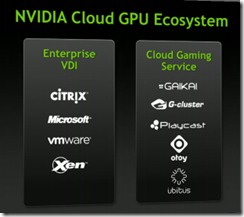

The first target is enterprise VDI (Virtual Desktop Infrastructure). The idea is that in the era of BYOD (Bring Your Own Device) there is high demand for the ability to run Windows applications on devices of every kind, perhaps especially Apple iPads. This works fine via virtualisation for everyday applications, but what about GPU-intensive applications such as AutoCAD or Adobe Photoshop? Using a Kepler GPU, you can run up to 100 virtual desktop instances with GPU acceleration. NVIDIA calls this the VGX Platform.

What actually gets sent to the client is mostly H.264 video, which is well supported by most current devices, though of course you still need a remote desktop client.

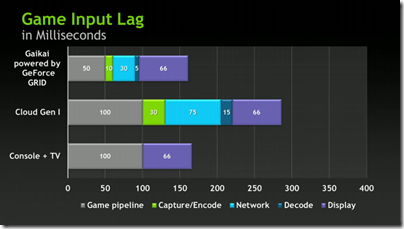

The second target is game streaming. The key problem here, provided you have enough bandwidth, is minimising the lag between a player moving or clicking Fire and the video responding. NVIDIA has developed software called the GeForce GRID which it will supply along with specially adapted Kepler GPUs to cloud companies such as Gaikai. Using the GeForce GRID, lag is reduced, according to NVIDIA, to something close to what you would get from a game console.

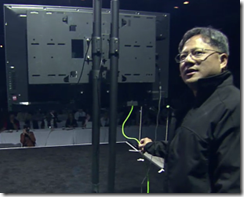

We saw a demo of a new mech shooter game in which one player was using an Asus Transformer Prime, an Android tablet, and the other an LG television with a built-in streaming client. The game is rendered in the cloud but streamed to the clients with low latency.

“This is your game console,” said NVIDIA CEO Jen-Hsun Huang, holding the Ethernet cable that connected the TV to the internet.

The concept is attractive for all sorts of reasons. Users can play games without having to download and install them, or connect instantly to a game being played by a friend. Game companies are protected from piracy, because the game code runs in the cloud, not on the device.

NVIDIA does not plan to run its own cloud services, but is working with partners, as the following slide illustrates. On the VDI side, Citrix, Microsoft, VMware and Xen were mentioned as partners.

If cloud GPU systems take off, will they cannibalise the market for powerful GPUs in client devices, whether PCs, game consoles or tablets? I put this to Huang in the press Q&A after the keynote, and he denied it, saying that people like designers hate to share their PCs. It was an odd and unsatisfactory answer. After all, if Huang is saying that your game console is now an Ethernet cable, he is also saying that there is no longer any need for game consoles which contain powerful NVIDIA GPUs. The same might apply to professional workstations, with the logic that cloud computing always presents: that shared resources have better utilisation and therefore lower cost.
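The utilisation argument can be made concrete with a little arithmetic: if a GPU’s purchase cost is spread over only the hours it is actually busy, raising utilisation lowers the cost of each useful hour proportionally. Below is a minimal Python sketch; the function name `cost_per_used_hour`, the purchase price, the three-year lifetime and the 10% versus 60% utilisation figures are all hypothetical, chosen purely for illustration, and running costs such as power and bandwidth are ignored.

```python
def cost_per_used_hour(purchase_cost: float, lifetime_hours: float, utilisation: float) -> float:
    """Spread a GPU's purchase cost over the hours it actually does useful work.

    utilisation is the fraction of its service life the GPU is busy (0 < u <= 1).
    Power, bandwidth and data-centre overheads are deliberately ignored.
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in the range (0, 1]")
    return purchase_cost / (lifetime_hours * utilisation)


# Hypothetical figures: the same GPU kept for three years (3 * 8,760 hours),
# either dedicated to one user's desk or shared between many cloud users.
LIFETIME_HOURS = 3 * 8_760
dedicated = cost_per_used_hour(2_000.0, LIFETIME_HOURS, utilisation=0.10)
shared = cost_per_used_hour(2_000.0, LIFETIME_HOURS, utilisation=0.60)

print(f"dedicated: {dedicated:.3f} per busy hour")
print(f"shared:    {shared:.3f} per busy hour")
print(f"shared is {dedicated / shared:.1f}x cheaper per busy hour")
```

With identical hardware and lifetime, the ratio depends only on utilisation (0.60 / 0.10), which is why the cloud pitch leans so heavily on sharing.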

Cloud computing is only “always present” if users have a fast, reliable Internet connection, and that is still far from the case for a majority of console owners. A significant fraction of Xbox 360/PS3 consoles (a third or so, I believe) have never been online.

OnLive has been doing for years what Nvidia is proposing here, and it’s still not even being offered outside of a few metropolitan areas, since only there do enough households have a fast enough connection. And by all accounts, the experience is still noticeably inferior to playing a game locally on a console.

It’s much easier and cheaper to increase local compute power than local bandwidth, and I don’t see that changing anytime soon. The cost argument is bogus, since we already get all the local compute power we want for a pittance. The only ones who would benefit from streamed games and applications are the publishers, who could finally turn all software into piracy-safe rentals. Do we really want that? I for one don’t.

In any case, doesn’t this reduce Nvidia’s competitive advantage vs. ATI? Nvidia currently has the premium brand, and they charge a correspondingly premium price.

But cloud operators will not hesitate to buy 1.5 million ATI chips rather than 1 million Nvidia chips if that delivers the same performance at a lower price. All they need is a way to deliver X rendered frames per second to the consumer.
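The operator’s calculation described in the last paragraph comes down to cost per delivered frame per second, not cost or speed per chip. Below is a minimal Python sketch of that comparison; the `GpuFleet` class and `cheaper` helper are hypothetical, and the prices and frame rates are invented for two unnamed vendors, so no real ATI or Nvidia figures are implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuFleet:
    """A hypothetical fleet of identical GPUs bought by a cloud operator."""
    vendor: str
    unit_price: float    # price per chip (hypothetical currency units)
    fps_per_chip: float  # rendered frames per second one chip can deliver
    chips: int           # number of chips in the fleet

    @property
    def total_cost(self) -> float:
        return self.unit_price * self.chips

    @property
    def total_fps(self) -> float:
        return self.fps_per_chip * self.chips

    def cost_per_fps(self) -> float:
        # What the operator actually pays for each frame per second of capacity.
        return self.total_cost / self.total_fps


def cheaper(a: GpuFleet, b: GpuFleet) -> GpuFleet:
    """Return whichever fleet costs less per delivered frame per second."""
    return a if a.cost_per_fps() <= b.cost_per_fps() else b


# Hypothetical example: 1 million fast chips versus 1.5 million slower, cheaper ones
# that together deliver the same total frame rate.
a = GpuFleet("vendor A", unit_price=300.0, fps_per_chip=60.0, chips=1_000_000)
b = GpuFleet("vendor B", unit_price=150.0, fps_per_chip=40.0, chips=1_500_000)
print(cheaper(a, b).vendor)
```

Both fleets deliver the same total frame rate here, so the decision falls entirely on price; brand premium does not appear anywhere in the calculation.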