Microsoft has announced new capabilities for its next-generation OneDrive for Business sync client – the software that lets users access OneDrive documents through Windows Explorer rather than having to go via a web browser.

Technically, there are two ways to access OneDrive with Windows Explorer. One uses WebDAV and only works online; the other makes a local copy of the documents and synchronises them when it can. Microsoft pushes users towards the second option. If you use WebDAV, repeated authentication prompts and the lack of offline capabilities are annoyances that many find hard to cope with.

The problem is that the old OneDrive for Business sync client, called Groove, is just not reliable. Every so often it stops syncing, and often there is no solution other than to delete all the local copies and start again.

Microsoft is therefore abandoning the problematic Groove and replacing it with a new OneDrive for Business sync client, which has been in preview since September 2015. “The preview client adds OneDrive for Business connectivity to our proven OneDrive consumer client,” explained Microsoft.

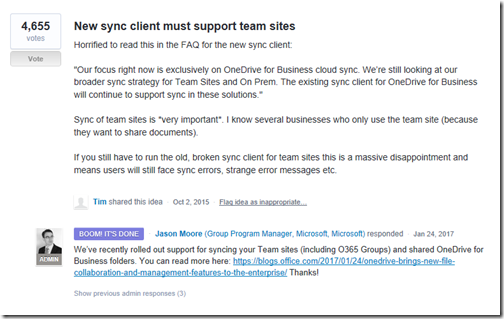

There was a snag, though. The new client did not support Team Sites, which are part of SharePoint Online, but only personal OneDrive for Business cloud storage. Many businesses make more use of Team Sites than they do of the personal storage. Users with both had to run the old and new sync clients side by side.

I was among those complaining, so it is pleasing to see that Microsoft, a mere 15 months later, has met my request by adding support for Team Sites to its new client.

(I had no idea until I looked today how much support the feedback had received.)

Today’s announcement also includes a new standalone Mac client, which can be deployed centrally, and an enhanced UI with an Activity Center.

There are also new admin features in the Office 365 dashboard, such as blocking syncing of specified file types, control over device access, and usage reporting.

There may still be some snags – and note that the new client is still a preview.

Competitors like Dropbox and Box have some technical advantages, but Microsoft’s key benefit is integration with Office 365, and the fact that OneDrive for Business comes as part of the bundle in most plans. If Microsoft can iron out the technical issues, of which sync has to date been the most annoying, it will significantly strengthen its cloud platform.