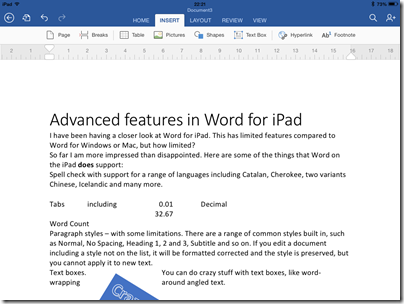

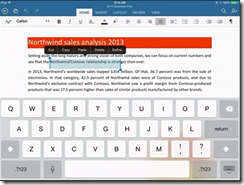

I have been having a closer look at Word for iPad. This has limited features compared to Word for Windows or Mac, but how limited?

So far I am more impressed than disappointed. Here are some of the things that Word on the iPad does support:

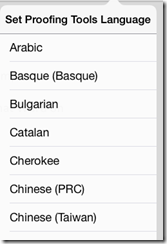

Spell check, with support for a range of languages including Catalan, Cherokee, two variants of Chinese, Icelandic and many more.

Tabs, including left, center, right and decimal.

Paragraph styles – with some limitations. There is a range of common built-in styles, such as Normal, No Spacing, Heading 1, 2 and 3, Subtitle and so on. If you edit a document that uses a style not on the list, the text is formatted correctly and the style is preserved, but you cannot apply that style to new text.

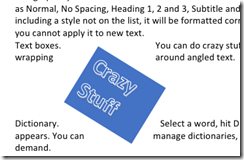

Text boxes. You can do crazy stuff with text boxes, like word-wrapping around angled text.

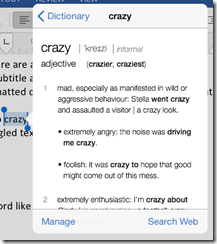

Dictionary. Select a word, hit Define, and a dictionary definition appears. You can manage dictionaries, which seem to be downloaded on demand.

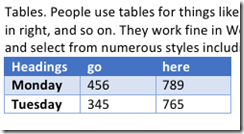

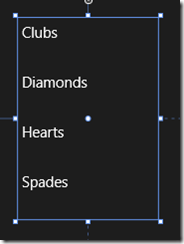

Tables. People use tables for things like formatting minutes: speaker in left column, actions in right, and so on. They work fine in Word on iPad. You can insert a table, type in the cells, and select from numerous styles including invisible gridlines.

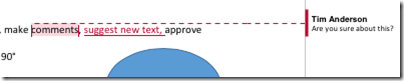

Track changes. You can review changes, make comments, suggest new text, approve changes made by others, and so on.

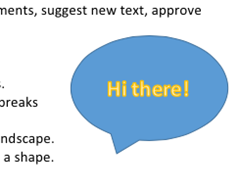

You can change the direction of text by 90°.

You can edit headers and footers.

You can insert page numbers in a variety of formats.

You can use multiple columns. You can insert page breaks and column breaks.

You can change page orientation from portrait to landscape.

Shapes are supported, and you can type text within a shape.

Text highlighting works.

Bulleted and numbered lists work as expected.

Footnoting works.

Word count is available, with options like whether to include footnotes, plus character count with or without spaces.

Pictures: you can insert images, resize, stretch and rotate them (though I have not found a crop function) and apply various effects.

Overall, it is impressive, more than just a lightweight word processor.

What’s missing?

So what features are missing, compared to the desktop version? I am sure the list is long, but many of them may be things you never use.

One notable gap is file format support. Desktop Word supports OpenDocument (.odt) and can edit the old binary .doc format as well as the newer .docx (Office Open XML). Word for iPad can only edit .docx. It can view and convert .doc, but cannot even view .odt. Nor can you do clever things like importing and editing a PDF. Here are a few more omissions:

- No thesaurus.

- No equation editor.

- No character map for inserting symbols – you have to know the keyboard shortcut.

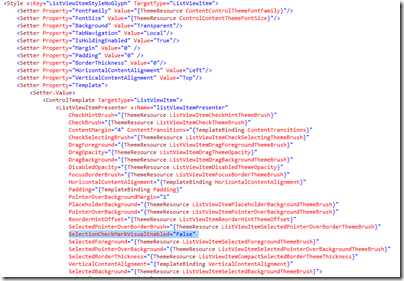

- Paragraph formatting is far richer in desktop Word, where you can also create and modify paragraph styles. One thing I find annoying in Word for iPad is the inability to set space above or below a paragraph (let me know if I have missed a feature).

- Academic features like endnotes, cross-references, indexes, tables of contents, tables of figures and citations.

- Watermarks

- Image editing – but you can do this in a separate app on the iPad

- Captions

- Macros and Visual Basic for Applications

- SmartArt

- WordArt

- Templates

- Special characters (you need to know where to find them on the keyboard)

- Printing – I guess this is more of an iPad problem

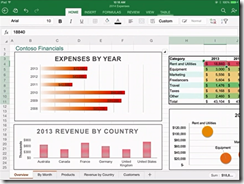

Office for iPad versus Office for Surface RT

If you have Microsoft’s Surface tablet, would you rather have the equivalent of Office for iPad, touch-friendly but cut-down, or the existing Office for Surface RT? I took a sample of opinion on Twitter and most said they would rather have Office for iPad. This is Office reworked for tablet use, touch friendly in a way that desktop Office will never be.

Then again, Office on Surface RT (VBA aside) is more or less full desktop Office and can meet needs where Office for iPad falls short.

If Microsoft is still serious about the “Metro” environment, it will need to do something similar as a Windows Store app. Matching the elegance and functionality of the iPad version will be a challenge.

I typed this on the iPad, of course, using a Logitech Bluetooth keyboard. I would not have wanted to do it with the on-screen keyboard alone. However, for the final post I moved it to Windows (via SkyDrive) in order to use Live Writer. Word on the Surface has a Blog template I could have used; that is another missing feature, I guess.

Microsoft has exceeded expectations. This would sell well in the App Store, but you need an Office 365 subscription, which makes it either a significant annual cost or a nice free bonus for those using Office 365 anyway, depending on how you look at it. The real target seems to be business users, for whom Office 365 plus an Apple iPad (which they were using anyway) is now an attractive proposition.