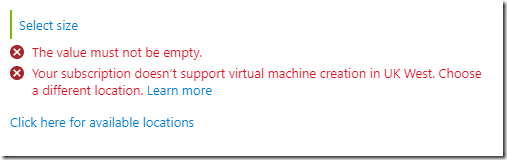

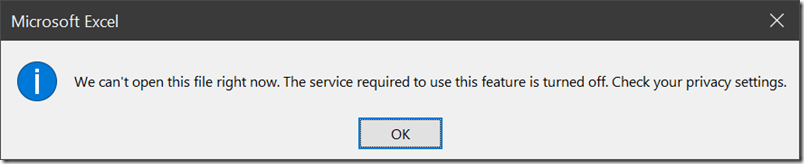

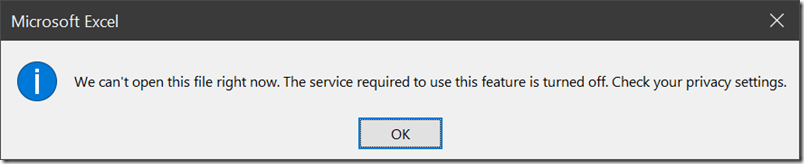

I attempted to open a document from on-premises SharePoint recently and was greeted with an error asking me to check my privacy settings.

“The service required to use this feature is turned off”, I was informed. Hmm, what service is that then? The solution turned out to be in the new Office privacy settings, just as the dialog suggested.

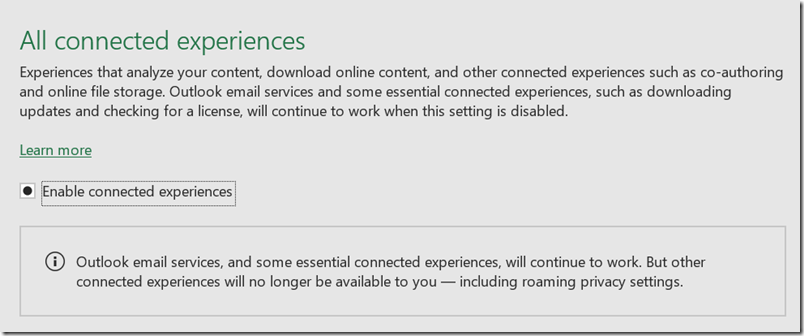

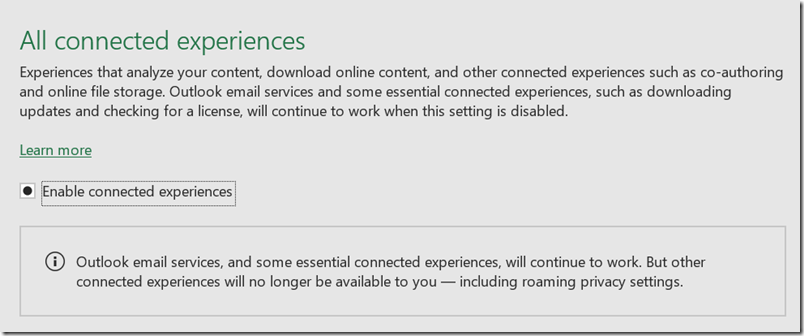

If you disable what Microsoft calls “Connected experiences”, it appears to block access to SharePoint. Probably not what the user intended.

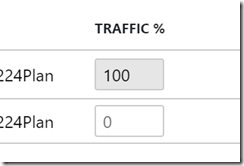

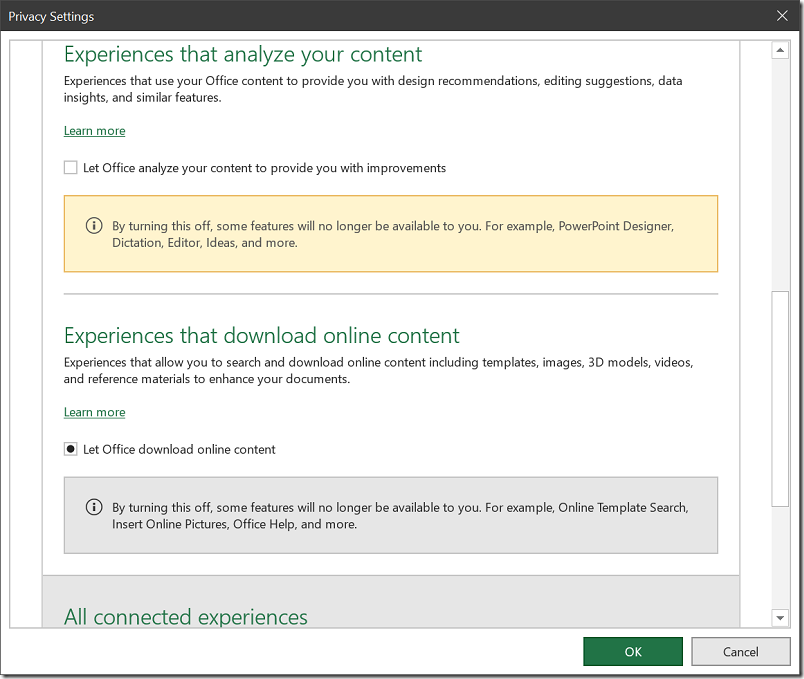

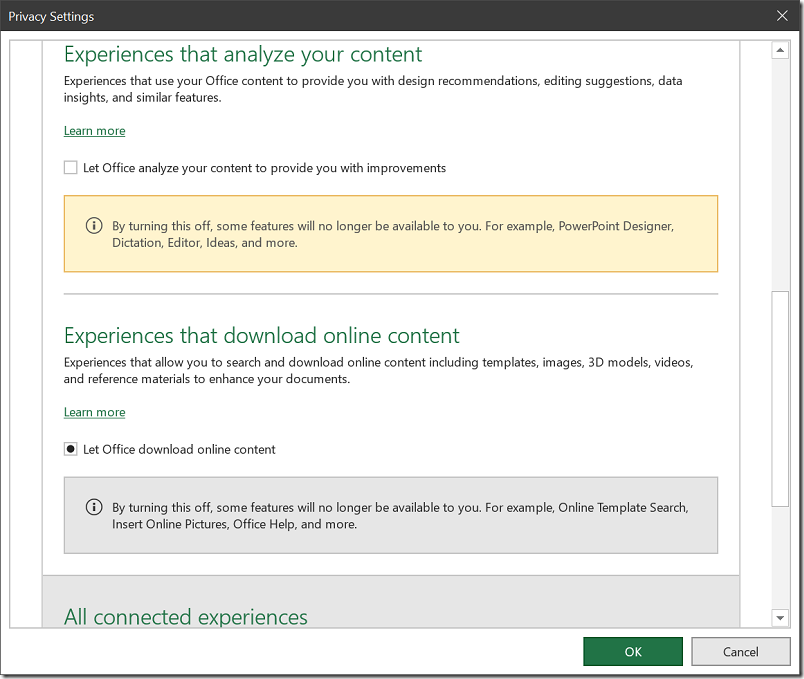

This setting is not great for clarity. Privacy-conscious users like myself may disable it because it represents agreement to “experiences that analyze your content”. Since this means uploading your content to the cloud for analysis, it sounds as if it might weaken both privacy and security. If you look at all the options though, it may be possible to agree to access online file storage without agreeing to content analysis:

It looks as if by unchecking “Let Office analyze your content” you might be able to stop Office uploading your stuff.

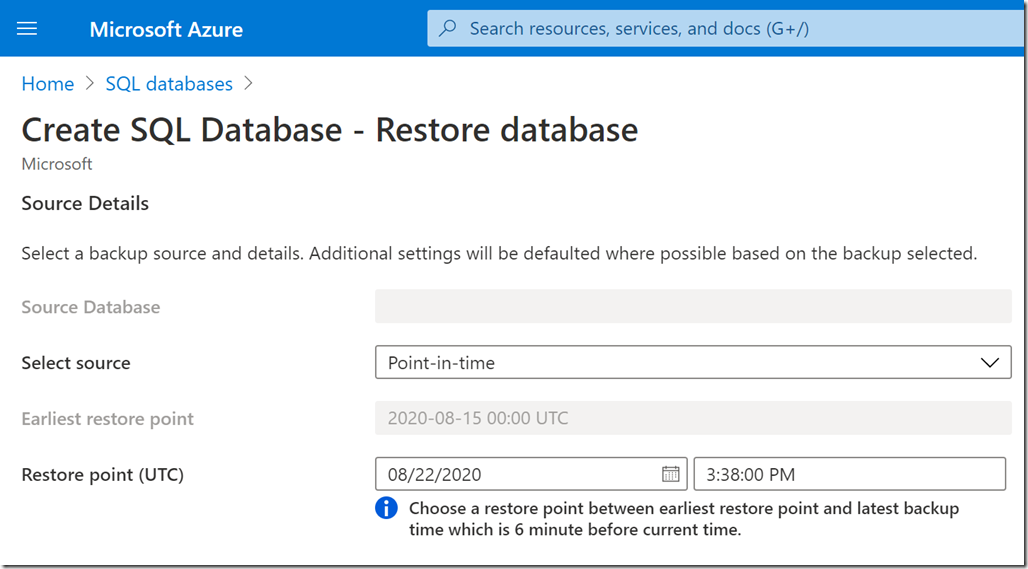

Is there anything to worry about? We need to know more about what happens to our data. There is a Learn More link that takes us here. This lists lots of features but does not tell us what we want to know. Maybe here? This tells us that:

Three types of information make up required service data.

- Customer content, which is content you create using Office, such as text typed in a Word document, and is used in conjunction with the connected experience.

It is still not clear though what happens to our data, other than that it is “sent to Microsoft”. Even the massive Microsoft Privacy Statement is no more illuminating on this point. In fact, it is arguably rather alarming since it contains this statement:

Microsoft uses the data we collect to provide you with rich, interactive experiences. In particular, we use data to:

- Provide our products, which includes updating, securing, and troubleshooting, as well as providing support. It also includes sharing data, when it is required to provide the service or carry out the transactions you request.

- Improve and develop our products.

- Personalize our products and make recommendations.

- Advertise and market to you, which includes sending promotional communications, targeting advertising, and presenting you with relevant offers.

We also use the data to operate our business, which includes analyzing our performance, meeting our legal obligations, developing our workforce, and doing research.

In carrying out these purposes, we combine data we collect from different contexts (for example, from your use of two Microsoft products) or obtain from third parties to give you a more seamless, consistent, and personalized experience, to make informed business decisions, and for other legitimate purposes.

This suggests that Microsoft will profile me and send me advertising based on the data it collects. What I need to know is not only the fact that this happens, but also the mechanism, in order to make an informed judgement about whether it is sensible to enable these options. Of course it is also possible that the Office content analysis service does not do this. I am guessing.

What can go wrong? These risks are hard to quantify. If you are typing something confidential, it makes sense not to share it more than is necessary, as further sharing can only increase the risk. There are some interesting scenarios too, such as what happens if Microsoft receives a legal demand to have sight of the content of your documents. Microsoft may not want to give access to your content, but in some circumstances it might not have the choice. Then again, I doubt it retains content sent for the purpose of personalisation, beyond whatever factors the service determines are significant. However, this is not stated here.

Is this any different from storing documents on a cloud service such as SharePoint / OneDrive online? It is a bit different since in the Office case you are permitting Microsoft to analyze as well as to store your content.

All of this is up for debate. I accept that the risks are probably small, and that the wider world has little or no interest in most of the content I type but do not choose to publish.

Nevertheless, there are a few things which seem to me reasonable requests.

– A clear statement concerning what happens to my content if I choose to let it be analyzed by Microsoft’s cloud service, to enable better informed decisions about whether or not to enable this feature. Dumping the user into an all-encompassing privacy policy is not good enough.

– Improved settings (and possibly some fixed bugs) so that privacy-conscious users do not inadvertently disable access to on-premises SharePoint, as in my example, or trigger other unexpected outcomes.

– A simple way to exclude a specific document from the service, conceptually similar to “in-private” mode in a web browser though with more chance of actually protecting your privacy (in-private mode is not really very private).

In general, I do not think the solution to a customer’s reasonable concerns about privacy and security of personal information is to obscure how this data is handled.