I’ve been putting together a quick web application (well, I thought it would be quick) in my spare time (hah!) and I picked ASP.NET Core on Linux as a sensible option given that I like working in C#. Overall it has been a reasonable experience so far and I still love the language. This is the most extensive work I have done so far with ASP.NET Core though and I have a few observations.

It is not a difficult framework to work with but I believe it could be made more approachable. This is largely a matter of documentation though another point of confusion is the transition Microsoft has been making from ASP.NET MVC to Razor Pages. These two frameworks are similar but different, they share a lot of technology but some things work in one but not the other, and sometimes it is not clear whether what you are reading applies just to ASP.NET MVC, or just to Razor Pages, or to both, or to both but with a little tweaking to account for differences. I started with MVC because I am more familiar with it but have shifted to Razor Pages because that seems to be the preferred direction; really I am equally happy with either.

If you are thinking of getting started with ASP.NET Core I recommend you start not with the framework, but with making sure that you are familiar with the following topics:

Dependency injection. If you are puzzling about something in the framework the answer may be “add it to the constructor and it magically works.” This is obvious if you are familiar with it but not otherwise.

Anonymous types. These seem to crop up quite a lot.

Lambdas and the arrow operator =>

LINQ queries

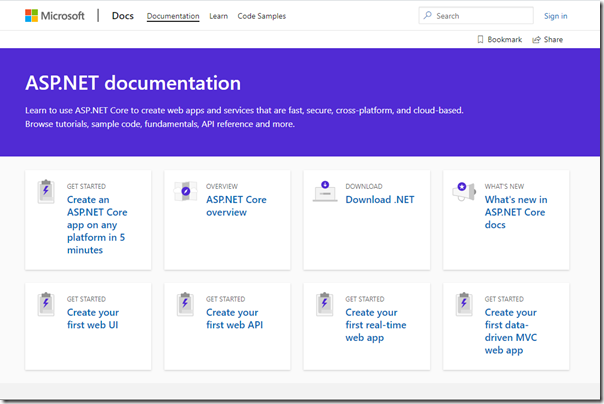

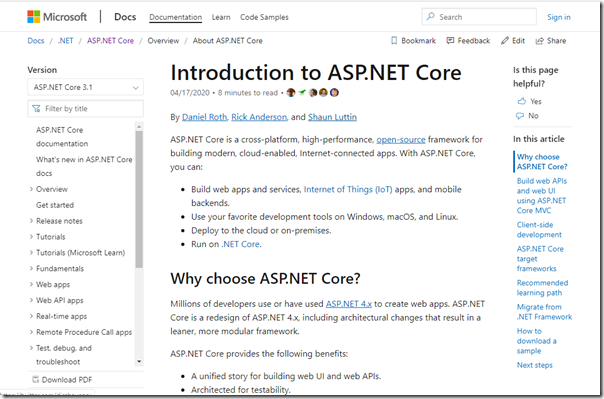

Now, the documentation. Unless you have perhaps found a good and up to date book you will probably start here.

Now, I do think there are lots of good things about docs.microsoft.com, the fact that it is all on GitHub and open for comment and improvement, the fact that it performs well, and the obvious effort that has gone into many of the topics.

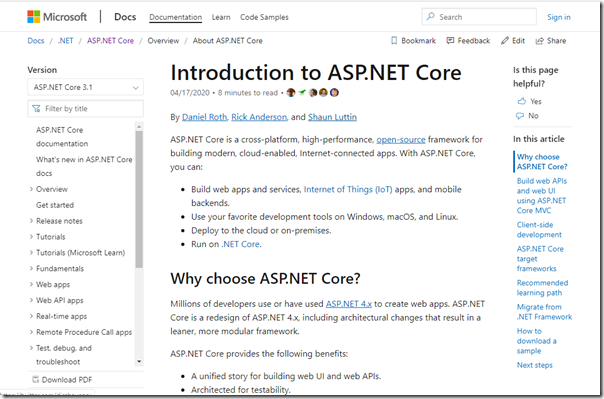

That said, I do not much like this page. My biggest problem with it is that there is no simple link to a comprehensive reference. It is a bunch of little tutorials which may or may not tell you what you need to know. It gets better if you click into one of the topics and I like this page, for example, much better, with the hierarchical list of topics on the left.

It is still not great though. There is a big emphasis on tutorials, and while I agree that learning through doing is a great way to learn, the problem with the tutorials is that they tend to leave you with lots of questions and no obvious route to answers.

I will give you an example. I decided to use the ASP.NET Identity system in my application, because it saves a ton of tedious work doing registration, password reset, login, and so on, plus it is security-critical code that I would likely get wrong if I did it myself.

The problem you will immediately hit though is that you want to store additional data about users. This could be any kind of data but let’s call it additional profile data. For example, you want to let users upload an image which is then displayed in the application. There are some heavy articles about customizing identity but there is also this one on adding custom user data to an ASP.NET Core web app. It’s great but it does not actually tell you how to retrieve the custom user data in your application. Eventually I figured out a way of doing it. You just have to use dependency injection to get an instance of the UserManager class. So you pop this in the constructor for one of your classes:

UserManager<YourCustomUser> UserManager

and store it in a private variable. Then you can do:

var MyTask = _userManager.GetUserAsync(User);

MyTask.Wait();

var MyUser = MyTask.Result;

or something similar (if it is a synchronous method) and it just works.

Let me add something else. The actual API reference for ASP.NET Core is almost useless. It faithfully documents each class and method while often saying nothing about how or why to use it.

Data access

My application is really forms over data as so many are, so data access plays a big role. There seem to be plenty of tutorials on data access in the ASP.NET Core documentation but I don’t much like them. The problem is Entity Framework. Most of the documentation assumes it. It is not that Entity Framework is bad; it does seem to work well and while there is debate about how well it performs, in many cases it does not matter, and in other cases you can fine-tune it. My problem rather is that what Microsoft calls a “complex data model” is actually the normal case, where you have many-to-many relationships, and dealing with this in Entity Framework soon gets fiddly. I am guilty of lacking patience, but being familiar with SQL it is easier for me just to write the SQL and to know exactly what data is being saved and what data is being retrieved. I have left Entity Framework in place because the Identity system uses it (and it looks non-trivial to replace) but for the rest I have migrated to Dapper which seems ideal. It is not a full-featured ORM and it expects you to write the SQL but does a lot that saves time. My only complaint about Dapper is that (again) the documentation isn’t great but I’ve found it much simpler to grok than the more advanced aspects of Entity Framework.

One thing I do like about Entity Framework is data migrations. Like most developers I have a local database and another one online and code-first data migrations save a lot of work creating database tables and keeping the schema in sync. Dapper does not have this.

StackOverflow

Of course it is true that no matter what is your question, someone has asked it before, and often the best place to find the answer is on StackOverflow. Big appreciation for the folk who take the time to answer questions there, though I’d add that it is not a place from which to copy code, it is a place to understand a solution. Out of date information is a problem, as it is in Microsoft’s own documentation.

Finally

I think ASP.NET Core is a great framework (or frameworks) but not as approachable as it could be. Documenting it in the best way is not an easy problem to solve, and every developer comes with different skills and requirements. Perhaps Microsoft could get someone suitable to write a nice book aimed at intermediate coders, and one that does not assume you want to use Entity Framework. Then offer it as a free download and/or publish it online as part of the documentation, and keep it up to date as new versions appear.