I have recently moved into a new area and noticed that my (now) local city council was running a Google Digital Garage:

Winchester City Council is very excited to be partnering up with The Digital Garage from Google – a digital skills training platform to assist you in growing your business, career and confidence, online. Furthermore, a Google digital expert is coming to teach you what is needed to gain a competitive advantage in the ever changing digital landscape, so come prepared to learn and ask questions, too.

I went along as a networking opportunity and to learn more about Google’s strategy. The speaker was from Google partner Uplift Digital, “founded by Gori Yahaya, a digital and experiential marketer who had spent years working on behalf of Google, training and empowering thousands of SMEs, entrepreneurs, and young people up and down the country to use digital to grow their businesses and further their careers.”

I am not sure “digital garage” was the right name in this instance, as it was essentially a couple of presentations with not much interaction and no hands-on work. The first session had three themes:

- Understanding search

- Manage your presence on Google

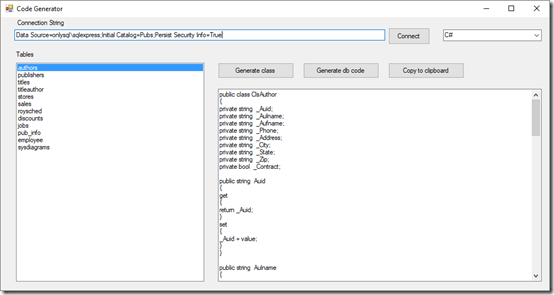

- Get started with paid advertising

What we got was pretty much the official Google line on search: make sure your site performs well on mobile as well as desktop, use keywords sensibly, and leave the rest to Google’s algorithms. The second topic was mainly about Google’s local business directory, called My Business. Part three introduced paid advertising, mainly covering Google AdWords. There was no mention of click fraud. Be wary of Facebook advertising, we were told, since it is rumoured that advertising there may actually decrease your organic reach. Don’t bother advertising on Twitter, said the speaker.

Session two was about other ways to maintain a digital presence, mainly looking at social media, along with a (rather unsatisfactory) introduction to Google Analytics. The idea is to become an online authority in what you do, we were told. Good advice. YouTube is the second most popular search engine, the speaker said, and we should consider posting videos there. The speaker recommended the iOS app YouTube Director for Business, a free tool which I later discovered is discontinued from 1st December 2017; it is being replaced by Director Onsite, which requires you to spend $150 on YouTube advertising in order to post a video.

Overall I thought the speaker did a good job on behalf of Google and there was plenty of common sense in what was presented. It was a Google-centric view of the world, which is not surprising considering that the event is, as far as I can tell, entirely funded by Google.

As you would also expect, the presentation was weak concerning Facebook, Twitter and other social media platforms. Facebook in particular seems to be critically important for many small businesses. One lady in the audience said she did not bother with a web site at all since her Facebook presence was already providing as many orders for her cake-making business as she could cope with.

We got a sanitised view of the online world, which in reality is a pretty mucky place in many respects.

IT vendors have always been smart about presenting their marketing as training and it is an effective strategy.

The aspect that I find troubling is that this comes hosted and promoted by a publicly funded city council. Of course an independent presentation, or a session with involvement from multiple companies with different perspectives, would be far preferable; but I imagine the offer of free training and ticking the box for “doing something about digital” is too sweet to resist for hard-pressed councils, which then turn a blind eye to Google’s ability to make big profits in the UK while paying little tax.

Google may have learned from Microsoft and its partners, who once had great success in providing basic computer training which in reality was all about how to use Microsoft Office, cementing Microsoft’s near-monopoly.