At the NVIDIA GPU Technology Conference in San Jose, CEO Jen-Hsun Huang talked up the company’s progress in GPU computing, showed some example applications, and announced a high-level roadmap for future graphics chip architectures. NVIDIA has three areas of focus, he said: the Quadro line for visualisation, Tesla for parallel computing, and GeForce/Tegra for personal computing. Tegra is a system on a chip aimed at mobile devices. Mobile, said Huang, is “a completely disruptive force to all of computing.”

NVIDIA’s current chip architecture is called Fermi. The company is settling on a two-year product cycle and will deliver Kepler in 2011, with 3 to 4 times the performance of Fermi, where performance is expressed as gigaflops per watt. Maxwell, in 2013, will have around 12 times the performance of Fermi on the same measure. In between these architecture changes, NVIDIA will release “kicker” updates to refresh its products, with one for Fermi due soon.

The focus of the conference, though, is not on super-fast graphics cards in themselves, but on using the GPU for general-purpose computing. GPUs are very good at performing large numbers of arithmetic operations in parallel. If you have an application that does intensive calculations, then executing that part of the code on the GPU can offer impressive performance increases. NVIDIA’s CUDA, which extends C and comes with its own toolkit and runtime library, lets you do exactly that. Another option is OpenCL, a standard that works across GPUs from multiple vendors.
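To make "in parallel" a little more concrete, here is a minimal CPU-only C++ sketch of the kind of calculation that suits a GPU. It is not CUDA code and does not use NVIDIA's API; the names `saxpy_element` and `saxpy_parallel` are my own, and ordinary CPU threads stand in for GPU threads. The point is only the shape of the work: every element can be computed independently of every other.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Per-element work, written the way a GPU kernel body would be:
// index i depends on no other index, so all elements can run at once.
inline void saxpy_element(std::size_t i, float a,
                          const std::vector<float>& x,
                          std::vector<float>& y) {
    y[i] = a * x[i] + y[i];
}

// Hypothetical CPU driver: splits [0, n) into contiguous chunks, one per
// worker thread. A GPU would instead run roughly one lightweight thread
// per element.
void saxpy_parallel(float a, const std::vector<float>& x,
                    std::vector<float>& y, unsigned workers = 4) {
    assert(x.size() == y.size());
    assert(workers > 0);
    const std::size_t n = x.size();
    const std::size_t chunk = (n + workers - 1) / workers;  // ceiling division
    std::vector<std::thread> pool;
    for (unsigned w = 0; w < workers; ++w) {
        const std::size_t begin = std::min(n, w * chunk);
        const std::size_t end = std::min(n, begin + chunk);
        if (begin == end) continue;  // nothing left for this worker
        pool.emplace_back([=, &x, &y] {
            for (std::size_t i = begin; i < end; ++i)
                saxpy_element(i, a, x, y);
        });
    }
    for (auto& t : pool) t.join();  // wait until every chunk is done
}
```

Calling `saxpy_parallel(2.0f, x, y)` updates `y` in place with `2 * x[i] + y[i]` for each element. Because no worker touches another worker's elements, no locking is needed, and that independence is what lets this style of code scale to the thousands of threads a GPU runs.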

Adobe uses CUDA for the Mercury Playback Engine in Creative Suite 5, greatly improving performance in After Effects, Premiere Pro and Photoshop, but with the drawback that you need a compatible NVIDIA graphics card.

The performance gain from GPU programming is so great that applications in relevant areas, such as simulation or statistical analysis, can hardly avoid it. Huang gave a compelling example during the keynote, bringing heart surgeon Dr Michael Black on stage to talk about his work. Operating on a beating heart is difficult because it presents a moving target. By combining robotic surgery with software that predicts the heart’s movement through simulation, Black is researching how to operate on a heart almost as if it were stopped, and with just a small incision.

Programming the GPU is compelling, but difficult. NVIDIA is keen to see it become part of mainstream programming, for obvious reasons, and there are new libraries and tools that help with this, such as Parallel Nsight for Visual Studio 2010. Another interesting development, announced today, is CUDA for x86, being developed by PGI, which will let your CUDA code run even when no NVIDIA GPU is present. Even if the performance gains are limited, it will mean that developers who need to support diverse systems can run the same code everywhere, rather than maintaining a separate code path for when no CUDA GPU is detected.

That said, GPU programming still has all the challenges of concurrent development: code that runs on many threads at once is prone to race conditions and synchronization problems.
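As a rough illustration of the hazard, here is a small CPU-only C++ sketch, again with ordinary threads standing in for GPU threads and with function names of my own invention. If several threads ran `++total` on a plain `long`, two of them could read the same old value and one increment would be lost. The sketch shows two common ways round that: an atomic counter (the role `atomicAdd` plays in CUDA C) and per-thread partial results that are combined only after every thread has finished.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Fix 1: make each increment indivisible with std::atomic, so no update
// can be lost even when many workers hit the same counter.
long count_with_atomic(unsigned threads, long per_thread) {
    assert(threads > 0 && per_thread >= 0);
    std::atomic<long> total{0};
    std::vector<std::thread> pool;
    for (unsigned t = 0; t < threads; ++t) {
        pool.emplace_back([&total, per_thread] {
            for (long i = 0; i < per_thread; ++i)
                total.fetch_add(1, std::memory_order_relaxed);
        });
    }
    for (auto& th : pool) th.join();
    return total.load();
}

// Fix 2: avoid sharing altogether. Each worker writes only its own slot,
// and the slots are summed once all workers have been joined.
long count_with_partials(unsigned threads, long per_thread) {
    assert(threads > 0 && per_thread >= 0);
    std::vector<long> partial(threads, 0);
    std::vector<std::thread> pool;
    for (unsigned t = 0; t < threads; ++t) {
        pool.emplace_back([&partial, t, per_thread] {
            long local = 0;
            for (long i = 0; i < per_thread; ++i) ++local;
            partial[t] = local;  // no other worker touches slot t
        });
    }
    for (auto& th : pool) th.join();  // join is the synchronization point
    long total = 0;
    for (long p : partial) total += p;
    return total;
}
```

Both functions return `threads * per_thread` every time. The second approach usually scales better, because workers never contend for the same memory location, at the cost of an extra combining step at the end.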

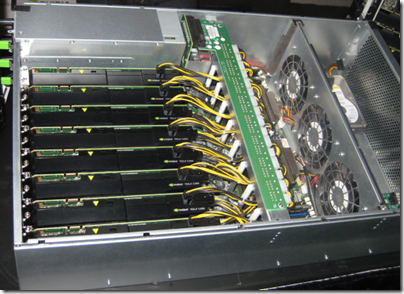

Stuffing a server full of GPUs is a cost-effective route to supercomputing. I took a brief look at the exhibition, which includes this Colfax CXT8000 with 8 Tesla GPUs; it also has three 1200W power supplies. It may cost $25,000, but if you look at the performance you are getting for the price, machines like this are great value.