Several years ago I created a web application using ASP.NET and Azure AD authentication. The requirement was that only members of certain security groups could access the application, and the level of access varied according to group membership. Pretty standard, one would think.

The application ran well for years but then stopped working, because it used ADAL (Azure Active Directory Authentication Library) and the Azure AD Graph API at the old graph.windows.net URL, both of which are deprecated.

No problem, I thought, I’ll quickly run up a new application using the latest libraries and port the code across. Easier than trying to re-plumb the existing app because this identity stuff is so fiddly.

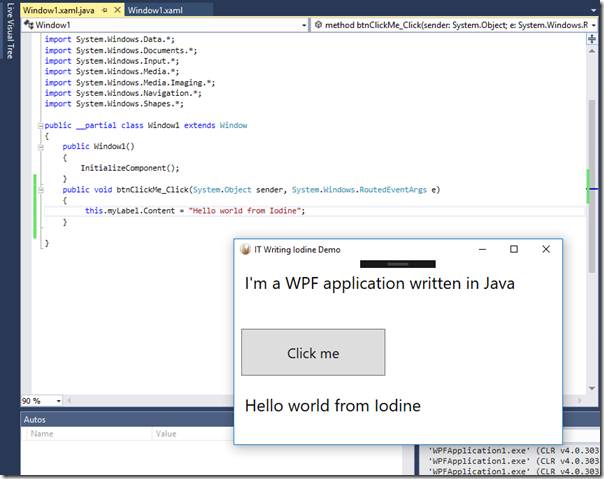

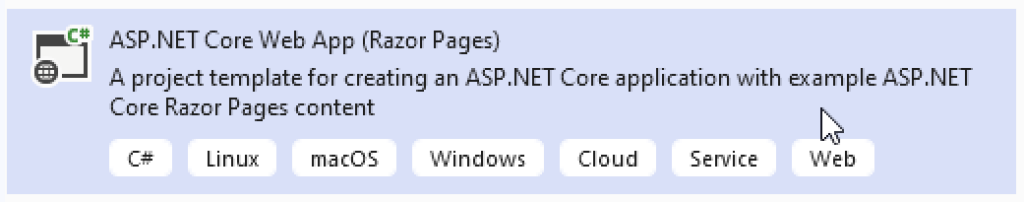

I am using the latest Visual Studio 2022, version 17.13.6. I create a new application and choose ASP.NET Core Web App (Razor Pages), which is perhaps the primary .NET app framework.

Next the wizard tells me I need to install the dotnet msidentity tool – a dedicated tool for configuring ASP.NET projects to use the Microsoft identity platform. OK.

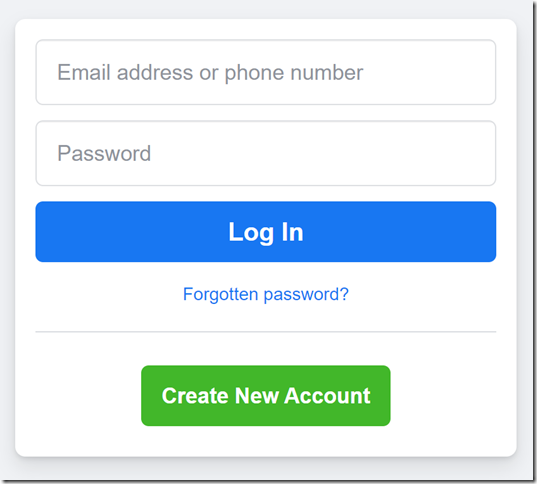

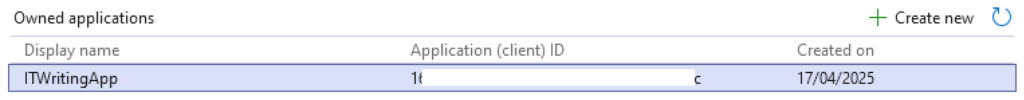

I have to sign in to my Azure tenancy (expected) and register the app. Here I can see existing registrations or create a new one. I create a new one:

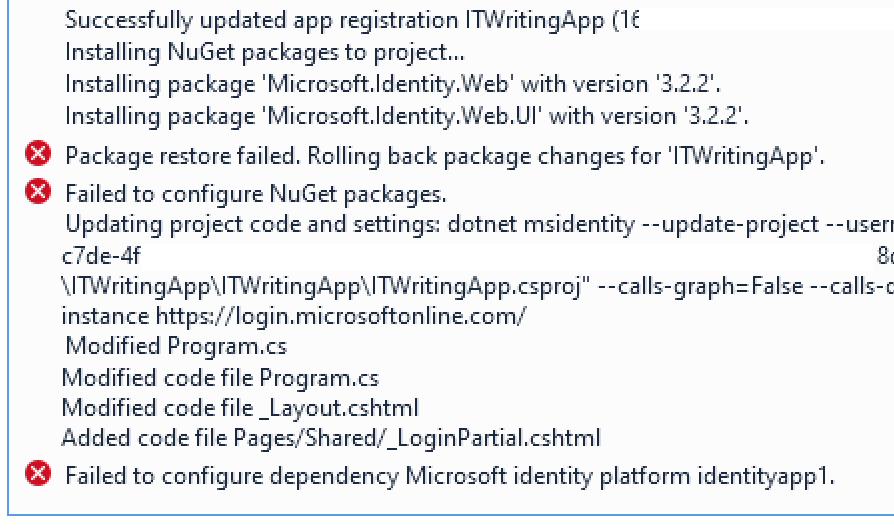

I continue in the wizard but it errors:

This does not appear to be an easy fix. I click Back and ask the wizard just to update the project code; I will add packages and do other configuration manually. As it turned out, though, the failed step had actually added the packages and the app already works. However, Visual Studio warns me that the installed version of Microsoft.Identity.Web has a critical security vulnerability, so I edit the NuGet packages and update to version 3.8.3.

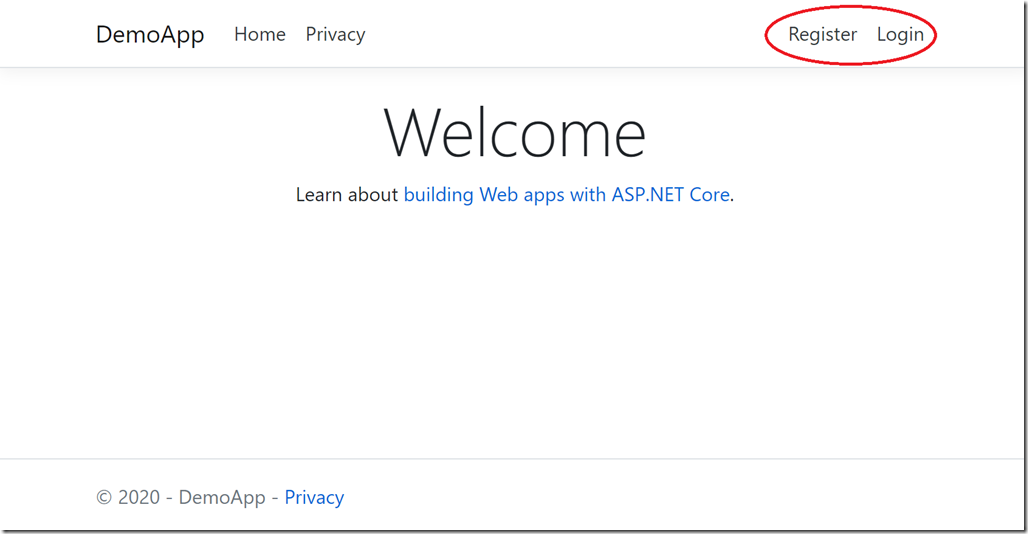

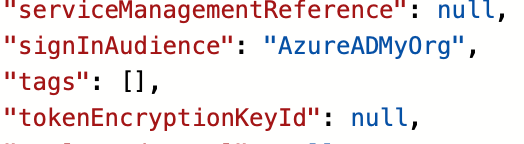

The app works and I can sign in but it is necessary to take a close look at the app registration. By default my app allows anyone with any Entra ID or personal Microsoft account to sign in. I feel this is unlikely to be what many devs intend and that the default should be more restricted. What you have to do (if this is not what you want) is to head to the Azure portal, Entra ID, App registrations, find your app, and edit the manifest. I edited the signInAudience from AzureADandPersonalMicrosoftAccount to be AzureADMyOrg:

noting that Microsoft has not been able to eliminate AzureAD from its code despite the probably misguided rename to Entra ID.

However my application has no concept of restriction by security group. I’ve added a group called AccessITWritingApp and made myself a member, but getting the app to read this turns out to be awkward. There are a couple of things to be done.

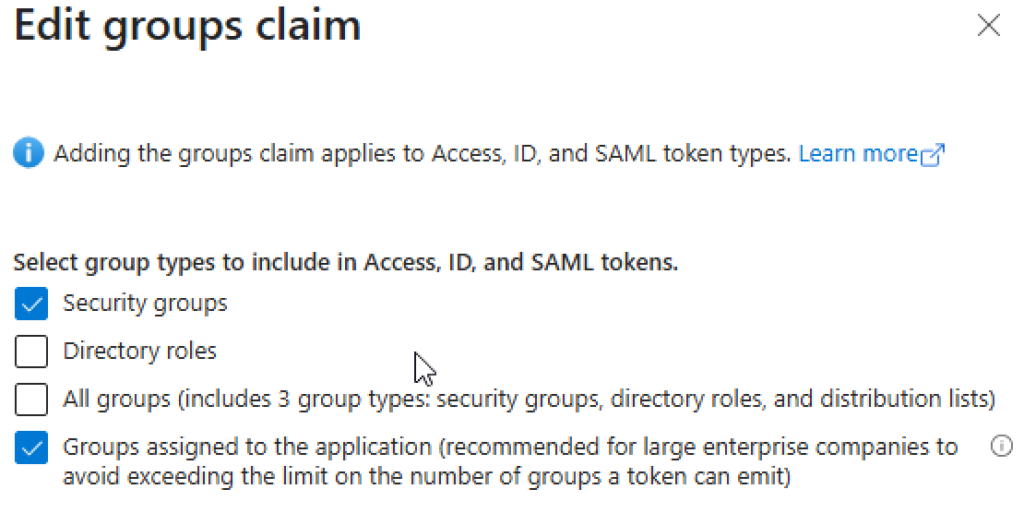

First, while we are in the App Registration, find the bit that says Token Configuration and click Edit Groups Claim. This will instruct Azure to send group membership with the access token so our app can read it. Here we have a difficult decision.

If we choose all security groups, the token will include every group the user belongs to, including groups they are in only through nesting (a group within a group), but only up to a limit: 200 groups in a JWT, 150 in a SAML token, and just 6 when the token is issued through the implicit flow. If we choose Groups assigned to the application, we can limit this to just AccessITWritingApp, but this will only work for direct members.

By the way, you will have to assign the group to the app in Enterprise applications in the Azure portal, but the app might not appear there. You can get to it, though, via the Overview in the App registration, by clicking the link for Manage application in local directory. Why two sections for app registrations? Why is the app both in and not in Enterprise applications? I am sure it makes sense to someone.

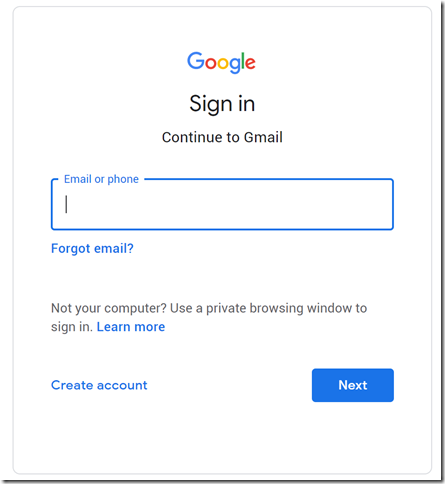

In the enterprise application you can click Assign users and groups and add the AccessITWritingApp group, though only if you have a premium “Active Directory plan level”, meaning a premium Entra ID directory plan level. There is some confusion about this.

Another option is App Roles. You can assign App Roles to a user of the application with a standard (P1) Entra ID subscription. Information on using App Roles rather than groups, or alongside them, is here. Though note:

“Currently, if you add a service principal to a group, and then assign an app role to that group, Microsoft Entra ID doesn’t add the roles claim to tokens it issues.”

Note that assigning a group or a user here will not by default either allow or prevent access for other users. It does link the user or group with the application and makes it visible to them. If you want to restrict access for a user you can do it by checking the Assignment required option in the enterprise application properties. That is not super clear either. Read up on the details here and note once again that nested group memberships are not supported “at this time” which is a bit rubbish.

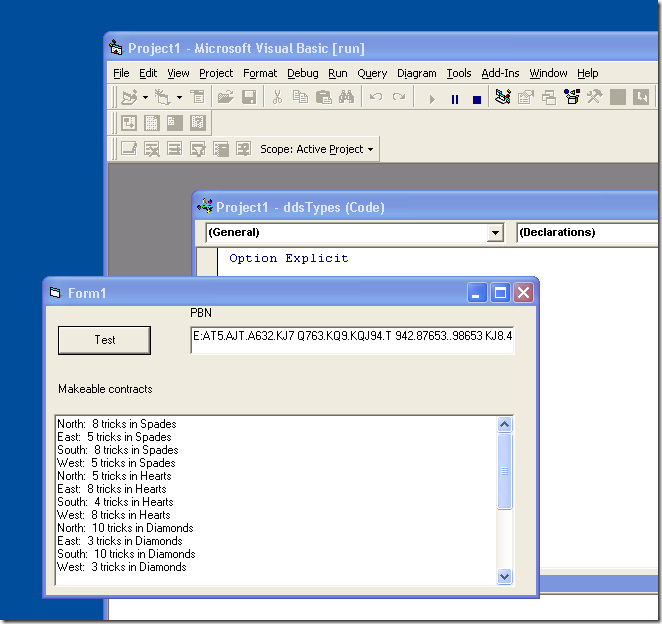

OK, so we are going down the groups route. What I want to do is to use ASP.NET Core role-based authorization. I create a new Razor page called SecurePage and at the top of the code-behind class I stick this attribute:

    [Authorize(Roles = "AccessITWritingApp,[yourGroupID]")]
    public class SecurePageModel : PageModel

Notice I am using the GroupID alongside the group name as that seems to be what arrives in the token.

Now I run the app, I can sign in, but when I try to access SecurePage I get Access Denied.

We have to make some changes for this to work. First, add a Groups section to appsettings.json like this:

    "Groups": {
      "AccessItWritingApp": "[yourGroupIDhere]"
    },

Next, in Program.cs, find the bit that says:

    // Add services to the container.
    builder.Services.AddAuthentication(OpenIdConnectDefaults.AuthenticationScheme)
        .AddMicrosoftIdentityWebApp(builder.Configuration.GetSection("AzureAd"));

and change it to:

// Add services to the container.

builder.Services.AddAuthentication(OpenIdConnectDefaults.AuthenticationScheme)

.AddMicrosoftIdentityWebApp(options =>

{

// Ensure default token validation is carried out

builder.Configuration.Bind("AzureAd", options);

// The following lines code instruct the asp.net core middleware to use the data in the "roles" claim in the [Authorize] attribute, policy.RequireRole() and User.IsInRole()

// See https://docs.microsoft.com/aspnet/core/security/authorization/roles for more info.

options.TokenValidationParameters.RoleClaimType = "groups";

options.Events.OnTokenValidated = async context =>

{

if (context != null)

{

List requiredGroupsIds = builder.Configuration.GetSection("Groups")

.AsEnumerable().Select(x => x.Value).Where(x => x != null).ToList();

// Calls method to process groups overage claim (before policy checks kick-in)

//await GraphHelper.ProcessAnyGroupsOverage(context, requiredGroupsIds, cacheSettings);

}

await Task.CompletedTask;

};

}

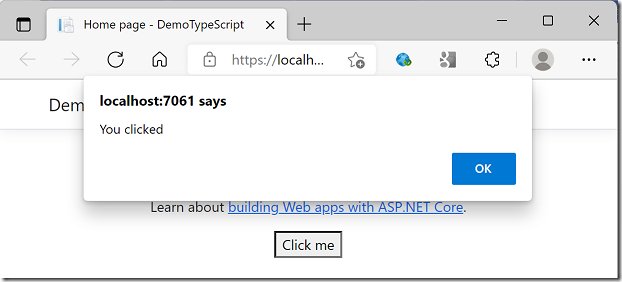

);Run the app, and now I can access SecurePage:
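To see why the RoleClaimType line makes the difference, here is a minimal console sketch that uses only System.Security.Claims, with no ASP.NET Core or Entra ID involved. It builds the claims by hand rather than from a real token, and the group ID and the "demo" authentication type are made-up placeholders. The assumption is that the middleware ends up constructing the user's identity with "groups" as its role claim type, which is what the setting above is meant to arrange.

```csharp
using System;
using System.Security.Claims;

// Hypothetical group object ID, standing in for [yourGroupID].
const string groupId = "00000000-0000-0000-0000-000000000001";

// Hand-built claims: the group arrives as an object ID in a "groups" claim,
// not as the group's display name.
var claims = new[]
{
    new Claim("name", "Test User"),
    new Claim("groups", groupId),
};

// An identity created without an explicit role claim type uses
// ClaimsIdentity.DefaultRoleClaimType, so "groups" claims are ignored by IsInRole.
var defaultUser = new ClaimsPrincipal(new ClaimsIdentity(claims, "demo"));

// An identity whose role claim type is "groups" treats each "groups" claim as a role.
var groupsUser = new ClaimsPrincipal(new ClaimsIdentity(claims, "demo", "name", "groups"));

Console.WriteLine(defaultUser.IsInRole(groupId));             // False
Console.WriteLine(groupsUser.IsInRole(groupId));              // True
Console.WriteLine(groupsUser.IsInRole("AccessITWritingApp")); // False
```

The last line suggests why the group ID has to appear in the Roles list: when only the ID is present in the claims, a check against the group name does not match.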

There are a few things to add though. Note I have commented out a call to GraphHelper; you might want to uncomment this but there are further steps if you do. GraphHelper is custom code in this sample https://github.com/Azure-Samples/active-directory-aspnetcore-webapp-openidconnect-v2/ and specifically the one in 5-WebApp-AuthZ/g-2-Groups. I do not think I could have got this working without this sample.

The sample does something clever though. If the token does not supply all the groups of which the user is a member, it calls a method called ProcessAnyGroupsOverage which eventually calls graphClient.Me.GetMemberGroups to get all the groups of which the user is a member. As far as I can tell this does retrieve membership via nested groups though note there is a limit of 11,000 results.
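As a rough illustration of the first step, detecting that the token left the groups out, here is a small self-contained sketch. It is not the sample's code. It assumes that overage shows up as a `_claim_names` claim whose value mentions "groups" (or a `hasgroups` claim for implicit-flow tokens), and the helper name HasGroupsOverage and the hand-built claims are hypothetical.

```csharp
using System;
using System.Linq;
using System.Security.Claims;

// Hypothetical helper: returns true when the claims suggest the groups list was omitted.
// Assumption: overage is signalled by "_claim_names" mentioning "groups", or by "hasgroups".
static bool HasGroupsOverage(ClaimsPrincipal user) =>
    user.Claims.Any(c =>
        c.Type == "hasgroups" ||
        (c.Type == "_claim_names" && c.Value.Contains("\"groups\"")));

// A user whose token carried the group IDs directly (placeholder value).
var normalUser = new ClaimsPrincipal(new ClaimsIdentity(
    new[] { new Claim("groups", "00000000-0000-0000-0000-000000000001") }, "demo"));

// A user whose token carried only a pointer to the groups instead of the list.
var overageUser = new ClaimsPrincipal(new ClaimsIdentity(
    new[] { new Claim("_claim_names", "{\"groups\":\"src1\"}") }, "demo"));

Console.WriteLine(HasGroupsOverage(normalUser));  // False
Console.WriteLine(HasGroupsOverage(overageUser)); // True
```

Only when a check like this returns true would the app need to go to Microsoft Graph for the full list, which is where the extra GraphClient setup and permissions come in.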

Note that in the above I have not described how to install the GraphClient as there are a few complications, mainly regarding permissions.

It is all rather gnarly and I was surprised that years after I coded the first version of this application there is still no simple method such as graphClient.isMemberOf() that discovers if a user is a member of a specific group; or a simple way of doing this that supports nested groups which are often easier to manage than direct membership.

Further it is disappointing to get errors with Visual Studio templates that one would have thought are commonly used.

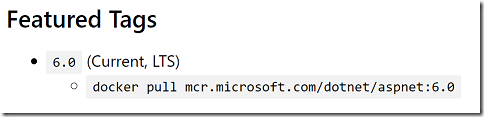

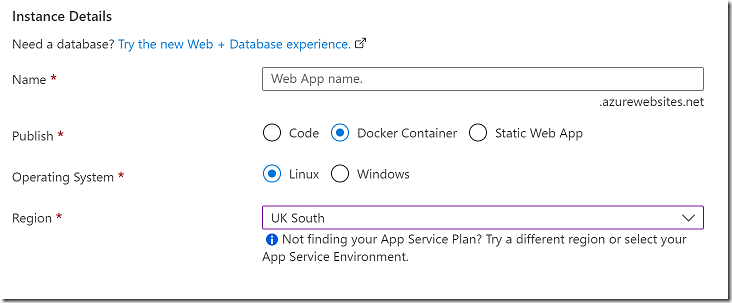

And another time perhaps I will describe the issues I had deploying the application to Azure App Service – yes, more errors despite a very simple application and using the latest Visual Studio wizard.