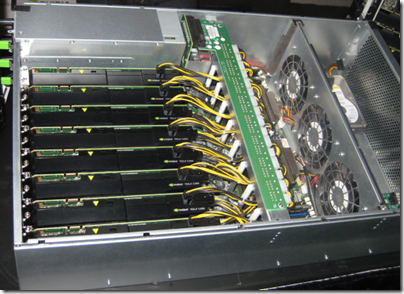

A new standard for accelerating C, C++ and Fortran code with compiler directives has been announced at the SC11 supercomputing conference in Seattle. The new standard is called OpenACC and has been created by NVIDIA, Cray, PGI (The Portland Group) and CAPS enterprise.

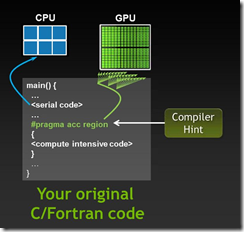

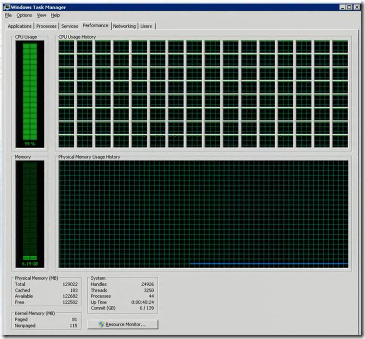

OpenACC compiler directives are code annotations that enable the compiler to parallelise code while ensuring thread-safety. The big difference between OpenACC and the existing OpenMP standard is that OpenACC primarily targets the GPU, whereas OpenMP is generally CPU-only. That said, OpenACC can also target the CPU, so it is flexible; the idea is that it will adapt to the target system.
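To make the idea of a directive concrete, here is a minimal sketch of the same loop annotated once for OpenACC and once for OpenMP. The function names `saxpy_acc` and `saxpy_omp` are my own, chosen for illustration, and the data clauses assume the arrays hold exactly `n` elements. A compiler that does not understand a pragma simply ignores it and runs the loop serially, so the code stays ordinary C.

```c
#include <assert.h>
#include <stddef.h>

/* y = a*x + y, annotated for an OpenACC compiler.
 * copyin: x is only read on the accelerator.
 * copy:   y is sent to the accelerator and copied back afterwards.
 * Function name and signature are illustrative, not from any library. */
void saxpy_acc(int n, float a, const float *x, float *y)
{
    assert(n >= 0 && x != NULL && y != NULL);

    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}

/* The same loop with the OpenMP equivalent, which shares the
 * iterations among CPU threads instead of offloading them. */
void saxpy_omp(int n, float a, const float *x, float *y)
{
    assert(n >= 0 && x != NULL && y != NULL);

    #pragma omp parallel for
    for (int i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}
```

In both cases the programmer is asserting that the loop iterations are independent; the directive does not check this.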

OpenACC is “defined to be interoperable with OpenMP” according to the FAQ, and the OpenMP CEO hopes for some future integration, though OpenACC seems to have been developed independently, which may cause some tension.

OpenACC is expected to ship during the first half of 2012 on compilers from PGI, Cray and CAPS enterprise. The NVIDIA involvement may make you wonder whether it is specific to NVIDIA GPUs; the answer is “maybe”. The FAQ says:

Will OpenACC run on AMD GPUs?

– It could, it requires implementation, there is no reason why it couldn’t

Will OpenACC run on top of OpenCL?

– It could, it requires implementation, there is no reason why it couldn’t

Will AMD/Intel/MS/XX support this?

– As this is just announced we can’t speak to the rate of external adoption or participation.

Will OpenACC run on NVIDIA GPUs with CUDA?

– Yes. Programmers may wish to develop some code using directives, and more sophisticated code using CUDA C, CUDA C++ or CUDA Fortran.

Spot the Yes in the above! Still, you can scarcely blame NVIDIA for supporting its own GPU family; and I have been impressed with how the company works with the scientific and academic community to realise the potential of massively parallel computing.

OpenACC is about democratising parallelism rather than advancing the state of the art. The best optimisation still comes from more complex programming, but directives make some remarkable performance improvements easy to achieve.
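As a rough illustration of that trade-off, the sketch below takes one small step beyond a single directive: a `data` region keeps the arrays on the accelerator across two loops, which may avoid copying them back and forth between the loops. The function name `scale_then_add` is hypothetical, and whether this helps in practice depends on the compiler and hardware.

```c
#include <assert.h>
#include <stddef.h>

/* Computes y = a*y + x in two passes to show a shared data region.
 * Illustrative only: the name and the two-pass split are assumptions
 * made for this example. */
void scale_then_add(int n, float a, const float *x, float *y)
{
    assert(n >= 0 && x != NULL && y != NULL);

    /* Transfer x and y once for the whole region. */
    #pragma acc data copyin(x[0:n]) copy(y[0:n])
    {
        /* present: the arrays are already on the accelerator. */
        #pragma acc parallel loop present(y[0:n])
        for (int i = 0; i < n; ++i) {
            y[i] = a * y[i];
        }

        #pragma acc parallel loop present(x[0:n], y[0:n])
        for (int i = 0; i < n; ++i) {
            y[i] = y[i] + x[i];
        }
    }
}
```

Hand-written CUDA would give finer control still, at the cost of rewriting the loops as kernels.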