Oppo will launch Reno 2 on 16th October, under the heading “Make the world your studio”. Oppo mobiles have been making an impression as an example of high quality technology at a price a bit less than you would pay for a Samsung or a Sony – similar in that respect to Huawei, though currently without the challenge Huawei faces in trying to market Android devices without Google Play services.

Oppo is a brand of BBK Electronics Corp, a Chinese company based in Chang’an, Dongguan. Other BBK Electronics brands include OnePlus and Vivo. If you combine the market share of all these brands, BBK is in the top four globally.

My first encounter with the Reno brand was in May this year when I attended the launch of the Reno 10x Zoom and the Reno 5G (essentially the 10x Zoom with 5G support) in London. Unfortunately I was not able to borrow a device for review until recently; however I have been using a 10x Zoom for the last couple of weeks and found it pretty interesting.

First impression: this is a large device. It measures 7.72 x 16.2 x 0.93cm and weighs about 215g. The AMOLED screen diagonal is 16.9cm and the resolution 2340 x 1080 pixels.
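As a quick check on those screen figures, pixel density follows from the resolution and the diagonal. Here is a minimal sketch in Python; the function name is purely illustrative, and the only inputs are the 2340 x 1080 resolution and 16.9cm diagonal quoted above.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_cm):
    """Pixel density from the resolution and the physical screen diagonal."""
    diagonal_px = math.hypot(width_px, height_px)  # diagonal length in pixels
    diagonal_in = diagonal_cm / 2.54               # centimetres to inches
    return diagonal_px / diagonal_in

ppi = pixels_per_inch(2340, 1080, 16.9)
print(round(ppi))  # prints 387
```

That matches the 387 ppi in the spec summary. The 6.6″ figure is the same diagonal rounded to one decimal place.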

Second impression: it takes amazing pictures. To me, this is not just a matter of specification. I am not a professional photographer, but do take thousands of photos for work. Unfortunately I don’t have an iPhone 11 or Samsung Galaxy Note 10 to test against. The mobile I’ve actually been using of late is the Honor 10 AI, a year older and considerably cheaper than the Reno but with a decent camera. I present the snaps below not as a fair comparison but to show how the Reno 10x Zoom compares to a more ordinary smartphone camera.

Here is a random pic of some flowers taken with the Honor 10 AI (left) and the Reno 10x Zoom (right):

Not too much in it? Try zooming in on some detail (same pic, cropped):

The Reno 10x Zoom also, believe it or not, has a zoom feature. Here is a detail from my snap of an old coin at 4.9x, hand-held, no tripod.

There is something curious about this. Despite the name, the Reno has 5x optical zoom, with 10x and more (in fact up to 60x) available through digital processing. You soon learn that the quality is best when using the optical zoom alone; there is a noticeable change when you exceed 5x and not a good one.
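One way to see why quality might fall away past 5x is to treat anything beyond the optical limit as a plain crop of the telephoto image. This is a rough sketch under that assumption only: the phone's real processing is not documented here and is likely more sophisticated, and the function name is hypothetical. The 13MP and 5x figures are the ones quoted in this review.

```python
def remaining_megapixels(sensor_mp, optical_zoom, requested_zoom):
    """Megapixels left if zoom beyond the optical limit is a simple crop.

    ASSUMPTION: pure digital cropping with no multi-frame or other
    processing. Requests at or below the optical limit are treated as
    using the full sensor, which is a simplification.
    """
    if requested_zoom <= optical_zoom:
        return sensor_mp
    crop = requested_zoom / optical_zoom  # linear crop factor
    return sensor_mp / crop ** 2          # area shrinks with the square

for zoom in (5, 10, 60):
    print(f"{zoom}x: {remaining_megapixels(13, 5, zoom):.2f} MP")
```

Under this simple model, 10x leaves about a quarter of the telephoto sensor's pixels, and 60x leaves less than a tenth of a megapixel.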

The image stabilisation seems excellent.

The UI for zoom is therefore unfortunate. The way it works is that when you open the camera a small 1x button appears in the image. Tap it, and it goes to 2x. Tap again for 6x, and again for 10x. If you want other settings you either use pinch and zoom, or press and hold on the button, whereupon a scale appears. Since there is a drop-off in quality after 5x, it would make more sense for the tap to give this setting.

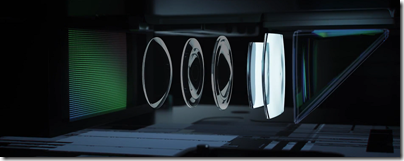

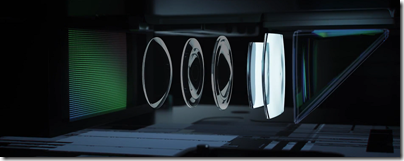

There are four camera lenses on the Reno. On the rear are a 48MP f/1.7 wide, a 13MP f/2.4 telephoto, and an 8MP f/2.2 ultra-wide. The telephoto lens has a periscope design (like Huawei’s P30 Pro), meaning that the lens extends along the length of the phone internally, using a prism to bend the light, so that the lens can be longer than a thin smartphone normally allows.

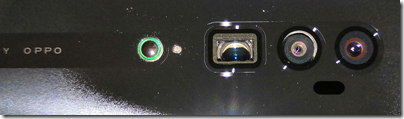

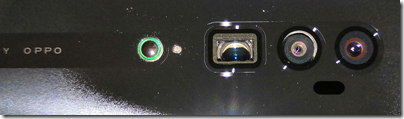

There is also a small bump (surrounded by green in the pic below) which is a thoughtful feature to protect the lenses if the device is placed on a flat surface.

On the front is a 16MP f/2.0 sensor which also gives great results, excellent for selfies or video conferencing. The notable feature here is that it is hinged and, when not in use, slides into the body of the phone. This avoids having a notch. Nice feature.

ColorOS and special features

We might wish that vendors just use stock Android but they prefer to customize it, probably in the hope that customers, once having learned a particular flavour of Android, will be reluctant to switch.

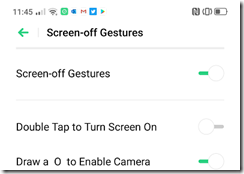

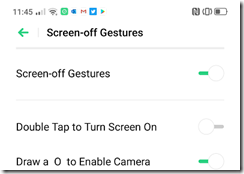

The Oppo variant is called ColorOS. One good thing about it is that you can download a manual which is currently 335pp. It is not specific to the Reno 10x Zoom and some things are wrong (it references a non-existent headphone jack, for example), but it helps if you want to understand the details of the system. You might not otherwise know, for example, that there is a setting which lets you open the camera by drawing an O gesture on the lock screen.

How many customers will find and read this manual? My hunch is relatively few. Most people get a new smartphone, transfer their favourite apps, tap around a bit to work out how to set a few things as they want them, and then do not worry.

If you have a 10x, I particularly recommend reading the section on the camera as you will want to understand each feature and how to operate it.

The Reno 10x does have quite a few smart features. Another worth noting is “Auto answer when phone is near ear”. You can also set it to switch automatically from speaker to receiver when you hold the phone to your ear.

Face unlock is supported but you are not walked through setting this up automatically. You are prompted to enrol a fingerprint though. The fingerprint sensor is under glass on the front – I prefer them on the rear – but there is a nice feature where the fingerprint area glows when you pick up the device. It works but it is not brilliant if conditions are sub-optimal, for example with a damp hand.

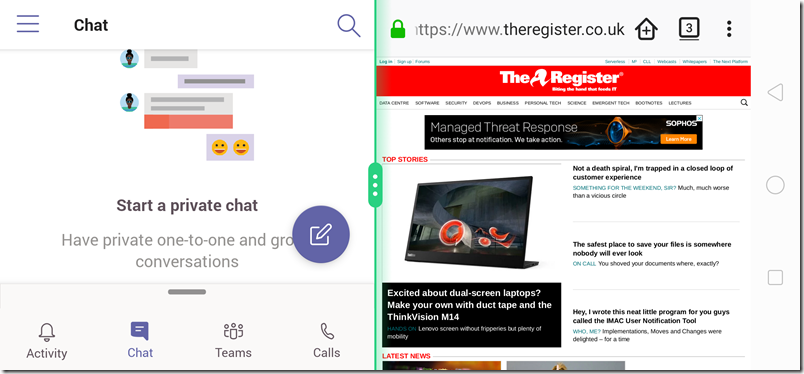

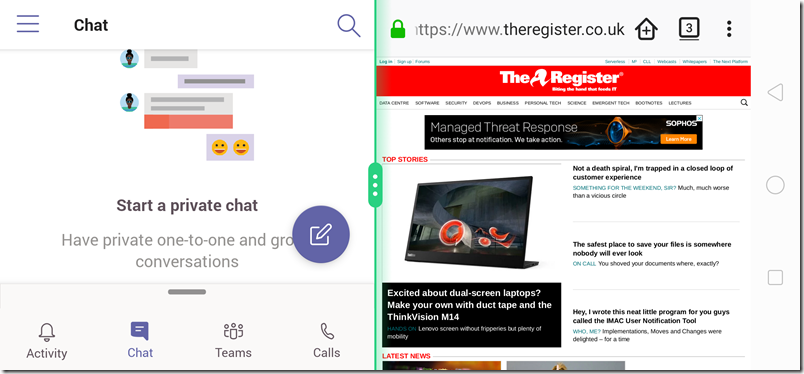

The Reno 10x Zoom supports split screen mode via a three-finger gesture. With a large high-resolution screen this may be useful. Here is Microsoft Teams (left) with a web browser (right).

Settings – Smart services includes Riding mode, designed for cycling, which will disable all notifications except whitelisted calls.

VOOC (Voltage Open Loop Multistep Constant-current Charging) is Oppo’s fast charging technology.

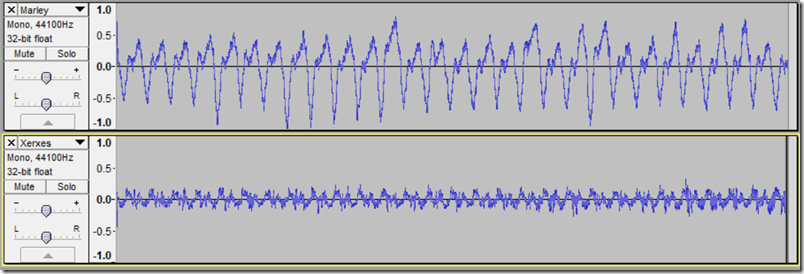

Dolby Atmos audio is included and there are stereo speakers. Sound from these is nothing special, but sound from the bundled earbuds is excellent.

Quick conclusions

A Reno 10x Zoom is not a cheap smartphone, but it does cost less than the latest flagship devices from Apple or Samsung. If you are like me and need a great camera, it strikes me as a good choice. If you do not care much about the camera, look elsewhere.

Things I especially like:

- Excellent camera

- No notch

- Great audio quality through supplied earbuds

- Thoughtful design and high quality build

There are a few things against it though:

- Relatively bulky

- No wireless charging

- No headphone jack (less important now that wireless earbuds are common)

Spec summary

OS: Android 9 with ColorOS 6

Screen: AMOLED 6.6″ 2340 x 1080 at 387 ppi

Chipset: Qualcomm Snapdragon 855 SM8150, 8 Core Kryo 485 2.85 GHz

Integrated GPU: Qualcomm Adreno 640

RAM: 8GB

Storage: 256GB

Dual SIM: Yes – 2 x Nano SIM or SIM + Micro SD

NFC: Yes

Sensors: Geomagnetic, Light, Proximity, Accelerometer, Gyro, Laser focus, dual-band GPS

WiFi: 802.11 a/b/g/n/ac, 2.4GHz/5GHz, hotspot support

Bluetooth: 5.0

Connections: USB Type-C with OTG support.

Size and weight: 162 mm x 77.2 mm x 9.3 mm, 215g

Battery: 4065 mAh. No wireless charging.

Fingerprint sensor: Front, under glass

Face unlock: Yes

Rear camera: 48MP + 8MP + 13MP

Front camera: 16MP