Microsoft’s PR team has helpfully summarised many of the announcements at the Ignite event, kicking off today in Orlando. I count 82, but you might make it fewer or many more, depending on what you call an announcement. And that is not including the business apps announcements made at the end of last week, most notably the arrival of the HoloLens-based Remote Assist in Dynamics 365.

Not all announcements are equal. Some, like the release of Windows Server 2019, are significant but not really news; we knew it was coming around now, and the preview has been around for ages. Others, like larger Azure managed disk sizes (8, 16 and 32TB), are cool if that is what you need, but hardly surprising; the specification of available cloud infrastructure is continually being enhanced.

Note that this post is based on what Microsoft chose to reveal to press ahead of the event, and there is more to come.

It is worth observing, though, that of these 82 announcements only 3 or 4 are not cloud-related:

- SQL Server 2019 public preview

- [Windows Server 2019 release] – I am bracketing this because many of the new features in Server 2019 are Azure-related, and it is listed under the heading Azure Infrastructure

- Chemical Simulation Library for Microsoft Quantum

- Surface Hub 2 release promised later this year

Microsoft’s journey from being an on-premises company, to being a service provider, is not yet complete, but it is absolutely the focus of almost everything new.

I will never forget an attendee at a previous Microsoft event a few years back telling me, “this cloud stuff is not relevant to us. We have our own datacenter.” I cannot help wondering how much Office 365 and/or Azure that person’s company is consuming now. Of course on-premises servers and applications remain important to Microsoft’s business, but it is hard to swim against the tide.

Ploughing through 82 announcements would be dull for me to write and you to read, so here are some things that caught my eye, aside from those already mentioned.

1. Azure confidential computing in public preview. A new series of VMs using Intel’s SGX technology lets you process data in a hardware-enforced trusted execution environment.

2. Cortana Skills Kit for Enterprise. Currently invite-only, this is intended to make it easier to write business bots “to improve workforce productivity” – or perhaps, an effort to reduce the burden on support staff. I recall examples of using conversational bots for common employee queries like “how much holiday allowance do I have remaining, and which days can I take off?” As to what is really new here, I have yet to discover.

3. A Python SDK for Azure Machine Learning. Important given the popularity of Python in this space.

4. Unified search in Microsoft 365. Is anyone using Delve? Maybe not, which is why Microsoft is bringing a search box to every cloud application, which is meant to use Microsoft Graph, AI and Bing to search across all company data and bring you personalized results. Great if it works.

5. Azure Digital Twins. With public preview promised on October 15, this lets you build “comprehensive digital models of any physical environment”. Once you have the model, there are all sorts of possibilities for optimization and safe experimentation.

6. Azure IoT Hub to support the Android Things platform via the Java SDK. Another example of Microsoft saying, use what you want, we can support it.

7. Azure Data Box Edge appliance. The assumption behind Edge computing is both simple and compelling: it pays to process data locally so you can send only summary or interesting data to the cloud. This appliance is intended to simplify both local processing and data transfer to Azure.

8. Azure Functions 2.0 hits general availability. Supports .NET Core and Python.

9. Helm repositories in Azure Container Registry, now in public preview.

10. Windows Autopilot support extended to existing devices. This auto-configuration feature previously only worked with new devices. Requires the Windows 10 October Update, or an automated upgrade to it.
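
To make the edge computing idea in item 7 concrete, here is a minimal Python sketch of "process locally, send only summary or interesting data". It does not use any Azure or Data Box Edge API; the function name `summarise_readings`, the `threshold` parameter and the sample readings are all made up for illustration.

```python
from statistics import mean


def summarise_readings(readings, threshold):
    """Reduce a batch of local sensor readings to what is worth uploading.

    Hypothetical helper: 'threshold' is an assumed cut-off above which a
    reading counts as interesting enough to send in full.
    """
    if not readings:
        return {"count": 0, "mean": None, "max": None, "outliers": []}
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the threshold are kept individually;
        # everything else is represented by the summary figures.
        "outliers": [r for r in readings if r > threshold],
    }


# Five made-up temperature readings collected on the local device.
batch = [20.1, 20.3, 19.8, 35.2, 20.0]

# This small dictionary, not the raw batch, is what would go to the cloud.
payload = summarise_readings(batch, threshold=30.0)
print(payload)
```

With thousands of readings per minute rather than five, the payload stays roughly the same size, which is the whole point of doing the work at the edge.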

Office and Office 365

In the Office 365 space there are some announcements:

1. LinkedIn integration with Office 365. Co-author documents and send emails to LinkedIn contacts, and surface LinkedIn information in meeting invites.

2. Office Ideas. Suggestions as you work to improve the design of your document, or suggest trends and charts in Excel. Sounds good but I am sceptical.

3. OneDrive for Mac gets Files on Demand. A smarter way to use cloud storage, downloading only files that you need but showing all available documents in Mac Finder.

4. New staff scheduling tools in Teams. Coming in October. “With new schedule management tools, managers can now create and share schedules, employees can easily swap shifts, request time off, and see who else is working.” Maybe not a big deal in itself, but Teams is huge, as I previously noted. Apparently the largest Team is over 100,000 strong now and there are 50+ out there with 10,000 or more members.

Windows Virtual Desktop

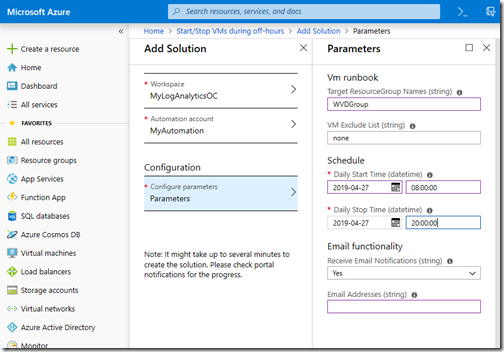

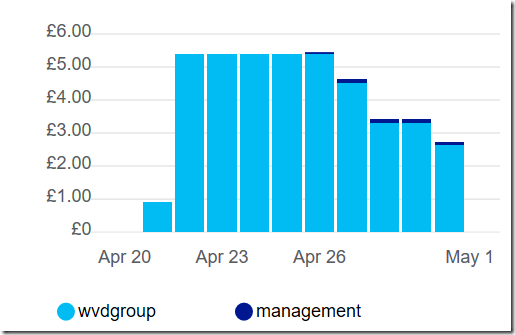

This could be nothing, or it could be huge. I am working on the basis of a one-paragraph statement that promises “virtualized Windows and Office on Azure … the only cloud-based service that delivers a multi-user Windows 10 experience, is optimized for Office 365 Pro Plus … with Windows Virtual Desktop, customers can deploy and scale Windows and Office on Azure in minutes, with built-in security and compliance.”

A preview is targeted for the end of 2018.

Virtual Windows desktops are already available on Azure, via partnership with Citrix or VMware Horizon, but Microsoft has held back from what is technically feasible in order to protect its Windows and Office licensing income. By the time you have paid for licenses for Windows Server, Remote Access per user, Office per user, and whatever third-party technology you are using, it gets expensive.

This is mainly about licensing rather than technology, since supporting multiple users running Office applications is now a light load for a modern server.

If Microsoft truly gets behind a pure first-party solution for hosted desktops on Azure at a reasonable cost, the take-up would be considerable, since it is a handy solution for many scenarios. This would not please its partners though, nor the many hosting companies which offer this.

On the other hand, Microsoft may want to compete more vigorously with Amazon Web Services and its Workspaces offering. Workspaces is still Windows, but of course integrates nicely with AWS solutions for storage, directory, email and so on, so there is a strategic aspect here.

Update: A little more on Windows Virtual Desktop here.

More details soon.