Do small businesses still need a server? In my case, I do still run a couple, mainly for trying out new releases of server products like Windows Server 2012 R2, System Center 2012, Exchange and SharePoint. The ability to quickly run up VMs for testing software is of huge value; you can do this with just a desktop but running a dedicated hypervisor is convenient.

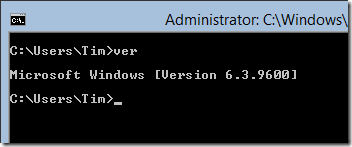

My servers run Hyper-V Server 2012 R2, the free version, which is essentially Server Core with just the Hyper-V role installed. I have licenses for full Windows Server but have stuck with the free one partly because I like the idea of running a hypervisor that is stripped down as far as possible, and partly because dealing with Server Core has been educational; it forces you into the command line and PowerShell, which is no bad thing.

Over the years I have bought several of HP’s budget servers and have been impressed; they are inexpensive, especially if you look out for “top value” deals, and work reliably. In the past I’ve picked the ML110 range, but this is now discontinued (though the G7 is still around if you need it). The main choice now is between two models. The small ProLiant Gen8 MicroServer has space for 4 SATA drives, takes up to 16GB RAM in 2 PC3 DDR3 DIMM slots, and supports the dual-core Intel Celeron G1610T or Pentium G2020T. The larger ML310e Gen8 series has space for 4 3.5" or 8 small form factor SATA drives and 4 PC3 DDR3 DIMM slots for up to 32GB RAM, and supports Core i3 or Xeon E3 processors with up to 4 cores. Both use the Intel C204 chipset.

I picked the ML310e because a 4-core processor with 32GB RAM is gold for use with a hypervisor, and there is not a huge difference in cost. While in a production environment it probably makes sense to use official HP parts, I used non-HP RAM and paid around £600 plus VAT for a system with a Xeon E3-1220 v2 4-core CPU, 32GB RAM and a 500GB drive. I added two budget 2TB SATA drives to make up a decent server for less than £800 all-in; it will probably last three years or more.

There is now an HP ML310e Gen8 v2, which might partly explain why the first version is on offer at a low price; the differences do not seem substantial, except that version 2 has two USB 3.0 ports on the rear in place of four USB 2.0 ports, and supports Xeon E3 v3 processors.

Will I replace this server? The shift to the cloud means that I may not bother. I was not even sure about this one. You can run up VMs in the cloud easily, on Amazon EC2 or Microsoft Azure, and for test and development that may be all you need. That said, I like the freedom to try things out without worrying about subscription costs. I have also learned a lot by setting up systems that would normally be run by larger businesses; it has given me a better understanding of the problems IT administrators encounter.

So how is the server? It is just another box of course, but feels well made. There is an annoying lock on the front cover; you can’t remove the side panel unless this is unlocked, and you can’t remove the key unless it is locked, so the solution if you do not need this little bit of physical security is to leave the key in the lock. It does not seem worth much to me since a miscreant could easily steal the entire server and rip off the panel at leisure.

On the front you get 4 USB 2.0 ports, UID LED button, NIC activity LED, system health LED and power button.

The main purpose of the UID (Unit Identifier) button is to help identify your server from the rear if it is in a rack. You press the button on the front and an LED lights at the rear. Not that much use in a micro tower server.

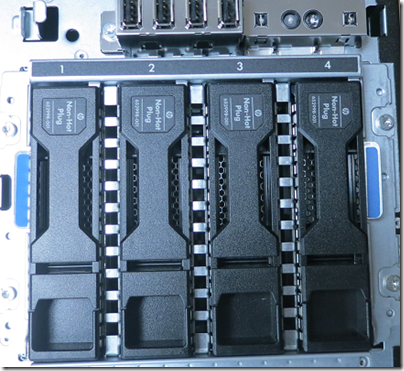

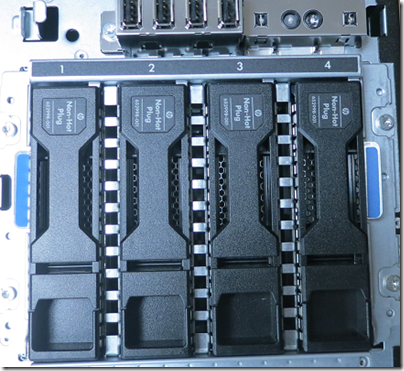

Remove the front panel and you can see the drive cage:

Hard drives are in caddies which are easily pulled out for replacement. However, note the “Non hot plug” label on these units; you must turn the server off first.

You might think that you have to buy HP drives, which come packaged in caddies. This is not so; if you remove one of the caddies you find it is not just a blank, but will take any standard 3.5" drive. You remove the metal brackets shown in the image below, fit the drive in their place and screw the side panels back on.

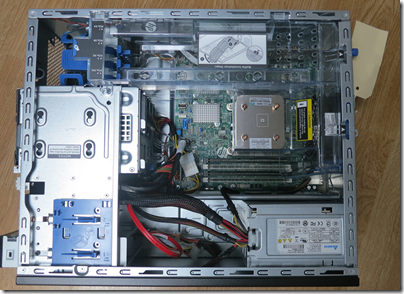

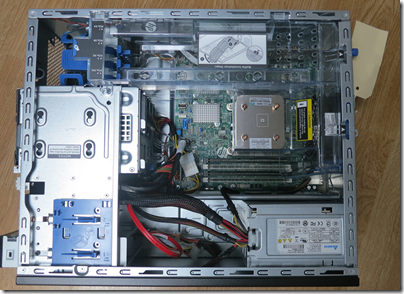

Take the side panel off and you will see a tidy construction with the 350W power supply, 4 DIMM slots, 4 PCI Express slots (one x16, two x8, one x4), and a transparent plastic baffle that ensures correct air flow.

The baffle is easily removed.

What you see is pretty much as it was out of the box, but with RAM fitted, two additional drives, and a PCI Express USB 3.0 card added since (annoyingly) the server comes with USB 2.0 only; this is fixed in version 2.

On the rear are four more USB 2.0 ports, two 1Gb NIC ports, a blank where a dedicated ILO (Integrated Lights Out) port would be, and video and serial connectors.

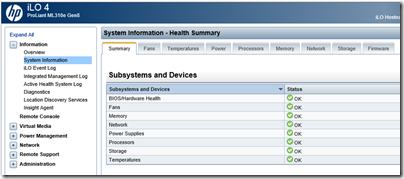

Although there is no ILO port on my server, ILO is installed. The luggage label shows the DNS name you need to access it. If you can’t get at the label, you can look at your DHCP server and see what address has been allocated to ILOxxxxxxxxx and use that. Once you log in with a web browser you can change this to a fixed IP address; probably a good idea in case, in a crisis, the DHCP server is not working right.
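If your DHCP server can show or export its lease table, picking out the ILO entry is just a prefix match on the hostname. The sketch below assumes a hypothetical minimal `Lease` record holding only a hostname and an IP address; how you actually get the leases out of your own DHCP server will vary, and the sample names and addresses are invented.

```python
from typing import List, NamedTuple


class Lease(NamedTuple):
    """One DHCP lease: a hypothetical, minimal record for illustration."""
    hostname: str
    ip: str


def find_ilo_leases(leases: List[Lease]) -> List[Lease]:
    """Return leases whose hostname starts with "ILO" (case-insensitive),
    matching the ILO-prefixed default name printed on the label."""
    return [lease for lease in leases if lease.hostname.upper().startswith("ILO")]


if __name__ == "__main__":
    # Invented sample data: replace with the lease list from your own DHCP server.
    sample = [
        Lease("desktop01", "192.168.1.20"),
        Lease("ILOABC123XYZ", "192.168.1.31"),
        Lease("printer", "192.168.1.40"),
    ]
    for lease in find_ilo_leases(sample):
        print(lease.hostname, lease.ip)
```

A plain prefix match can also catch unrelated names that happen to start with "ilo", so check the result against the label when you can.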

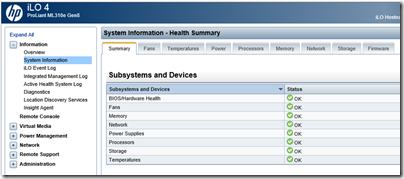

ILO is one of the best things about HP servers. It is a little embedded system, isolated from whatever is installed on the server, which gets you access to status and troubleshooting information.

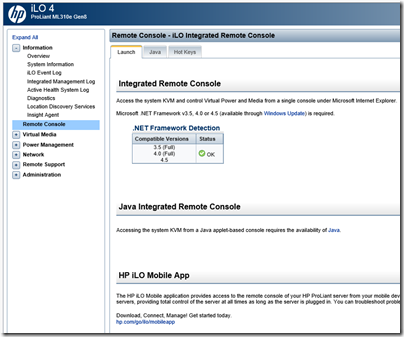

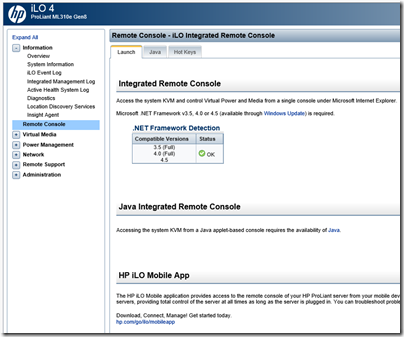

Its best feature is the remote console which gives you access to a virtual screen, keyboard and mouse so you can get into your OS from a remote session even when the usual remote access techniques are not working. There are now .NET and mobile options as well as Java.

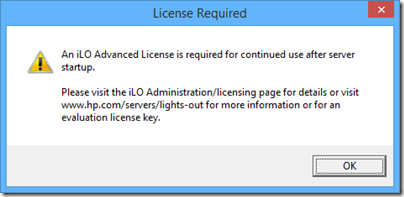

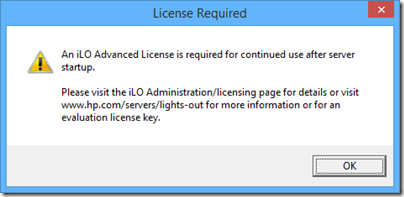

Unfortunately there is a catch. Try to use this and a license will be demanded.

However, you can sign up for an evaluation that works for a few weeks. In other words, your first disaster is free; after that you have to pay. The license covers several servers and is not good value for an individual one.

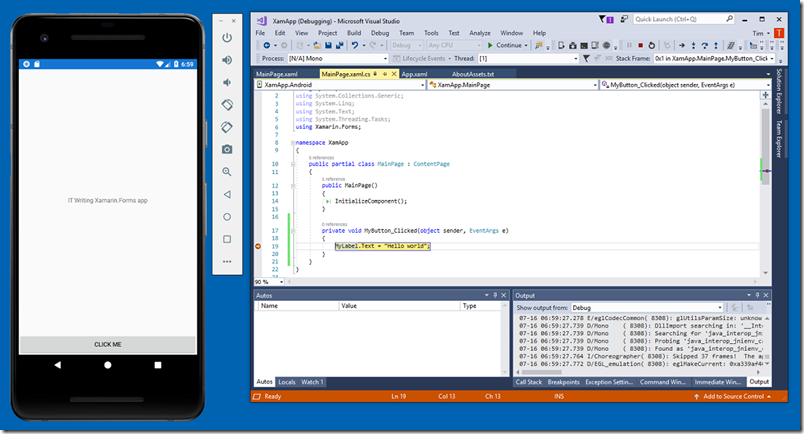

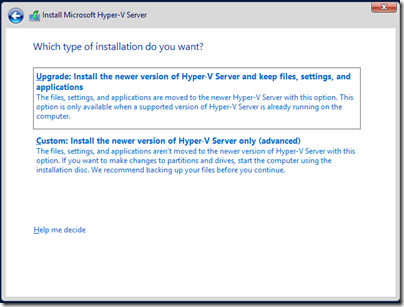

Everything is fine on the hardware side, but what about the OS install? This is where things went a bit wrong. HP has a system called Intelligent Provisioning built in. You pop your OS install media in the DVD drive (or there are options for network install), run a wizard, and Intelligent Provisioning will update the server’s firmware, set up RAID, and install your OS with the necessary drivers and HP management utilities included.

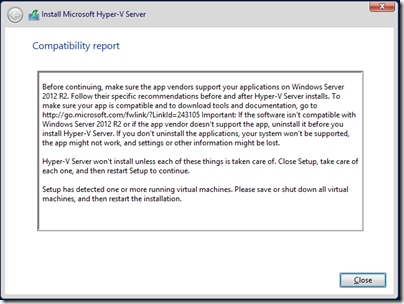

I don’t normally bother with all this, but I thought I should give it a try. Unfortunately Server 2012 R2 is not supported, so I tried the Server 2012 x64 option, hoping it would also work with Hyper-V Server. No go: it failed with an unattend script error.

Next I set up RAID manually using the nice HP management utility in the BIOS and tried to install using the storage drivers saved to a USB pen drive. It seemed to work but was not stable; it would sometimes fail to boot, and sometimes you could log on and do a few things but Windows would crash with a Kernel_Security_Check_Failure.

Memory problems? Drive problems? It was not clear, but I decided to disable embedded RAID in the BIOS and use standard AHCI SATA. Install proceeded perfectly with no need for additional drivers, and the OS is 100% stable.

I did not want to give up RAID though, so I wondered whether I could use Storage Spaces on Hyper-V Server. Apparently you can. I joined the Hyper-V Server to my domain and then used Server Manager remotely to create a Storage Pool from my pair of 2TB drives, followed by a mirrored virtual disk.
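A two-way mirror writes every block to both drives, so with a pair of drives the usable capacity is that of the smaller drive, not the sum of both. The sketch below does that arithmetic and converts the drive makers’ decimal terabytes into the binary units Windows reports. It is a simplification: it covers only the two-drive case and ignores the space Storage Spaces sets aside for its own metadata, so treat the figures as upper bounds.

```python
TB = 10**12  # decimal terabyte, as used on drive labels
TIB = 2**40  # binary tebibyte, which Windows displays as "TB"


def two_way_mirror_usable_bytes(drive_a: int, drive_b: int) -> int:
    """Usable bytes for a two-way mirror across exactly two drives.

    Every block is stored on both drives, so capacity is limited by the
    smaller one. Storage Spaces metadata overhead is ignored here.
    """
    return min(drive_a, drive_b)


def to_tib(num_bytes: int) -> float:
    """Convert bytes to binary tebibytes."""
    return num_bytes / TIB


if __name__ == "__main__":
    usable = two_way_mirror_usable_bytes(2 * TB, 2 * TB)
    print(f"raw: {to_tib(4 * TB):.2f} TiB, usable mirrored: {to_tib(usable):.2f} TiB")
```

For two 2TB drives this prints `raw: 3.64 TiB, usable mirrored: 1.82 TiB`, which is why a mirrored pair of “2TB” drives shows up in Windows as a little under 2TB.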

My OS drive is not on resilient storage, but I am not too concerned about that. I can back up the OS (wbadmin works), and since it does nothing more than run Hyper-V, recovery should be straightforward if necessary.
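With no backup GUI on Hyper-V Server, a command line you can schedule is the practical option. The sketch below builds a `wbadmin start backup` command using `-allCritical` (include every volume needed for bare-metal recovery) and `-quiet` (no confirmation prompt). The `E:` target is a hypothetical backup drive, the Python wrapper itself is illustrative, and by default it only returns the command; you could equally type the printed command straight into the Server Core prompt.

```python
import subprocess
from typing import List


def build_wbadmin_backup_args(target: str) -> List[str]:
    """Build a wbadmin command that backs up all critical volumes to `target`.

    -allCritical includes every volume needed for bare-metal recovery;
    -quiet suppresses the confirmation prompt.
    """
    return [
        "wbadmin",
        "start",
        "backup",
        f"-backupTarget:{target}",
        "-allCritical",
        "-quiet",
    ]


def backup_os(target: str, run: bool = False) -> List[str]:
    """Return the command; execute it only when run=True (Windows only)."""
    args = build_wbadmin_backup_args(target)
    if run:
        # check=True raises CalledProcessError if wbadmin exits with an error
        subprocess.run(args, check=True)
    return args


if __name__ == "__main__":
    # "E:" is a hypothetical backup volume; substitute your own.
    print(" ".join(backup_os("E:")))
```

Run as-is, this prints `wbadmin start backup -backupTarget:E: -allCritical -quiet`. Keeping execution behind an explicit flag means you can check the command before it touches anything.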

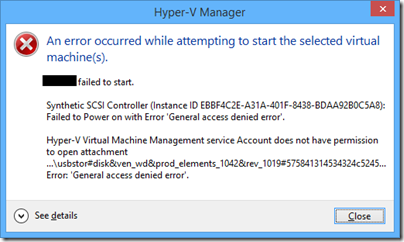

After that I moved across some VMs using a combination of Move and Export with no real issues, other than finding Move too slow on my system for VMs with a large VHD to copy.

Overall the server seems a good bargain; HP may have problems as a company, but the department that turns out budget servers seems to do an excellent job. My only complaint so far is the failure of the storage drivers on Server 2012 R2, which I hope HP will fix with an update.