Microsoft’s Windows Virtual Desktop (WVD) is now in preview. This is virtual Windows desktops on Azure, and the first time Microsoft has come forward with a fully integrated first-party offering. There are also a few notable features:

– You can use a multi-session edition of Windows 10 Enterprise. Normally Windows 10 does not support concurrent sessions: if another user logs on, any existing session is terminated. This is an artificial restriction which is more to do with licensing than technology, and there are hacks to get around it but they are pointless presuming you want to be correctly licensed.

– You can use Windows 7 with free extended security updates to 2023. As standard, Windows 7 end of support is coming in January 2020. Without Windows Virtual Desktop, extended security support is a paid for option.

– Running a VDI (Virtual Desktop Infrastructure) can be expensive but pricing for Windows Virtual Desktop is reasonable. You have to pay for the Azure resources, but licensing comes at no extra cost for Microsoft 365 users. Microsoft 365 is a bundle of Office 365, Windows InTune and Windows 10 licenses and starts at £15.10 or $20 per month. Office 365 Business Premium is £9.40 or $12.50 per month. These are small business plans limited to 300 users.

Windows Virtual Desktop supports both desktops and individual Windows applications. If you are familiar with Windows Server Remote Desktop Services, you will find many of the same features here, but packaged as an Azure service. You can publish both desktops and applications, and use either a client application or a web browser to access them.

What is the point of a virtual desktop when you can just use a laptop? It is great for manageability, security, and remote working with full access to internal resources without a VPN. There could even be a cost saving, since a cheap device like a Chromebook becomes a Windows desktop anywhere you have a decent internet connection.

Puzzling out the system requirements

I was determined to try out Windows Virtual Desktop before writing about it so I went over to the product page and hit Getting Started. I used a free trial of Azure. There is a complication though which is that Windows Virtual Desktop VMs must be domain joined. This means that simply having Azure Active Directory is not enough. You have a few options:

– Azure Active Directory Domain Services (Azure ADDS) This is a paid-for azure service that provides domain-join and other services to VMs on an Azure virtual network. It costs from about £80.00 or $110.00 per month. If you use Azure ADDS you set up a separate domain from your on-premises domain, if you have one. However you can combine it with Azure AD Connect to enable sign-on with the same credentials.

There is a certain amount of confusion over whether you can use WVD with just Azure ADDS and not AD Connect. The docs say you cannot, stating that “A Windows Server Active Directory in sync with Azure Active Directory” is required. However a user reports success without this; of course there may be snags yet to be revealed.

– Azure Active Directory with AD Connect and a site to site VPN. In this scenario you create an Azure virtual network that is linked to your on-premises network via a site to site VPN. I went this route for my trial. I already had AD Connect running but not the VPN. A VPN requires a VPN Gateway which is a paid-for option. There is a Basic version which is considered legacy, so I used a VPNGw1 which costs around £100 or $140 per month.

Update: I have replaced the VPN Gateway with once using the Basic sku (around £20.00 or $26.00 per month) and it still works fine. Microsoft does not recommend this for production but for a very small deployment like mine, or for testing, it is much more cost effective.

This solution is working well for me but note that in a production environment you would want to add some further infrastructure. The WVD VMs are domain-joined to the on-premises AD which means constant network traffic across the VPN. AD integrates with DNS so you should also configure the virtual network to use on-premises DNS. The solution would be to add an Azure-hosted VM on the virtual network running a domain controller and DNS. Of course this is a further cost. Running just Azure ADDS and AD Connect is cheaper and simpler if it is supported.

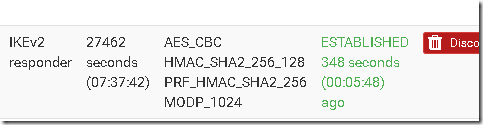

Incidentally, I use pfsense for my on-premises firewall so this is the endpoint for my site-to-site VPN. Initially it did not work. I am not sure what fixed it but it may have been the TCP MSS Clamping referred to here. I set this to 1350 as suggested. I was happy to see the connection come up in pfsense.

Setup options

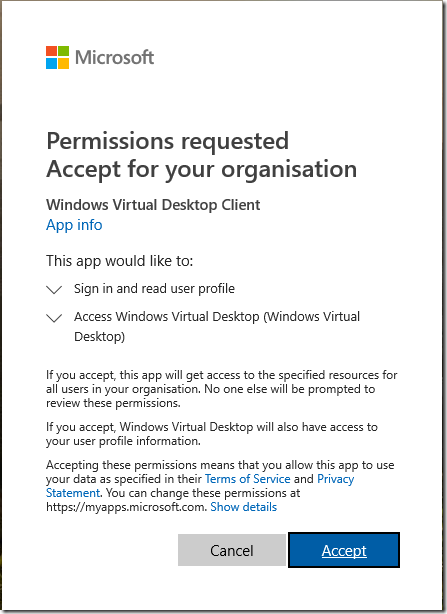

There are a few different ways to set up WVD. You start by setting some permissions and creating a WVD Tenant as described here. This requires PowerShell but it was pretty easy.

The next step is to create a WVD host pool and this was less straightforward. The tutorial offers the option of using the Azure Portal and finding Windows Virtual Desktop – Provision a host pool in the Azure Marketplace. Or you can use an Azure Resource Manager template, or PowerShell.

I used the Azure Marketplace, thinking this would be easier. When I ran into issues, I tried using PowerShell, but had difficulty finding the special Windows 10 Enterprise Virtual Desktop edition via this route. So I went back to the portal and the Azure marketplace.

Provisioning the host pool

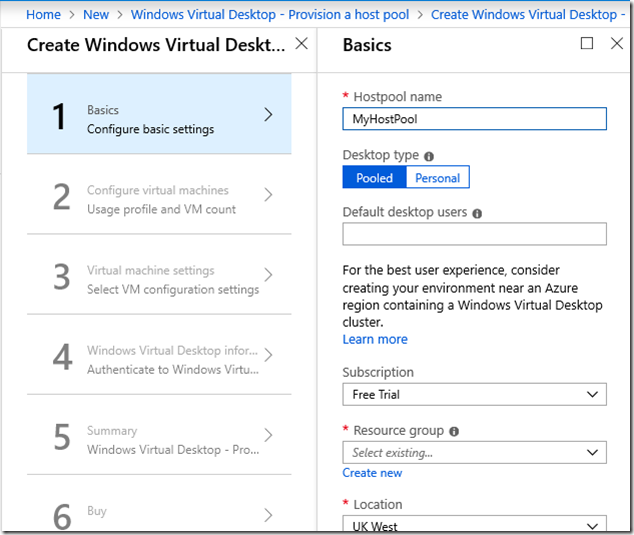

Once your tenant is created, and you have the system requirements in place, it is just a matter of running through a wizard to provision the host pool. You start by naming it and selecting a desktop type: Pooled for multi-session Windows 10, or Personal for a VM per user. I went for the Pooled option.

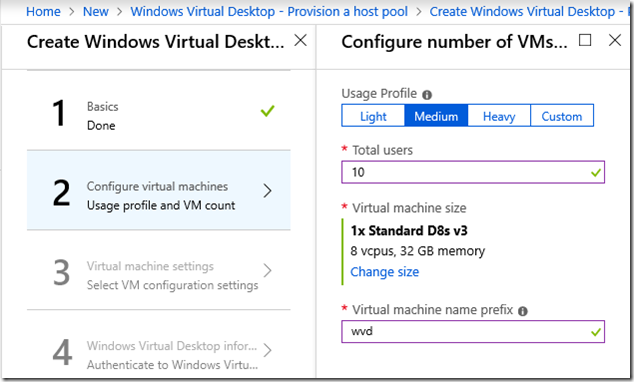

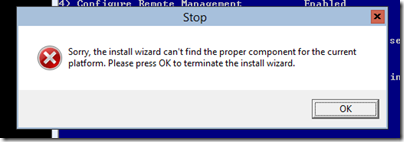

Next comes VM configuration. I stumbled a bit here. Even if you specify just 10 (or 1) users, the wizard recommends a fairly powerful VM, a D8s v3. I thought this would be OK for the trial, but it would not let me continue using the trial subscription as it is too expensive. So I ended up with a D4s v3. Actually, I also tried using a D4 v3 but that failed to deploy because it does not support premium storage. So the “s” is important.

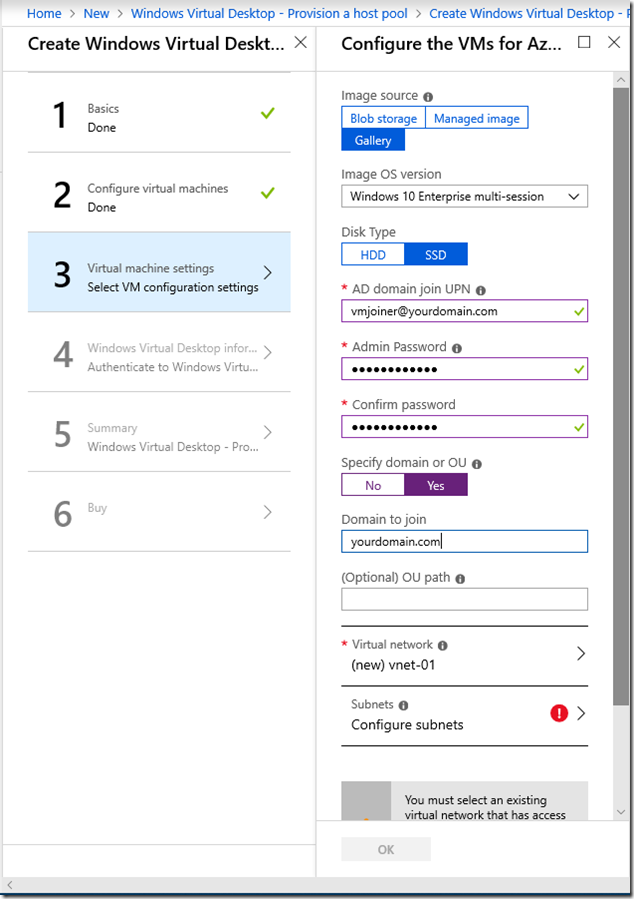

The next dialog has some potential snags.

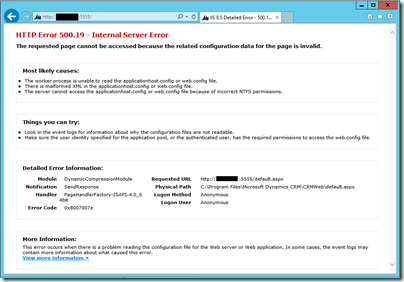

This is where you choose an OS image, note the default is Windows 10 Enterprise multi-session, for a pooled WVD. You also specify a user which becomes the default for all the VMs and is also used to join the VMs to the domain. These credentials are also used to create a local admin account on the VM, in case the domain join fails and you need to connect (I did need this).

Note also that the OU path is specified in the form OU=wvd,DC=yourdomain,DC=com (for example). Not just the name of an OU. Otherwise you will get errors on domain join.

Finally take care with the virtual network selection. It is quite simple: if you are doing what I did and domain-joining to an on-premises domain, the virtual network and subnet needs to have connectivity to your on-premises DCs and DNS.

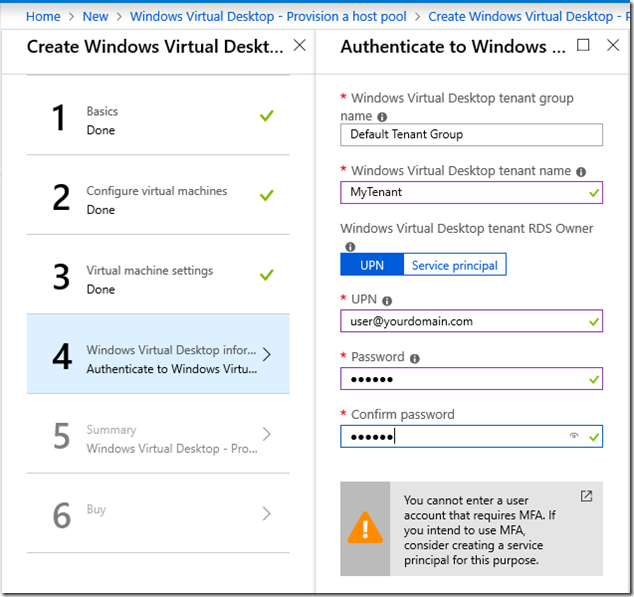

The next dialog is pretty easy. Just make sure that you type in the tenant name that you created earlier.

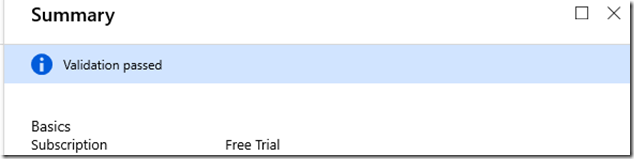

Next you get a summary screen which validates your selections.

I suggest you do not take this validation too seriously. I found it happily validated a non-working configuration.

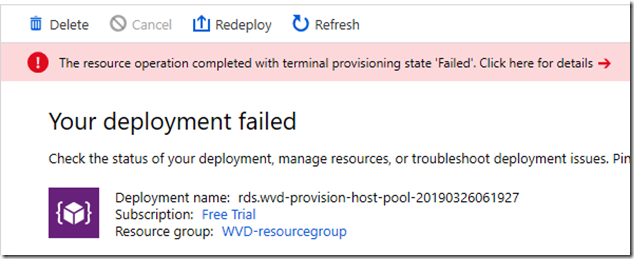

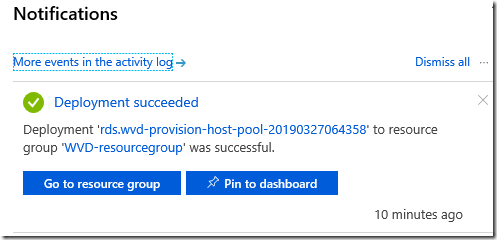

Hit OK and you can deploy your WVD host pool. This takes a few minutes, in my case around 10-15 minutes when it works. If it does not work, it can fail quickly or slowly depending on where in the process it fails.

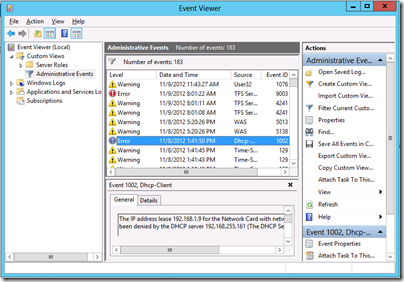

My problem, after fixing issues like using the wrong type of OS image, was failure to join the VM to the domain. I could not see why this did not work. The displayed error may or may not be useful.

If the deployment got as far as creating the VM (or VMS), I found it helpful to connect to a VM to look at its event viewer. I could connect from my on-premises network thanks to the site to site VPN.

I discovered several issues before I got it working. One was simple: I mistyped the name of the vmjoiner user when I created it so naturally it could not authenticate. I was glad when it finally worked.

Connection

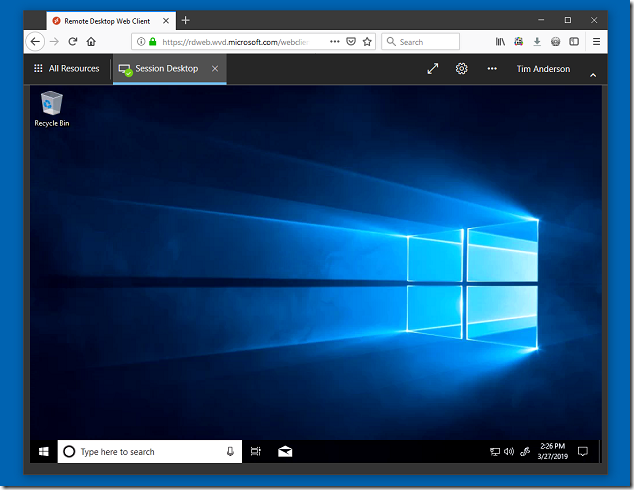

Once I got the host pool up and running my trial WVD deployment was fine. I can connect via a special Remote Desktop Client or a browser. The WVD session is fast and responsive and the VPN to my office rather handy.

Observations

I think WVD is a good strategic move from Microsoft and will probably be popular. I have to note though that setup is not as straightforward as I had hoped. It would benefit Microsoft to make the trial easier to get up and running and to improve the validation of the host pool deployment.

It also seems to me that for small businesses an option to deploy with only Azure ADDS and no dependency on an on-premises AD is essential.

As ever, careful network planning is a requirement and improved guidance for this would also be appreciated.

Update:

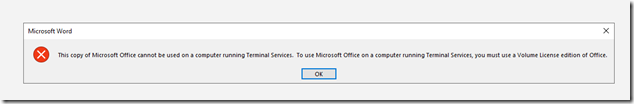

There seems to a problem with Office licensing. I have an E3 license. It installs but comes up with a licensing error. I presume this is a bug in the preview.

This was my mistake as it turned out. You have to take some extra steps to install Office Pro Plus on a terminal server, as explained here. In my case, I just added the registry key SharedComputerLicensing with a setting of 1 under HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Office\ClickToRun\Configuration. Now it runs fine. Thanks to https://twitter.com/getwired for the tip.