One of the messier aspects of the modern web is the situation with JavaScript modules. Modules, and the ability to import code from one module into another in a coherent and efficient manner, are fundamental programming concepts but JavaScript originally had no concept of them. Developers came up with CommonJS, originally for server-side JavaScript, using the keyword require to reference one module from another. Node.js borrowed and refined this system. It does not work in web browsers but can be made to do so by processing the code before deployment to make it browser compatible, or by using require.js or an equivalent.

In the meantime the ECMAScript standard evolved to develop its own module system, often referred to as ES modules or ES6 modules (modules changed a lot in ES6, also known as ES2015). Browsers implement ES6 modules in their JavaScript engines, not CommonJS. The two systems are not compatible.

The situation today is that although most agree that ES6 modules are the way forward, Node.js and a huge amount of existing code still use CommonJS-style Node.js modules. The Node.js team is trying to migrate towards ES6 but it is inherently a difficult path. Deno, an alternative to Node but with a tiny userbase by comparison, uses ES6 modules and that is one of its attractions.

ASP.NET Core and JavaScript

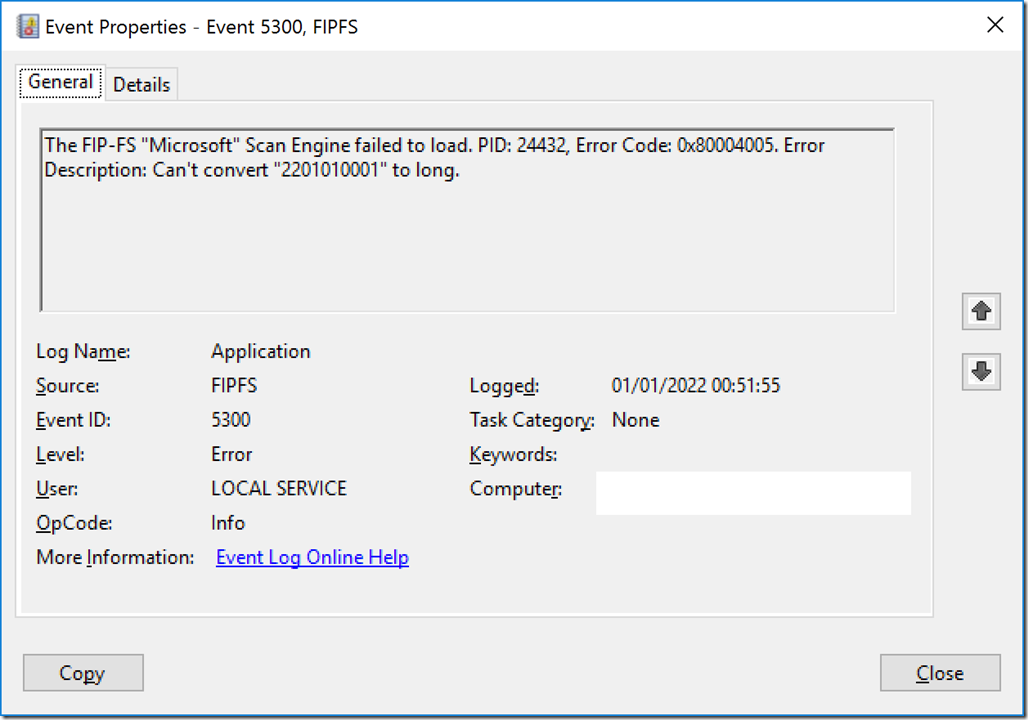

ASP.NET was originally designed to be a server-side code generator like PHP. You write code in C# or VB.NET but what gets sent to the browser is just HTML, CSS and JavaScript. The JavaScript piece was not too important at first, just handy for the occasional client-side confirmation dialog or the like.

This is no longer the case and increasing numbers of applications make heavy use of client-side JavaScript. I am working on a multi-user game, for example, and have written a ton of JavaScript. Perhaps I should have started with a single-page application (SPA) and used React or Vue but I did not; I did what I was most familiar with and started with a basic ASP.NET Core application. I was in a hurry and took full advantage of everything I could get built-in, including ASP.NET Identity and the SignalR real-time communications library.

Everything went fine but I wanted to shift to TypeScript and take advantage of JavaScript minification. There is WebOptimizer, an unofficial project with involvement from the .NET team at Microsoft, but I started going down the WebPack path for reasons you can read here. I got this working more or less, but abandoned it: essentially, WebPack is not designed for multipage projects and I was running into some awkward problems and spending more time on WebPack configuration than on developing my application.

Using ECMAScript modules

I am in favour both of simplicity and of keeping up to date, so I took a closer look at using the JavaScript emitted by the TypeScript compiler more directly, rather than transpiling it to browser-compatible JavaScript (one of the things WebPack does). The main issue is that the JavaScript code will now include import and export statements. You can try to use TypeScript without ever using import or export but I do not recommend it. Browser compatibility is pretty good if you can manage without Internet Explorer.

Quite a lot changes though when you start using import and export and your JavaScript files become modules. Here are a few things I found.

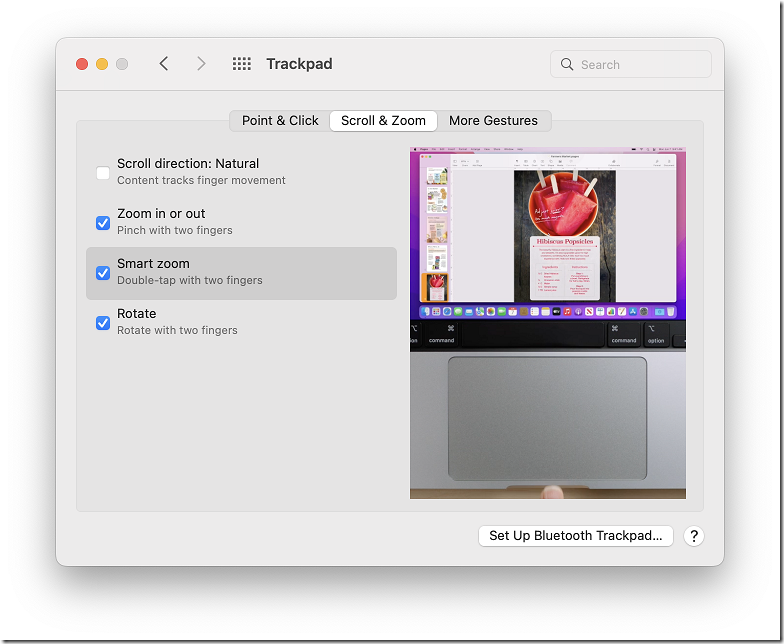

1. Any links to JavaScript files will now need to include type="module" like so:

<script type="module" src="~/js/myscript.js"></script>

2. Any scripts that are imported by other scripts must not use the asp-append-version Tag Helper for cachebusting. Cachebusting prevents old versions of JavaScript files being used because they are cached by the browser. The asp-append-version helper adds a hash value as a query string parameter on the URL used to retrieve the script. The reason it causes problems is that the scripts that import that file do not know about the hash value and use its unadorned name. The browser treats the two URLs as different modules, so it loads the script twice with unpredictable results. Removing asp-append-version is not as bad as it first appears, thanks to ETags that inform the browser whether the file has been modified. See the discussion here.

3. If you have controls that call JavaScript functions on your web page, they will no longer work, because functions declared in a module are scoped to that module and are not visible to inline handlers in the HTML. That is how modules work. There are a few solutions. The best is to attach things like click handlers in JavaScript rather than coding them in the HTML. This can be problematic though, especially if you have server-side ASP.NET code that creates controls that call JavaScript programmatically. An alternative is to add the function to the window object, which you can do either in the ASP.NET Razor .cshtml page or in the TypeScript/JavaScript. I find it easiest to have an initialisation function in the TypeScript that I call from the web page. Bear in mind that module scripts are deferred by default, so they do not run until the browser has finished parsing the page.

4. You need to be aware of side effects. Imagine you have three JavaScript files, page1.js, page2.js and shared.js. Your web page page1.cshtml uses page1.js and page2.cshtml uses page2.js. Both files import functions from shared.js. Everything works fine, but then you find that shared.js needs to import a function from page2.js. You run the application and find that page2.js has been loaded by page1.cshtml. This is by design: when you import the function you are telling the browser to load that file. It could catch you out though if you have initialisation code in both page1.js and page2.js and do not want them both to run.

The solution is either to plan for this and code accordingly, or not to import functions from page1.js or page2.js in shared.js. Of course if you follow the path of least resistance in an ASP.NET Core application and the only JavaScript code directly referenced in .cshtml is site.js then it is not a problem.
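Points 2 and 4 both come down to the same rule: the browser keeps one copy of a module per URL, and importing a file means running it along with everything it imports. The following is a toy model of that rule, not how any real JavaScript engine is implemented. The ToyModuleMap class, the example.test address and the version hash abc123 are all made up for illustration.

```typescript
// Toy model of a browser's module map. Illustration only: real engines
// fetch, parse and link modules in separate phases.
interface ToyModule {
  imports: string[]; // specifiers this module imports
  run: () => void;   // top-level code, including any initialisation
}

class ToyModuleMap {
  private readonly files = new Map<string, ToyModule>();
  private readonly seen = new Set<string>();

  // "Serve" a module at a path; this toy server ignores query strings.
  serve(path: string, definition: ToyModule): void {
    this.files.set(path, definition);
  }

  // Load a specifier relative to a base URL. The full resolved URL,
  // query string included, is the key that decides "already loaded".
  load(specifier: string, base: string): void {
    const url = new URL(specifier, base);
    if (this.seen.has(url.href)) return;
    this.seen.add(url.href);
    const entry = this.files.get(url.pathname);
    if (entry === undefined) throw new Error(`Nothing served at ${url.pathname}`);
    // Dependencies are evaluated before the module that imports them.
    for (const dependency of entry.imports) this.load(dependency, url.href);
    entry.run();
  }
}

const origin = "https://example.test/"; // hypothetical site address

// Point 4: shared.js imports from page2.js, so loading page1.js runs page2.js too.
const sideEffects: string[] = [];
const first = new ToyModuleMap();
first.serve("/js/page1.js", { imports: ["./shared.js"], run: () => sideEffects.push("page1 init") });
first.serve("/js/page2.js", { imports: ["./shared.js"], run: () => sideEffects.push("page2 init") });
first.serve("/js/shared.js", { imports: ["./page2.js"], run: () => sideEffects.push("shared init") });
first.load("/js/page1.js", origin);
// sideEffects is now ["page2 init", "shared init", "page1 init"]

// Point 2: the page asks for shared.js with a version hash, page1.js imports it without one.
const cacheBusting: string[] = [];
const second = new ToyModuleMap();
second.serve("/js/page1.js", { imports: ["./shared.js"], run: () => cacheBusting.push("page1 init") });
second.serve("/js/shared.js", { imports: [], run: () => cacheBusting.push("shared init") });
second.load("/js/shared.js?v=abc123", origin);
second.load("/js/page1.js", origin);
// cacheBusting is now ["shared init", "shared init", "page1 init"]
```

In the first run page2.js initialises even though the page only asked for page1.js. In the second, shared.js initialises twice because the two URLs differ by the query string.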

A working example with Visual Studio 2022

Imagine you have a multi-page ASP.NET Core application such as the one created by default in Visual Studio. It has site.js in wwwroot/js and that is about it. Here is what you might do:

a. Create a directory called Scripts in your project and add a file demo.ts

b. Add a file called tsconfig.json to the Scripts folder. If you use the Add Item wizard it will be prepopulated with some defaults. You will need to add as a minimum a compiler option to support ES modules and an outDir, for example:

{
  "compilerOptions": {
    "module": "es2015",
    "noImplicitAny": false,
    "noEmitOnError": true,
    "removeComments": false,
    "sourceMap": true,
    "target": "es5",
    "outDir": "../wwwroot/js"
  },
  "exclude": [
    "node_modules",
    "wwwroot"
  ]
}

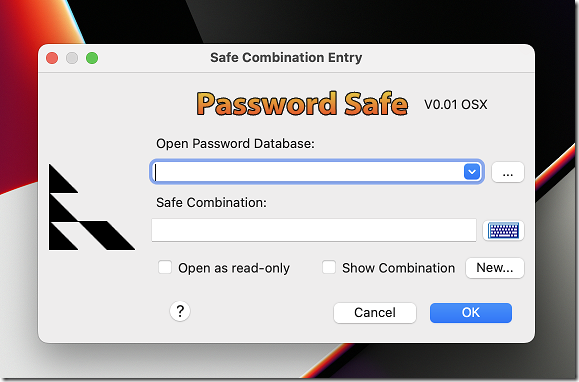

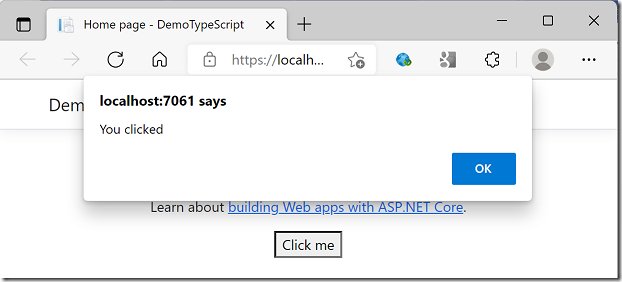

c. demo.ts looks like this:

export function clickme() {
    alert("You clicked");
}

d. Add the following to Index.cshtml:

<script type="module" src="js/demo.js"></script>

<script type="module">
    import {clickme} from './js/demo.js';
    window.clickme = clickme;
</script>

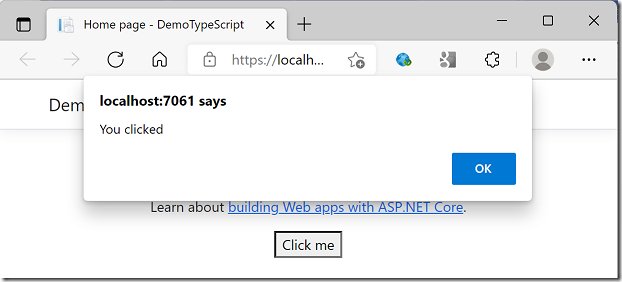

e. Now a button on the page will work, for example:

<p><button onclick="clickme()">Click me</button></p>

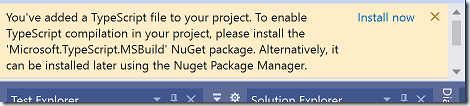

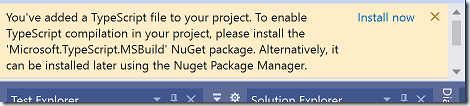
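The example above uses the window object. The other approach mentioned earlier, attaching the click handler in code rather than in the HTML, might look something like the sketch below. It assumes the button is given an id instead of an onclick attribute. The id clickme-button, the attachClickHandler helper and the two small interfaces are hypothetical names chosen for this illustration, and clickme here is a stand-in that logs rather than calling alert so the sketch also runs outside a browser.

```typescript
// Minimal structural types so the sketch does not depend on the DOM typings.
interface Clickable {
  addEventListener(type: "click", listener: () => void): void;
}
interface ElementSource {
  getElementById(id: string): Clickable | null;
}

// Stand-in for the clickme function in demo.ts.
function clickme(): void {
  console.log("You clicked");
}

// Attach the handler in code, so nothing needs to be added to window.
// Returns false if the button is not on the current page.
function attachClickHandler(source: ElementSource, id: string, handler: () => void): boolean {
  const button = source.getElementById(id);
  if (button === null) return false;
  button.addEventListener("click", handler);
  return true;
}

// In a browser, wire up the real document; elsewhere this does nothing.
const browserDocument = (globalThis as any).document as ElementSource | undefined;
if (browserDocument) {
  attachClickHandler(browserDocument, "clickme-button", clickme);
}
```

Because module scripts are deferred, the button should already exist by the time this runs. The return value makes it easy to share one script between pages where the button may or may not be present.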

Note: when you add a TypeScript file to a Visual Studio 2022 project you get a message inviting you to install a NuGet package:

The TypeScript will still get compiled by Visual Studio, with or without this package. However, without it the TypeScript will not be compiled when you build from the .NET command line (dotnet build and so on).

Minification

Minifying the JavaScript is pretty easy. For the time being I am just running terser in a script called by a post-build event. I am deploying to a Linux Azure app service using Azure DevOps Pipelines and have had to work around the issue that build events do not seem to handle the cross-platform scenario very well. Visual Studio also does not provide much of an editor for build events in ASP.NET Core projects, but it is working.
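As an illustration only, the sketch below builds the kind of command lines such a script might run. It is not necessarily the script used here. It assumes terser is installed and on the PATH, that the compiled files live under wwwroot/js as in the tsconfig.json above, and that each file is overwritten in place so that import paths in other modules remain valid. The terserArgs helper and the shared.js file name are hypothetical.

```typescript
// Build the argument list for one in-place terser run (assumed workflow).
function terserArgs(file: string): string[] {
  return [
    file,             // input file comes first
    "--module",       // tell terser the input is an ES module
    "--compress",
    "--mangle",
    "--output", file, // overwrite the compiled file in place
  ];
}

// Hypothetical list of compiled module files under the outDir.
const compiled = ["wwwroot/js/demo.js", "wwwroot/js/shared.js"];

// One command line per file, ready to paste into a build script.
const commands = compiled.map((file) => ["terser", ...terserArgs(file)].join(" "));
// commands[0] is
// "terser wwwroot/js/demo.js --module --compress --mangle --output wwwroot/js/demo.js"
```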

I hope this proves a better long-term solution for me than WebPack.