I’m just back from QCon London, a software development conference with an agile flavour that I enjoy because it is not vendor-specific. Conferences like this are energising; they make you re-examine what you are doing and may kick you into a better place. Here’s what I noticed this year.

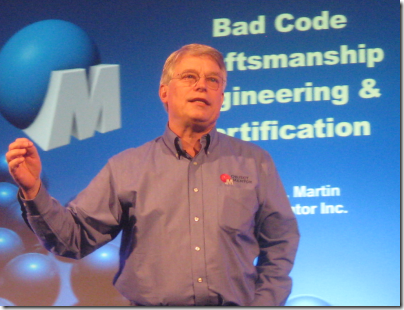

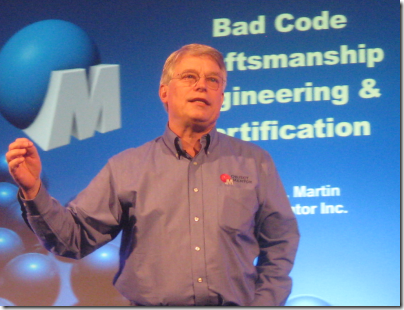

Robert C Martin from Object Mentor gave the opening keynote, on software craftsmanship. His point is that code should not just work; it should be good. He is delightfully opinionated. Certification, he says, provides value only to certification bodies. If you want to know whether someone has the skills you want, talk to them.

Martin also came up with a bunch of tips for how to write good code, things like not having more than two arguments to a function and never a boolean. I’ve written these up elsewhere.

Next I looked into the non-relational database track and heard Geir Magnusson explain why he needed Project Voldemort, a distributed key-value storage system, to get his ecommerce site to scale. Non-relational or NOSQL is a big theme these days; database managers like CouchDB and MongoDB are getting a lot of attention. I would like to have spent more time on this track; but there was too much else on; a problem with QCon.

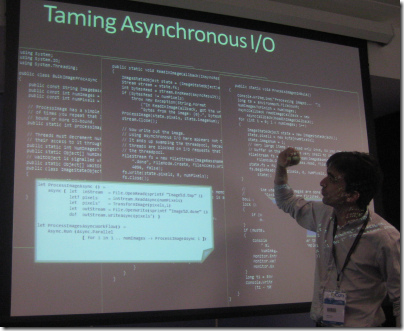

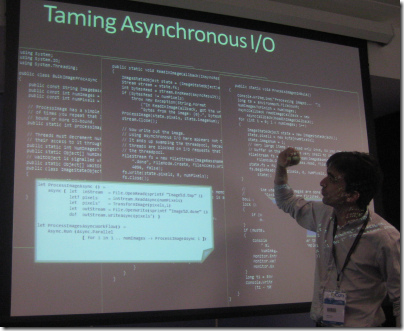

I therefore headed for the functional programming track, where Don Syme from Microsoft Research gave an inspiring talk on F#, Microsoft’s new functional language. He has a series of hilarious slides showing F# code alongside its equivalent in C#. Here is an example:

The white panel is the F# code; the rest of the slide is C#.

Seeing a slide that this makes you wonder why we use C# at all, though of course Syme has chosen tasks like asychronous IO and concurrent programming for which F# is well suited. Syme also observed that F# is ideal for working with immutable data, which is common in internet programming. I grabbed a copy of Programming F# for further reading.

Over on the Architecture track, Andres Kütt spoke on Five Years as a Skype Architect. His main theme: most of a software architect’s job is communication, not poring over diagrams and devising code structures. This is a consistent theme at QCon and in the Agile movement; get the communication right and all else follows. I was also interested in the technical side though. Skype started with SOAP but switched to a REST model for web services. Kütt also told us about the languages Skype uses: PHP for the web site, C or C++ for heavy lifting and peer-to-peer networking; Delphi for the Windows interface; PostgreSQL for the database.

Day two of QCon was even better. I’ve written up Martin Fowler’s talk on the ethics of software development in a separate post. Following that, I heard Canonical’s Simon Wardley speak about cloud computing. Canonical is making a big push for Ubuntu’s cloud package, available both for private use or hosted on Amazon’s servers; and attendees at the QCon CloudCamp later on were given a lavish, pointless cardboard box with promotional details. To be fair to Wardley though, he did not talk much about Ubuntu’s cloud solution, though he did make the point that open source makes transitions between providers much cheaper.

Wardley’s most striking point, repeated perhaps too many times, is that we have no choice about whether to adopt cloud computing, since we will be too much disadvantaged if we reject it. He says it is now more a management issue than a technical one.

Dan North from ThoughtWorks gave a funny and excellent session on simplicity in architecture. He used pseudo-biblical language to describe the progress of software architecture for distributed systems, finishing with

On the seventh day God created REST

Very good; but his serious point is that the shortest, simplest route to solving a problem is often the best one, and that we constantly make the mistake of using over-generalised solutions which add a counter-productive burden of complexity.

North talked about techniques for lateral thinking, finding solutions from which we are mentally blocked, by chunking up, which means merging details into bigger ideas, ending up with “what is this thing for anyway”; and chunking down, the reverse process, which breaks a problem down into blocks small enough to comprehend. Another idea is to articulate a problem to a colleague, which exercises different parts of the brain and often stimulates a solution – one of the reasons pair programming can be effective.

A common mistake, he said, is to keep using the same old products or systems or architectures because we always do, or because the organisation is already heavily invested in it, meaning that better alternatives do not get considered. He also talked about simple tools: a whiteboard rather than a CASE tool, for example.

Much of North’s talk was a variant of YAGNI – you ain’t gonna need it – an agile principle of not implementing something until/unless you actually need it.

I’d like to put this together with something from later in the day, a talk on cool things in the .NET platform. One of these was Guerrilla SOA, though it is not really specific to .NET. To get the idea, read this blog post by Jim Webber, another from the ThoughtWorks team (yes, there are a lot of them at QCon). Here’s a couple of quotes:

Prior to our first project starting, that client had already undertaken some analysis of their future architecture (which needs scalability of 1 billion transactions per month) using a blue-chip consultancy. The conclusion from that consultancy was to deploy a bus to patch together the existing systems, and everything else would then come together. The upfront cost of the middleware was around £10 million. Not big money in the grand scheme of things, but this £10 million didn’t provide a working solution, it was just the first step in the process that would some day, perhaps, deliver value back to the business, with little empirical data to back up that assertion.

My (small) team … took the time to understand how to incrementally alter the enterprise architecture to release value early, and we proposed doing this using commodity HTTP servers at £0 cost for middleware. Importantly we backed up our architectural approach with numbers: we measured the throughput and latency characteristics of a representative spike (a piece of code used to answer a question) through our high level design, and showed that both HTTP and our chosen Web server were suitable for the volumes of traffic that the system would have to support … We performance tested the solution every single day to ensure that we would always be able to meet the SLAs imposed on us by the business. We were able to do that because we were not tightly coupled to some overarching middleware, and as a consequence we delivered our first service quickly and had great confidence in its ability to handle large loads. With middleware in the mix, we wouldn’t have been so successful at rapidly validating our service’s performance. Our performance testing would have been hampered by intricate installations, licensing, ops and admin, difficulties in starting from a clean state, to name but a few issues … The last I heard a few weeks back, the system as a whole was dealing with several hundred percent more transactions per second than before we started. But what’s particularly interesting, coming back to the cost of people versus cost of middleware argument, is this: we spent nothing on middleware. Instead we spent around £1 million on people, which compares favourably to the £10 million up front gamble originally proposed.

This strikes me as an example of the kind of approach North advocates.

You may be wondering what other cool .NET things were presented. This session was called the State of the Art .NET, given by Amanda Laucher and Josh Graham. They offer a dozen items which they considered .NET folk should be using or learning about:

- F# (again)

- M – modelling/DSL language

- Boo – static Python for .NET

- NUnit – unit testing. Little regard for Microsoft’s test framework in Team System, which is seen as a wasted and inferior effort.

- RhinoMocks – mocking library

- Moq – another mocking library

- NHibernate – object-relational mapping

- Windsor – dependency injection, part of Castle project. Controversial; some attendees thought it too complex.

- NVelocity – .NET template engine

- Guerrilla SOA – see above

- Azure – Microsoft’s cloud platform – surprisingly good thanks to David Cutler’s involvement, we were told

- MEF – Managed Extensibility Framework as found in Visual Studio 2010, won high praise from those who have tried it

That was my last session (I missed Friday) though I did attend the first part of CloudCamp, an unconference for cloud early adopters. I am not sure there is much point in these now. The cloud is no longer subversive and the next new thing; all the big enterprise vendors are onto it. Look at the CloudCamp sponsor list if you doubt me. There are of course still plenty of issues to talk about, but maybe not like this; I stayed for the first hour but it was dull.

For more on QCon you might also want to read back through my Twitter feed or search the entire #qcon tag for what everyone else thought.