I’ve been trying Microsoft’s ADConnect tool, the replacement for the utility called DirSync, which synchronises on-premises Active Directory with Azure AD, the directory used by Office 365.

It is therefore a key piece in Microsoft’s hybrid cloud story.

In my case I have a small office set-up with Active Directory running on Server 2012 R2 VMs. I also have an Office 365 tenant that I use for testing Microsoft’s latest cloud stuff. I have long had a few basic questions about how the sync works so I created a small Server 2012 R2 VM on which to install it.

ADConnect can be installed on a Domain Controller, though this used to be unsupported for DirSync. However, it seems tidier to give ADConnect its own server, and less likely to cause problems.

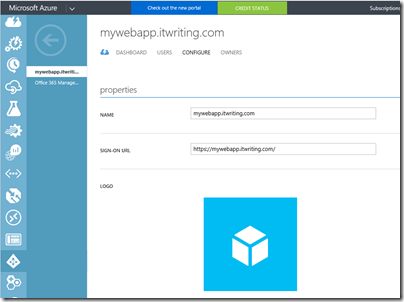

There are a number of pre-requisites but for me the only one that mattered was that your domain must be set up on the Office 365 tenant before you configure ADConnect. You cannot configure it using the default *.onmicrosoft.com domain.

Adding a domain to Office 365 is straightforward, provided you have access to the DNS records for the domain, and provided that the domain is not already linked to another Office 365 tenant. This last point can be problematic. For example, BT uses Office 365 to provide business email services to its customers. If you want to migrate from BT to your own Office 365, detaching the domain from BT’s tenant, to which you do not have admin access, is a hassle.

When I tried to set up my domain, I found another problem. At some point I must have signed up for a trial of Power BI, and without my realising it, this created an Office 365 tenant. I could not progress until I worked out how to get admin access to this Power BI tenant and assign my user account a different primary email address. The best way to discover such problems is to attempt to add the domain and note any error messages, while resisting the wizard’s efforts to get you to set up your domain in a different tenant from the one that you want.

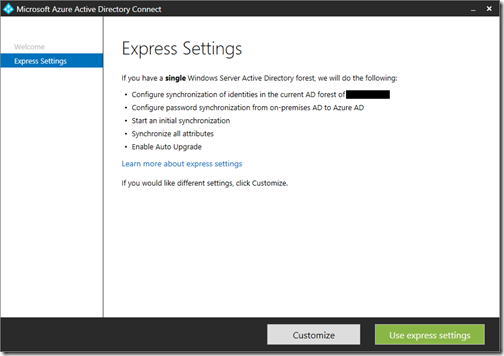

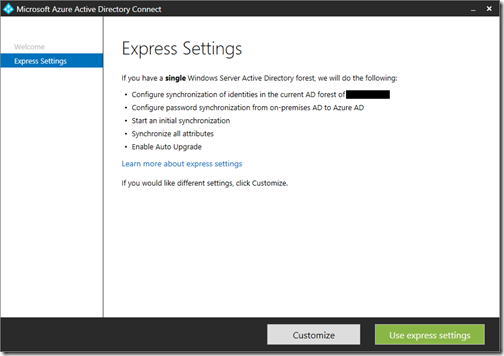

That done, I ran the setup for ADConnect. If you use the Express settings, it is straightforward. It requires SQL Server, but installs its own instance of SQL Server Express LocalDB by default.

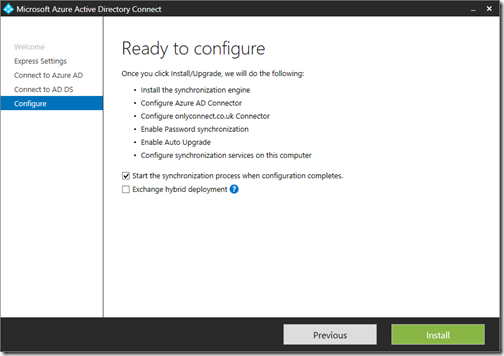

You enter credentials for your Office 365 tenant and for your on-premises AD, then the wizard tells you what it will do.

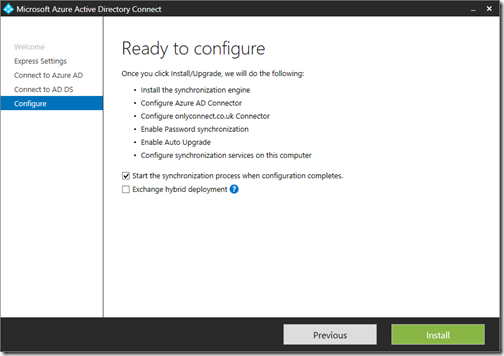

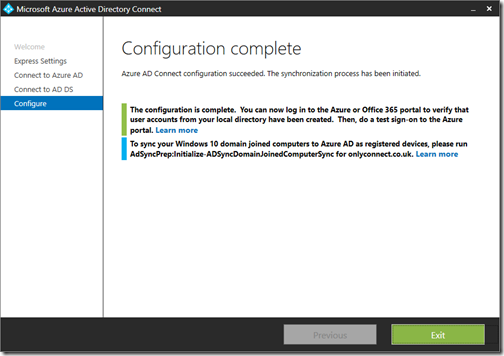

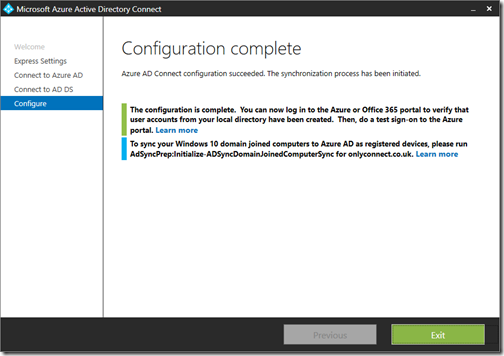

I was interested in the link on the next screen, which describes how to get all your Windows 10 domain-joined computers automatically “registered” to Azure AD, enabling smoother integration.

If you follow the link, and read the comments, you may be put off; I was. It involves configuring Active Directory Federation Services as well as Group Policy and looks fiddly. I suspect this is worth doing though, and hope that configuration will be more automated in due course.

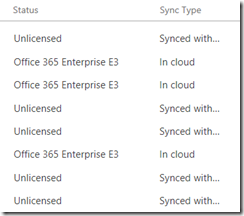

The next step was to look at the outcome. One thing that is important to understand is that synced users are distinct from other Office 365 users. Imagine, then, that you have existing users in Office 365 and you want to match them with existing on-premises users, rather than creating new ones. This should work if ADConnect can match the primary email address: it will convert the matching Azure AD user into a synced user. Otherwise, it will just create new users, even if there are existing Azure AD users with the same names. If it goes wrong, there are ways to recover. Note that the users are not actually linked via the email address; once matched, they are linked by an attribute called the ImmutableID.
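As far as I understand it, the ImmutableID is normally just the Base64-encoded objectGUID of the on-premises account, since that is the default source anchor in the versions I know (newer installs may use a different attribute). Here is a minimal sketch of how you might compute it for comparison, assuming the ActiveDirectory PowerShell module is available; the account name `jsmith` is hypothetical:

```powershell
# Sketch only: 'jsmith' is a hypothetical account name.
# Requires the ActiveDirectory module (RSAT tools or a Domain Controller).
Import-Module ActiveDirectory

# Get-ADUser returns ObjectGUID in its default property set.
$user = Get-ADUser -Identity 'jsmith'

# Base64-encode the raw GUID bytes. This assumes objectGUID is the
# source anchor for your installation.
$immutableId = [System.Convert]::ToBase64String($user.ObjectGUID.ToByteArray())
$immutableId
```

If the value printed here matches the ImmutableID on the Azure AD user, the two accounts are linked.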

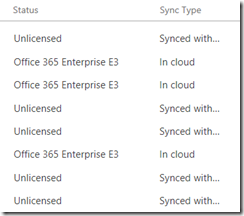

The Office 365 admin portal is fully aware of synced users and the user list shows the distinction. Users are designated as “In Cloud” or “Synced with Active Directory”.
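The same distinction can be seen from PowerShell. This is a rough sketch using the older MSOnline module, which I am assuming is installed and still usable against your tenant; it may not be on current systems:

```powershell
# Requires the MSOnline module; prompts for tenant admin credentials.
Connect-MsolService

# Synced users carry an ImmutableId and a LastDirSyncTime.
# Cloud-only users typically have both blank.
Get-MsolUser -All |
    Select-Object UserPrincipalName, ImmutableId, LastDirSyncTime |
    Sort-Object UserPrincipalName
```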

Synced users cannot be deleted from the Office 365 portal. You delete them in on-premises AD and they disappear.

The next obvious issue is that if you dive in like me and just install ADConnect with Express Settings, you will get all your on-premises users and groups in Azure AD. In my case I have things like “ASP.NET Machine Account”, various IUSR* accounts, users created by various applications, and groups like “DHCP Administrators” and “Exchange Trusted Subsystem” that do not belong in Office 365.

These accounts do not do much harm; they do not consume licenses or mess up Office 365. On the other hand, they are annoying and confusing. You may also have business reasons to exclude some users from synchronization.

Fortunately, there are various ways to fine-tune, both before and after initial synchronization. You can read about it here. This document also states:

“With filtering, you can control which objects should appear in Azure AD from your on-premises directory. The default configuration takes all objects in all domains in the configured forests. In general, this is the recommended configuration.”

I find this puzzling, in that I cannot see the benefit in having irrelevant service accounts and groups synced to Office 365 – though it is not entirely obvious what is safe to exclude.
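Before deciding what to filter, it may help to list what is actually in the directory. This is a sketch, again assuming the ActiveDirectory module; treating "enabled account with no mail attribute" as a hint that something is a service or application account is my own rule of thumb, not anything ADConnect does:

```powershell
Import-Module ActiveDirectory

# Enabled accounts with no mail attribute: often service or application
# accounts that do not belong in Office 365.
Get-ADUser -Filter 'Enabled -eq $true' -Properties mail |
    Where-Object { -not $_.mail } |
    Select-Object SamAccountName, DistinguishedName

# All groups, with their location, to see which OUs they live in.
Get-ADGroup -Filter * |
    Select-Object Name, GroupCategory, DistinguishedName
```

The DistinguishedName column shows which OU or container each object sits in, which is what the Domain and OU filtering option works with.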

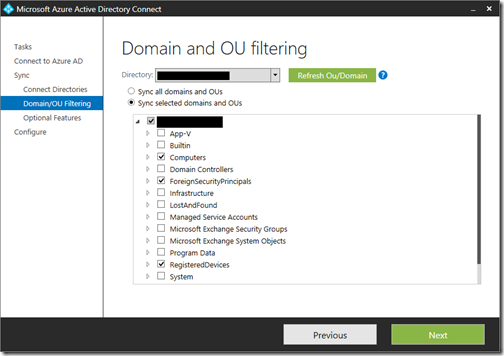

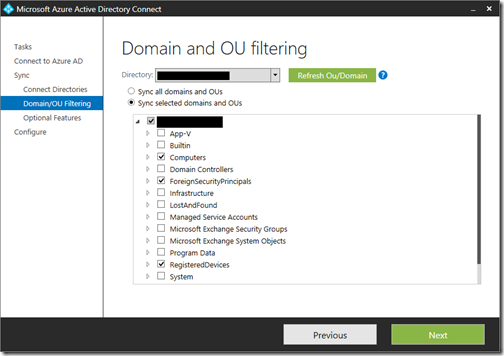

I went back to the ADConnect tool and reconfigured, using the Domain and OU filtering option. This time, I selected what seems to be a minimal configuration.

The excluded objects are meant to be deleted from Office 365, but so far they have not been. I am not sure if this will fix itself. (Update: it did, though I also re-ran a full initial sync to help it along.) If it does not, you can temporarily disable sync, manually delete them in the Office 365 portal, then re-enable sync.

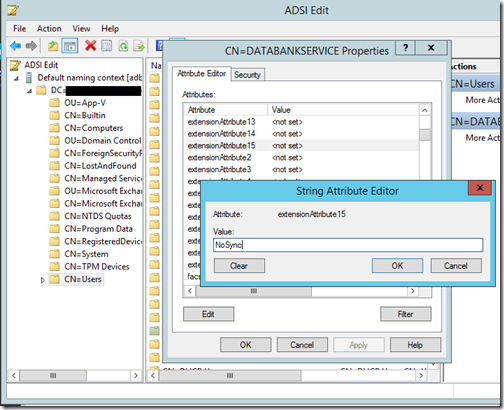

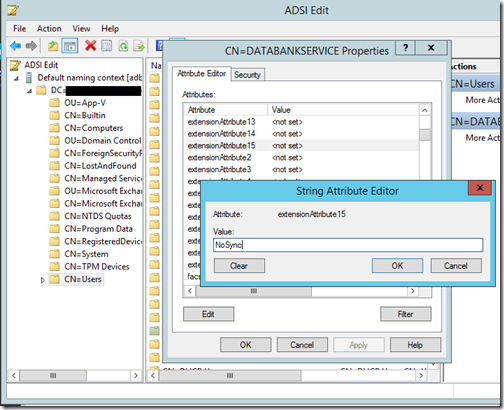

What if you want to exclude a specific user? I used the steps described to create a DoNotSync filter based on setting extensionAttribute15. You use the ADConnect Synchronization Rules Editor to create the rule, then set the attribute using ADSIEdit or your favourite tool. This worked, and the user I marked disappeared from Office 365 on the next sync.
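If you prefer PowerShell to ADSIEdit for setting the attribute, something like the following should do it. This is a sketch with assumptions: `jsmith` is a hypothetical account, and the value `NoSync` is only an example, so use whatever value your own sync rule tests for. Note that extensionAttribute15 only exists if the Exchange schema extensions are present in your forest.

```powershell
Import-Module ActiveDirectory

# 'jsmith' and 'NoSync' are illustrative; match the value to your sync rule.
Set-ADUser -Identity 'jsmith' -Replace @{ extensionAttribute15 = 'NoSync' }

# Confirm the attribute was written.
Get-ADUser -Identity 'jsmith' -Properties extensionAttribute15 |
    Select-Object SamAccountName, extensionAttribute15
```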

Incidentally, you can trigger an immediate sync using this PowerShell command:

```powershell
Start-ADSyncSyncCycle -PolicyType Delta
```
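For the full sync I mentioned earlier, the same cmdlet takes a different policy type. A short sketch, assuming you are on the ADConnect server itself and that your version includes the built-in scheduler:

```powershell
# Run on the ADConnect server; the ADSync module is installed with the tool.
Import-Module ADSync

# Show the current scheduler settings, including the next scheduled run.
Get-ADSyncScheduler

# Full (initial) sync rather than a delta; slower, but it re-evaluates everything.
Start-ADSyncSyncCycle -PolicyType Initial
```

A full sync is what you want after changing filtering or sync rules; a delta only picks up changes made since the last run.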

Complications

Setting up ADConnect does introduce complexity into Office 365. You can no longer do everything through the portal. It is not only deletion that does not work. When I tried to set up a mailbox in Office 365 I hit this message:

“This user’s on-premises mailbox hasn’t been migrated to Exchange Online. The Exchange Online mailbox will be available after migration is completed.”

I can see the logic behind this, but there might be cases where you want a new empty mailbox; I am sure there is a way around it, but now there is more to go wrong.

Update: there is a rather important lesson hiding here. If you are running Exchange on-premises and want to end up on Office 365 with ADConnect, you must take care about the order of events. Once ADConnect is running, you cannot do a cutover migration of Exchange, only a hybrid migration. If you don’t want hybrid (which adds complexity), then do the cutover migration first. Convert the on-premises mailboxes to mail-enabled users. Then run ADConnect, which will match the users based on the primary email address.

It is also obvious that ADConnect is designed for large organisations and for administrators who know their way around Active Directory. There is a simplified sync tool in Windows Server Essentials, though I have not used it. It would be good though to see something between Essentials and the complexity of ADConnect. For example, I had imagined that there might be a mapping tool that would let you see how ADConnect intends to match on-premises users with Office 365 users and let you amend and exclude users with a few clicks.

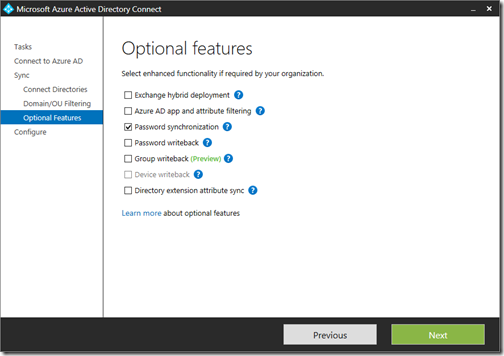

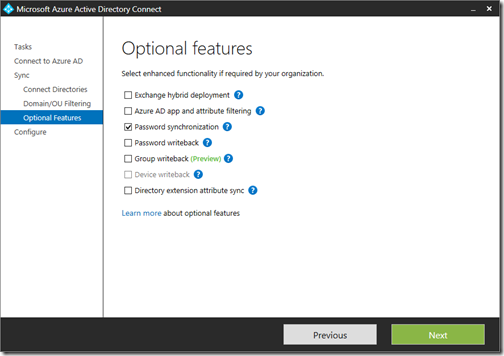

Microsoft has been working on this stuff for some time and is not done yet. In preview for example is Group Writeback, which lets you sync Office 365 groups back to on-premises AD.

Microsoft might also consider using different icons for the various ADConnect utilities, as they do look a bit silly if you pin them to the taskbar:

The tools are:

- Azure ADConnect (Wizard)

- Synchronization Rules Editor (advanced filtering)

- Synchronization Service WebService Connector Config (SOAP stuff)

- Synchronization Service Key Management (what it says)

On the plus side, I have not hit any mysterious Active Directory errors and it has all worked without having to set up certificates, reverse proxies, special DNS entries (other than the standard ones for Office 365), or anything too fiddly, though note that I avoided ADFS and automatic Windows 10 registration.

Final thoughts

If you need to implement this, it is worth doing what I did and trying it out on a test domain first. There seem to be quite a few pitfalls, and as ever, it is easier to get it right at the start than to fix things up afterwards.