PowerShell, Microsoft’s scripting platform, is significant for several reasons. It is critical to Microsoft’s strategy of reducing dependence on a GUI in Windows Server. It is also a key piece in automating IT administration, which is fundamental to business agility.

The platform was invented by Microsoft’s Jeffrey Snover, now Technical Fellow and Lead Architect for the Enterprise Cloud Group. The evolution of PowerShell, guided by Snover, has gone hand in hand with the company’s broader strategy for Windows and Azure.

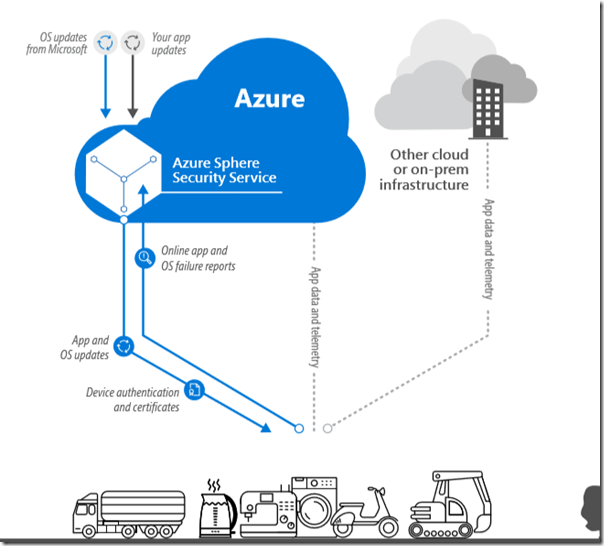

PowerShell is everywhere in Azure Stack, Microsoft’s packaged version of Azure for running on-premises, and presumably in the online version of Azure as well. Creating a VM involves an “average 472 cmdlet calls”, according to Snover’s keynote at the recent PowerShell Conference Europe in Hanover.

The PowerShell team is now apparently part of the Azure Management Team within Microsoft.

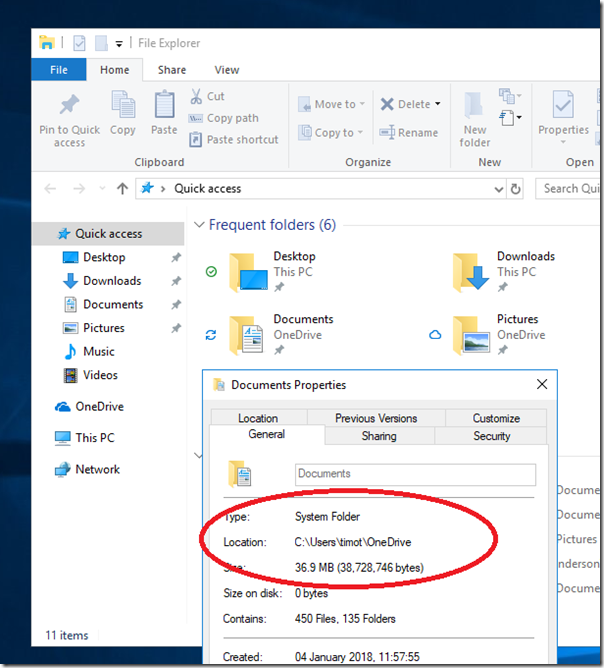

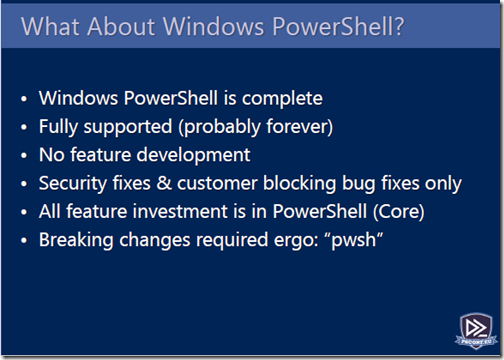

What is happening with PowerShell? The main thing to understand is that Microsoft has forked the platform. Windows PowerShell is the Windows-only version, while PowerShell Core is cross-platform, running on Windows, Mac and Linux. Windows PowerShell is based on the .NET Framework, while PowerShell Core is based on .NET Core.

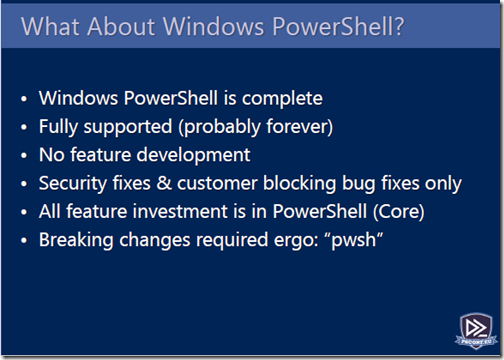

The situation is odd: Windows PowerShell is the version installed by default in Windows Server and the one most people use, but PowerShell Core is the one under active development. This is explained here. Snover emphasised in his keynote that Windows PowerShell is done.

Note, though, that PowerShell is modular, and although the Windows PowerShell engine is no longer being developed, new or enhanced modules will still appear. In fact, they are likely to run on both Windows PowerShell and PowerShell Core. As with all forks, there will be some pain in weighing compatibility against the latest features as the Core platform evolves.

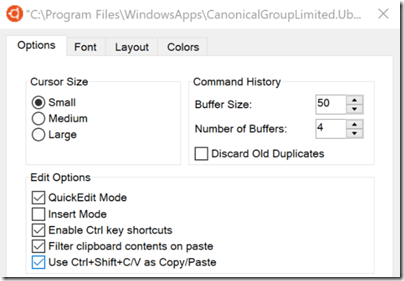

If you want to try PowerShell Core, you can download it here. However, it is of limited use for day-to-day work unless you also install and activate a module called WindowsPSModulePath, which you can get from the PowerShell Gallery. This lets you use your existing Windows PowerShell modules, subject of course to compatibility.

So what is next for PowerShell? Snover’s ambition for the platform, he said, is to manage any server or service, from any client, running on any cloud (or on-premises, on any hypervisor).

Much of what is interesting lies not so much in new features in PowerShell itself as in additional modules and other utilities.

PSSwagger is helpful for creating modules: it generates a PowerShell module from a Swagger API specification (Swagger, now known as the OpenAPI Specification, is a popular standard for describing RESTful APIs).

CloudShell is a command shell for Azure which you can run from a web browser.

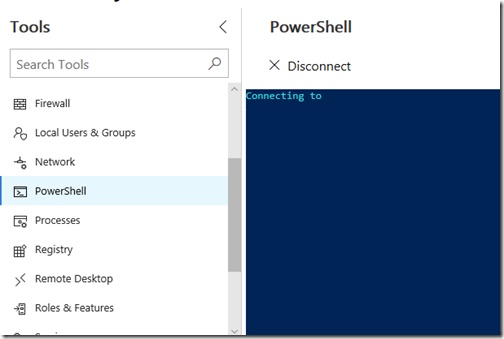

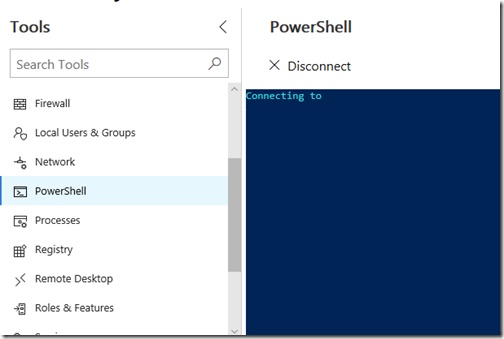

Windows Admin Center, formerly known as Project Honolulu, is a browser application for managing servers (and some desktops). You can open a PowerShell session directly from the browser, and much of its other functionality is built on PowerShell too.

As for Microsoft’s plans for PowerShell Core, Snover refers to this site, which sets out the company’s strategic investments. These include improvements to the help system; a GUI framework for the console, perhaps along the lines of curses on Linux; a mechanism for PowerShell to prompt you to install a module (as in Bash) when a command is not found but a module containing that command is known to exist; and Just Enough Administration (JEA) on Linux.

At the PowerShell conference, Principal Software Engineer Steve Lee talked about the PowerShell Standard Library, which itself targets .NET Standard 2.0 and which module authors can build against so that their modules work cross-platform.

PowerShell and Microsoft’s platform

One of the intriguing things about Microsoft’s evolution is its embrace of both Linux and cross-platform development. PowerShell is one small part of this, but it fits that strategy. We should no longer think of Microsoft’s platform as based on Windows, even though of course it mostly runs on Windows today. The OS is becoming less important as the company focuses on services and applications.

The further implication is that cross-platform support is not just a nice-to-have feature for pieces like .NET Core and PowerShell Core, but essential for Microsoft itself as it integrates multiple operating systems in its cloud platform.

While we tend to applaud cross-platform support, it is not without pain. PowerShell is a case in point: Windows PowerShell is at once the version in everyday use and the version that is no longer evolving.

PowerShell and IT admins

PowerShell is an essential skill for Windows IT admins. On Office 365, for example, there always seem to be things you can do in PowerShell that you cannot easily do through the GUI, and even where you can, it often pays to use PowerShell because you can script and automate common operations. The same is true for Azure.

Not everyone loves PowerShell as a language. Some complain of its verbosity, and it can be prickly to work with. It is not at all English-like, which makes it less accessible to beginners than most scripting languages.

It is however well suited to its purpose, which is what counts.