ML (Machine Learning) and AI (Artificial Intelligence) are all the rage and changing the world, but what are they?

I was asked this recently which made me realise that it is not obvious. So I will have a go at a quick explanation, based on my own perception of what is going on. I am not a data scientist so this will be a high-level take.

I will start with ML. The ingredients of ML are:

1. Data

2. Pattern recognition algorithms

Imagine that you want to identify pictures that contain images of people. The data is lots of images. What you want is an algorithm that automatically detects which images contain people. Rather than trying to code this on your own, you give the ML system a quantity of images that do contain people, and a quantity of images that do not. This is the training process. Once trained, the ML system will predict, when shown an image, whether or not it contains people. If your training has been successful, it will have a high success rate.

The combination of the algorithm and the parameters (these being the characteristics you want to identify) is called a model. There are many types of model and a number of different ML systems from open source (eg TensorFlow) to big brands like Amazon Machine Learning, Azure Machine Learning, and Google Machine Learning.

So what is AI? This is a more generic term, so we can say that ML is a form of AI. IBM describes its Watson service as AI – Watson is really a bunch of different services so that makes sense.

A quick way to think of AI is that it answers questions. Is this customer a good credit risk? Is this component good or faulty? Who is the person in this picture?

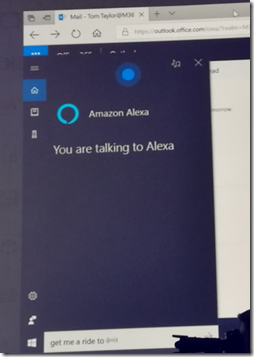

Another common form of AI is a chatbot or conversational UI. The key task here is artificial language understanding, possibly accompanied by speech to text transcription if you want voice input, and then a back-end service that will generate a response to what it things the language input means. I coded a simple one myself using Microsoft’s Bot Framework and LUIS (Language Understanding Intelligent Service). My bot just performed searches, so if you wrote or said “tell me about x”, it would do a search for x and return the results. Not much use; but you can see how the concept can work for things like travel bookings and customer service. The best chatbots understand context and remember previous conversations, and when combined with personal information like location and preferences, they can get a lot of things right by conjecture. “Get me a taxi” – “Is that to Charing Cross station as usual?”

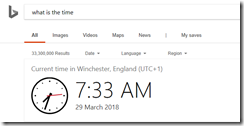

Internet search has morphed into a kind of AI. If you type “What is the time?”, it comes up on the screen.

The more the search engines personalise the search results, the more assumptions they can make. In the example above, Bing has used what it thinks is my location to give me the time where I am.

AI can also take decisions. A self-driving car, like a human driver, takes decisions constantly, whether to stop, go, what speed, turn this way or that. It uses sensors and pattern recognition, as well as its programmed route, to make those decisions.

Why does AI matter? It feels like early days; but there are obvious commercial applications, now that using ML and AI is within reach of any developer.

Marketers and advertisers are enthusiastic because they love targeting – and consumers often prefer more relevant advertising (though they might prefer less advertising even more). Personalisation is the key, and as mentioned above, ML and AI are good at answering questions. The more data, the more personal the targeting. How much does this person earn? Male or female? Where are they? Single or in a relationship? Do they have children? Even answering these (and many more) questions somewhat inaccurately will greatly increase the ability of marketers to offer the right product or service at the right moment.

Of course there are privacy questions around this. There are other questions too. What about the commercial advantage this gives to those few entities that hold huge volumes of personal data, such as Google and Facebook? What about when showing people “more relevant content” becomes a threat to democracy, because individuals get a distorted view of the world, seen through a tunnel formed by their own preference to avoid competing views? Society is only just beginning to grasp the implications.

Another key area is automation. Amazon made a splash by opening a store where you do not have to check out: object recognition detects what you buy and charges your account automatically. Fewer staff needed, and more convenient for shoppers.

Detecting faulty goods on a production line is another common use. Instead of a human inspecting goods for flaws, AI can identify a high percentage of problems automatically. It may be just a case of recognizing patterns in images, as discussed above.

AI can go wrong though. An example was mentioned at an event I attended recently. I cannot vouch for the truth of the story, but it is kind-of plausible. The task was to help the military detect tanks hidden in trees. They took photos of trees with hidden tanks, and trees without hidden tanks, and used them for machine learning. The results were abysmal. The reason: all the photos which included tanks were taken on an overcast day, and those without tanks on a clear day. So the ML decided that tanks only hide on cloudy days.

ML is prone to this kind of mistake. What similar problems might occur when applied to people? Could ML make inappropriate inferences from characteristics such as beards, certain types of clothing, names, or other things about which we should be neutral? It is quite possible, which is another reason why applications of AI need an ethical framework as well as appropriate regulation. This will not happen smoothly or quickly, and will not be universally implemented, so humanity’s use of ML and AI is something of a social experiment, with potential for both good and bad outcomes.