I have a test setup in my office which runs mostly on Hyper-V. It is a kind of home-brew small business server, with Exchange, ISA and SharePoint all running on separate VMs. I’ve followed Microsoft’s advice and kept Active Directory on a separate physical server. Until today, Hyper-V itself was running on Server 2008.

I’m reviewing Hyper-V Server 2008 R2, so I figured it would be interesting to migrate the VMs. I attached an external USB drive, shut down the VMs and exported them. Next, I verified that there was nothing else I needed to preserve on that machine, and set about installing Hyper-V Server 2008 R2 from scratch.

Aside: when I first set this up I broke the rules by having Active Directory on the Hyper-V host. That worked well enough in my small setup; but I realised that you lose some of the benefit of virtualisation if you have anything of value on the host, so I moved Active Directory to a separate box.

I wish I could tell you that the migration went smoothly. Actually, from the Hyper-V perspective it did go smoothly. However, I had an ordeal with my server, a cheapie HP ML110 G5. The driver for the embedded Adaptec SATA RAID did not work with Hyper-V Server 2008 R2, and I couldn’t find an update, so I disabled the RAID. The driver for my second network card didn’t work either, and I had to replace the card. Finally, my efforts at updating the BIOS landed me with a known problem on this server: the fans stay at maximum speed and deafening volume. Fortunately I found this thread which gives a fix: installing upgraded firmware for HP’s Lights-Out Remote Management as well. Blissful (near) silence.

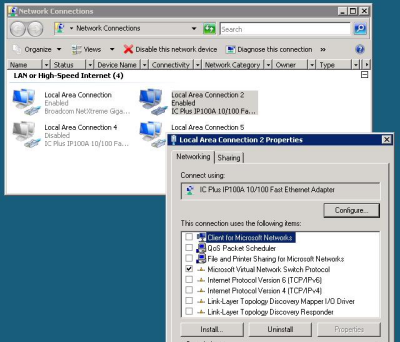

Once I’d got the operating system installed successfully, bringing the VMs back online was a snap. I used the console menu to join the machine to the domain, set up remote management, and configure the network cards. Next, I copied the exported VMs to the new server, imported them using Hyper-V Manager running on Windows 7, and shortly afterwards everything was up and running again. I did get a warning logged about the integration services being out of date, but these were easy to upgrade. I’m hoping to see some performance benefit, since my .vhd virtual drives are dynamic, and these are meant to be much faster in the R2 update.
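One cautious extra, not part of the steps above: before importing, you could check that the copy of the export folder matches what is on the USB drive. The following is only a minimal sketch in Python, assuming Python is available on whichever machine can see both folders. The function names `file_digest` and `compare_trees` are made up for illustration and are not part of any Hyper-V tooling.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks
    so that large .vhd files are never loaded into memory whole."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(source, copy):
    """Return a list of problems found in the copy: files that are
    missing, or files whose contents differ from the source."""
    source, copy = Path(source), Path(copy)
    problems = []
    for src_file in sorted(p for p in source.rglob("*") if p.is_file()):
        relative = src_file.relative_to(source)
        dst_file = copy / relative
        if not dst_file.is_file():
            problems.append(f"missing: {relative.as_posix()}")
        elif file_digest(src_file) != file_digest(dst_file):
            problems.append(f"differs: {relative.as_posix()}")
    return problems
```

Calling `compare_trees` with the export folder on the USB drive and the copied folder on the new server (both paths are whatever you chose) returns an empty list when every file arrived intact. It only looks for files present in the source, so stray extra files in the copy are not reported.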

Although I’m impressed with Hyper-V itself, some aspects of Hyper-V Server 2008 R2 are lacking. Mostly this is to do with Server Core. Shipping a cut-down server OS without a GUI is a great idea in itself, but Microsoft needs to make it either easy to manage from the command line or easy to hook up to remote tools. Neither is the case. If you want to manage Hyper-V from the command line you need this semi-official management library, which seems to be the personal project of technical evangelist James O’Neill. Great work, but you would have thought it would be built into the product.

As for remote tools, the tools themselves exist, but getting the permissions right is such an arcane process that another dedicated Microsoft individual, program manager John Howard, wrote a script to make it possible for humans. It is not so bad with domain-joined hosts like mine, but even then I’ve had strange errors. I haven’t managed to get Device Manager working remotely yet – “Access denied” – and sometimes I get a Kerberos error, “network path not found”.

Fortunately there’s only occasional need to access the host once it is up and running; it seems very stable and I doubt it will require much attention.