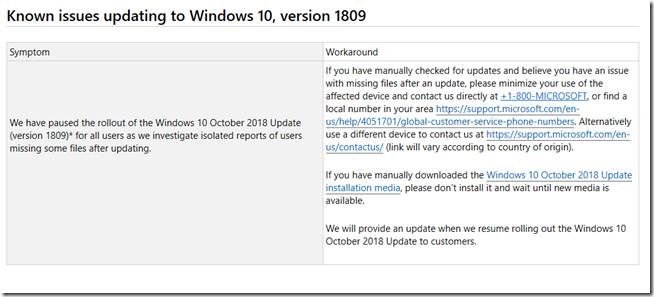

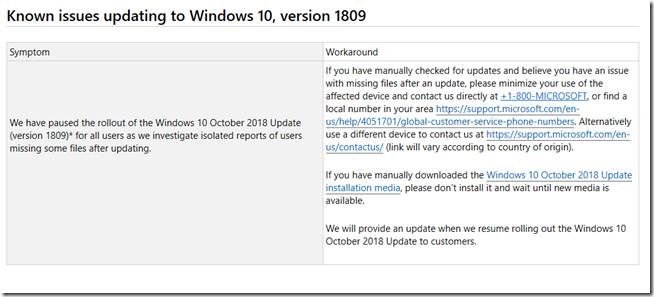

Microsoft has paused the rollout of the October 2018 Windows update for Windows 10 while it investigates reports of users losing data after the upgrade.

Update: Microsoft’s “known issues” now asks affected users to “minimize your use of the affected device”, suggesting that file recovery tools are needed to restore documents, with uncertain results.

Windows 10, first released in July 2015, was the advent of “Windows as a service.” It was a profound change. The idea is that whether in business or at home, Windows simply updates itself from time to time, so that you always have a secure and up to date operating system. Sometimes new features arrive. Occasionally features are removed.

Windows as a service was not just for the benefit of us, the users. It is vital to Microsoft in its push to keep Windows competitive with other operating systems, particularly as it faces competition from increasingly powerful mobile operating systems that were built for the modern environment. A two-year or three-year upgrade cycle, combined with the fact that many do not bother to upgrade, is too slow.

Note that automatic upgrade is not controversial on Android, iOS or Chrome OS. Some iOS users on older devices have complained of performance problems, but in general there are more complaints about devices not getting upgraded, for example because of Android operators or vendors not wanting the bother.

Windows as a service has been controversial though. Admins have worried about the extra work of testing applications. There is a Long Term Servicing Channel, which behaves more like the old 2-3 year upgrade cycle, but it is not intended for general use, even in business. It is meant for single-purpose PCs such as those controlling factory equipment, or embedded into cash machines.

Another issue has been the inconvenience of updates. “Restart now” is not something you want to see just before giving a presentation, or working on it at the last minute, for example. Auto-restart occasionally loses work if you have not saved documents.

The biggest worry though is the update going wrong, for example causing a PC to become unusable. In general this is rare. Updates do fail, but Windows simply rolls back to the previous version, which is annoying but not fatal.

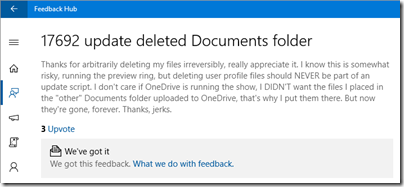

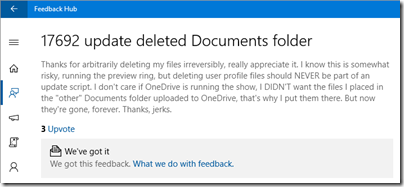

What about deleting data? Again it is rare; but in this case recovery is not simple. You are in the realm of disk recovery tools, if you do not have a backup. However it turns out that users have reported updates deleting data for some time. Here is one from 4 months ago:

Why is the update deleting data? It is not yet clear, and there may be multiple reasons, but many of the reports I have seen refer to user documents stored outside the default location (C:\users\[USERNAME]\). Some users with problems have multiple folders called Documents. Some have moved the location the proper way (Location tab in properties of special folders like Documents, Downloads, Music, Pictures) and still had problems.

Look through miglog.xml though (here is how to find it) and you will find lots of efforts to make sense of the user’s special folder layout. This is not my detailed diagnosis of the issue, just an observation having ploughed through long threads on Reddit and elsewhere; of course these threads are full of noise.

Here is an example of a user who suffered the problem and had an unusual setup: the location of his special folders had been moved (before the upgrade) to an external drive, but there was still important data in the old locations.

We await the official report with interest. But what can we conclude, other than to take backups (which we knew already)?

Two things. One is that Microsoft needs to do a better job of prioritising feedback from its Insider hub. Losing data is a critical issue. The feedback hub, like the forums, is full of noise; but it is possible to identify critical issues there.

This is related of course to the suspicion that Microsoft is now too reliant on unpaid enthusiast testers, at the expense of thorough internal testing. Both are needed and both, I am sure, exist. What though is the proportion, and has internal testing been reduced on the basis of these widespread public betas?

The second thing is about priorities. There is a constant frustration that vendors (and Microsoft is not alone) pay too much attention to cosmetics and new features, and not enough to quality and fixing long-standing bugs and annoyances.

What do most users do after Windows upgrades? They are grateful that Windows is up and running again, and go back to working in Word and Excel. They do not care about cosmetic changes or new features they are unlikely to use. They do care about reliability. Such users are not wrong. They deserve better than to find documents missing.

One final note. Microsoft released Windows 10 1809 on 2nd October. However the initial rollout was said to be restricted to users who manually checked Windows Update or used the Update Assistant. Microsoft said that automatic rollout would not begin until 9th October. In my case though, on one PC, I got the update automatically (no manual check, no Insider Build setting) on 3rd October. I have seen similar reports from others. I got the update on an HP PC less than a year old, and my guess is that this is the reason:

With the October 2018 Update, we are expanding our use of machine learning and intelligently selecting devices that our data and feedback predict will have a smooth update experience.

In other words, my PC was automatically selected to give Microsoft data on upgrades expected to go smoothly. I am guessing though. I am sure I did not trigger the update myself, since I was away all day on the 2nd October, and buried in work on the 3rd when the update arrived (I switched to a laptop while it updated). I did not lose data, even though I do have a redirected Documents folder. I did see one anomaly: my desktop background was changed from blue to black, and I had to change it back manually.

What should you do if you have this problem and do not have backups? Microsoft asks you to call support. As far as I can tell, the files really are deleted so there will not be an easy route to recovery. The best chance is to use the PC as little as possible; do a low-level copy of the hard drive if you can. Shadow Copy Explorer may help. Another nice tool is Zero Assumption Recovery. What you recover is dependent on whether files have been overwritten by other files or not.

Update: Microsoft has posted an explanation of why the data loss occurred. It’s complicated and all to do with folder redirection (with a dash of OneDrive sync). It affected some users who redirected “known folders” like Documents to another location. The April 2018 update created spurious empty folders for some of these users. The October 2018 update therefore sought to delete them, but in doing so also deleted non-empty folders. It still looks like a bad bug to me: these were legitimate folders for storing user data and should not have been removed if not empty.
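To illustrate the point about non-empty folders, here is a minimal Python sketch of the kind of guard I would expect before any clean-up of a duplicate folder. It is not Microsoft's code and says nothing about how Windows Setup actually works; the function name `remove_if_empty` and the folder names in the usage note are hypothetical, chosen only for illustration.

```python
import os
from pathlib import Path


def remove_if_empty(folder: Path) -> bool:
    """Delete `folder` only when it contains nothing at all.

    Returns True if the folder was removed, False if it was left alone
    (because it is missing, is not a directory, or still holds entries).
    """
    if not folder.is_dir():
        return False

    # Any entry at all (file, subfolder, link) means the folder may
    # hold user data, so leave it untouched.
    with os.scandir(folder) as entries:
        if any(entries):
            return False

    # Path.rmdir() itself refuses to remove a non-empty directory, so a
    # file created between the check and this call raises OSError
    # instead of being silently lost.
    folder.rmdir()
    return True
```

Called on an empty leftover such as a hypothetical `Documents_old`, this removes the folder and returns `True`. Called on a `Documents` folder that still contains a file, it returns `False` and deletes nothing.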

More encouraging is that Microsoft has made some changes to its feedback hub so that users can “provide an indication of impact and severity” when reporting issues. The hope is that Microsoft will find reports of severe bugs more easily and therefore take action.

Updated 8th Oct to remove references to OneDrive Sync and add support notes. Updated 10th Oct with reference to Microsoft’s explanatory post.