NVIDIA CEO Jen-Hsun Huang made a number of announcements at the GPU Technology Conference (GTC) keynote yesterday, including an updated roadmap for both desktop and mobile GPUs.

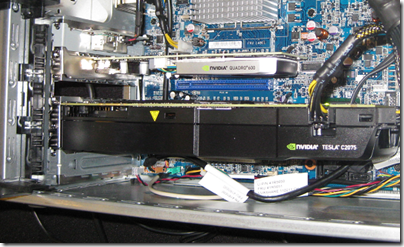

Although the focus of GTC is high-performance computing using Tesla GPU accelerator boards, Huang’s announcements were not limited to that area; they also covered the company’s progress on mobile and on the desktop. Huang opened by mentioning the recently released GeForce Titan graphics processor, which has 2,600 CUDA cores and starts at under £700. That puts it within reach of serious gamers as well as developers who can use it for general-purpose computing. CUDA is what enables the GPU to be used for massively parallel general-purpose computing. NVIDIA is struggling to keep up with demand, said Huang.
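For readers new to CUDA, a kernel is written from the point of view of a single thread, which uses its block and thread indices to choose the element it works on; thousands of such threads then run in parallel. The sketch below emulates that model sequentially in plain Python so it runs without a GPU. `launch_1d` and `saxpy_kernel` are hypothetical names for illustration; a real kernel would be written in CUDA C/C++ and launched with the `<<<grid, block>>>` syntax.

```python
def launch_1d(kernel, grid_dim, block_dim, *args):
    """Sequentially emulate a 1-D CUDA launch on the CPU (illustration only).
    On a GPU every (block, thread) pair below would run concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """y = a*x + y, one element per 'thread'."""
    # Global index, as computed in CUDA C with blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < n:  # guard: the last block may have threads past the end of the arrays
        y[i] = a * x[i] + y[i]


n = 1000
x = [1.0] * n
y = [2.0] * n
block = 256                       # threads per block (a common choice, not a requirement)
grid = (n + block - 1) // block   # round up so every element gets a thread
launch_1d(saxpy_kernel, grid, block, n, 3.0, x, y)
```

Rounding the grid size up means a few threads in the last block have nothing to do, which is why the bounds check inside the kernel matters.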

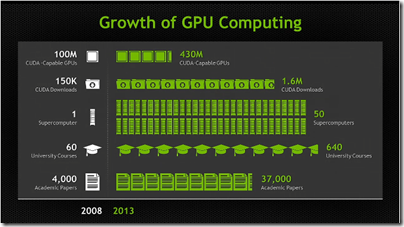

There are now 430 million CUDA-capable GPUs out there, said Huang; CUDA is used in 50 supercomputers and taught in 640 university courses.

He also mentioned last week’s announcement of the Swiss Piz Daint supercomputer, which will include Tesla K20X GPU accelerators and is due to be operational in early 2014.

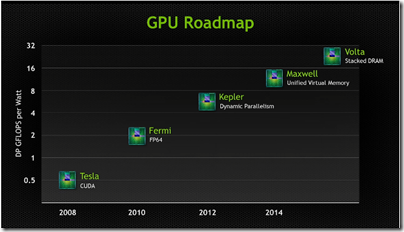

But what is coming next? Here is the latest GPU roadmap:

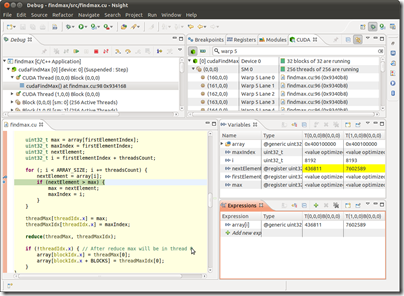

Kepler is the current GPU architecture. It introduced dynamic parallelism: the ability of the GPU to generate new work for itself without handing control back to the CPU.
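To make the difference concrete, here is a toy Python model (not CUDA code) of a workload in which each work item may generate more work, as in adaptive refinement. It counts how many times the CPU has to launch work in each style. The binary-tree workload, `children`, and both driver functions are hypothetical and exist only for illustration.

```python
from typing import List, Tuple

Item = Tuple[int, int]  # hypothetical work item: (depth, index)


def children(item: Item, max_depth: int) -> List[Item]:
    """Each item spawns two sub-items until max_depth is reached."""
    depth, idx = item
    if depth >= max_depth:
        return []
    return [(depth + 1, 2 * idx), (depth + 1, 2 * idx + 1)]


def host_driven(root: Item, max_depth: int) -> Tuple[int, int]:
    """Without dynamic parallelism: the CPU launches one 'kernel' per level and
    must see the generated work before it can launch the next level."""
    host_launches = 0
    processed = 0
    frontier = [root]
    while frontier:
        host_launches += 1            # CPU launches a kernel over the whole frontier
        processed += len(frontier)
        next_frontier: List[Item] = []
        for item in frontier:         # each 'thread' handles one item
            next_frontier.extend(children(item, max_depth))
        frontier = next_frontier      # work handed back to the CPU for the next launch
    return host_launches, processed


def device_driven(root: Item, max_depth: int) -> Tuple[int, int]:
    """With dynamic parallelism: the CPU launches once and each 'thread'
    launches its own child work on the GPU (modelled here as recursion)."""
    processed = 0

    def run(item: Item) -> None:
        nonlocal processed
        processed += 1
        for child in children(item, max_depth):
            run(child)                # child launch issued from the device itself

    run(root)
    return 1, processed


host_result = host_driven((0, 0), max_depth=3)
device_result = device_driven((0, 0), max_depth=3)
```

For a tree three levels below the root, both versions process the same 15 items, but the host-driven loop needs one CPU launch per level (four in total) while the device-driven version needs just one.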

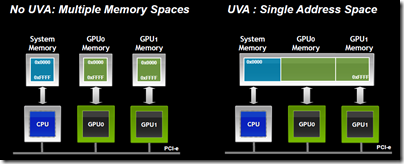

Coming next is Maxwell, which adds unified virtual memory: the GPU can see CPU memory and the CPU can see GPU memory, which makes programming easier. I am not sure how this affects performance, but note that it is unified *virtual* memory, so data still has to be copied between host and device under the covers.
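The point about copying under the covers can be illustrated with a toy model. In the sketch below, `ManagedBuffer` is a hypothetical class, not NVIDIA’s implementation: both sides use the same handle, but the data physically lives on one side at a time and is copied whenever the other side touches it. The transfer counter shows that the copies have not gone away; they have only become implicit.

```python
class ManagedBuffer:
    """Toy model of a buffer shared by CPU ('host') and GPU ('device').
    One handle for both sides, but the data is migrated on access."""

    SIDES = ("host", "device")

    def __init__(self, data):
        self._data = list(data)
        self.location = "host"   # where the data currently resides
        self.copies = 0          # hidden host<->device transfers so far

    def _ensure(self, side):
        if side not in self.SIDES:
            raise ValueError(f"unknown side: {side!r}")
        if self.location != side:
            self.copies += 1     # the copy the programmer no longer writes explicitly
            self.location = side

    def read(self, side, i):
        self._ensure(side)
        return self._data[i]

    def write(self, side, i, value):
        self._ensure(side)
        self._data[i] = value


buf = ManagedBuffer([1, 2, 3])
buf.write("device", 0, 10)   # first GPU access: data migrates host -> device
buf.read("device", 1)        # already on the device: no copy
value = buf.read("host", 0)  # CPU access: data migrates back device -> host
```

Whether real hardware migrates whole pages, caches remotely, or does something else entirely is exactly the performance question left open above; the model only shows why a shared address space does not by itself remove transfers.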

After Maxwell comes Volta, which focuses on increasing memory bandwidth and reducing latency. Volta includes a stack of DRAM on the same silicon substrate as the GPU, which Huang said enables 1TB per second of memory bandwidth.
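To put 1 TB per second in perspective, the arithmetic is simply bytes divided by bandwidth. The sketch below assumes decimal units (1 TB = 10^12 bytes), a hypothetical 1 GB working set, and that the peak figure is sustained with no latency overhead, which real workloads will not achieve.

```python
def transfer_time_ms(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to stream num_bytes at a constant bandwidth, in milliseconds."""
    return num_bytes / bandwidth_bytes_per_s * 1e3


ONE_TB_PER_S = 1e12   # assumption: decimal terabyte, peak bandwidth sustained
WORKING_SET = 1e9     # hypothetical 1 GB of data

t_ms = transfer_time_ms(WORKING_SET, ONE_TB_PER_S)
passes_per_second = ONE_TB_PER_S / WORKING_SET
print(f"one pass over 1 GB: {t_ms:.3f} ms; {passes_per_second:.0f} passes per second")
```

In other words, at that rate the GPU could in principle read a full gigabyte of data about a thousand times a second.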

What about mobile? NVIDIA is aware of the growth in devices of all kinds. Some 2.5bn high-definition displays are sold each year, said Huang, and this figure will double again by 2015. Most of these displays are not on PCs but on smartphones and embedded devices.

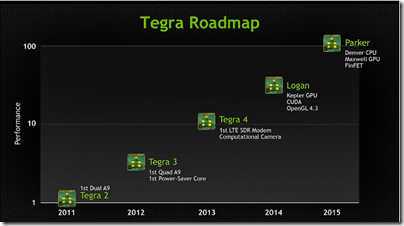

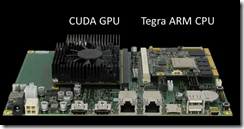

Here is the roadmap for Tegra, NVIDIA’s system-on-a-chip (SoC).

Tegra 4, which I saw in preview at last month’s Mobile World Congress in Barcelona, includes a software-defined modem and a computational camera that can track moving objects while keeping them in focus.

Next is Tegra Logan. This is the first Tegra to include CUDA cores, so you can use it for general-purpose computing. It is based on the Kepler GPU and supports full CUDA 5 computing as well as OpenGL 4.3. Logan will be previewed this year and enter production in early 2014.

After Logan comes Parker. This will be based on the Maxwell GPU (see above) and NVIDIA’s own Denver (ARM-based) CPU. It will include FinFET multigate transistors.

According to Huang, Tegra performance will increase 100-fold over five years. Today’s Surface RT (which runs on Tegra 3) may be sluggish, but Windows RT will run fine on these future SoCs. Of course, Intel is not standing still either.
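A 100-fold gain over five years works out to roughly a 2.5x improvement every year, if the growth compounds evenly. That even-growth assumption is mine, not NVIDIA’s; the roadmap gives only the five-year figure. The calculation is the fifth root of 100:

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant year-on-year multiplier that compounds to total_gain over `years`."""
    return total_gain ** (1 / years)


factor = annual_factor(100, 5)
# Relative performance at the end of each year, starting from 1x today
trajectory = [factor ** year for year in range(6)]
for year, perf in enumerate(trajectory):
    print(f"year {year}: {perf:6.1f}x")
```

On that smooth curve, Tegra would be roughly 6x faster after two years and around 40x faster after four.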

Finally, Huang announced the Grid Visual Computing Appliance, which I will be covering shortly in another post.