At last month’s @Scale conference in San Francisco, developers from a number of well-known companies (Google, Facebook, Twitter, Dropbox and others) spoke about the challenge of scaling applications and services to millions or even billions of users.

Among the speakers was Igor Zaika, Distinguished Engineer in the Microsoft Office team, and the video (embedded below) is illuminating not only as an example of how to code across multiple platforms, but also as an insight into where the company is taking Office.

Zaika gives a brief résumé of the history of Office, mentioning how the team has experienced the highs and lows of cross-platform code. Word 6.0 (1993) was great on Windows but a disaster on the Mac. The team built an entire Win32 emulation layer for the Mac, enabling a high level of code reuse, but resulting in a poor user experience and lots of platform-specific bugs and performance issues in the Mac version.

Next came Word 98 for the Mac, which took the opposite approach, forking the code to create an optimized Mac-specific version. It was well received and great for user experience, but “it was only fun for the first couple of years,” says Zaika. As the Windows version evolved, merging code from the main trunk into the Mac version became increasingly difficult.

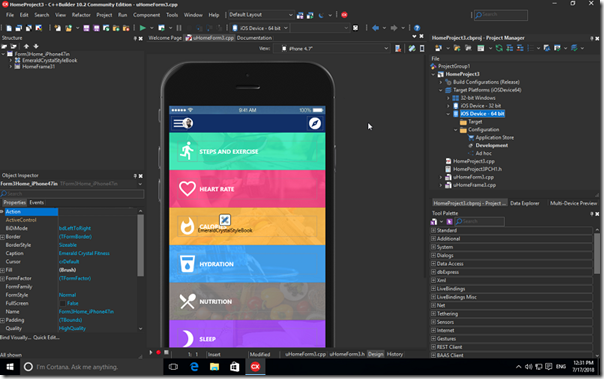

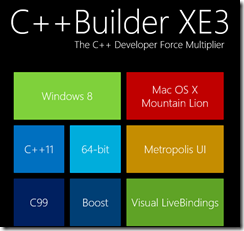

Today Microsoft is committed not only to Mac and Windows versions of Word, but to all the major platforms, by which Zaika means Apple (including iOS), Android, Windows (desktop and WinRT) and Web. “If we don’t, we are not going to have a sustainable business,” he says.

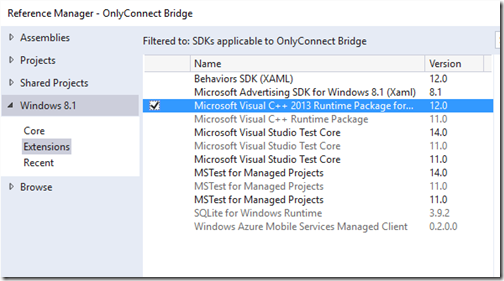

WinRT is short for the Windows Runtime, also known as Metro, or as the Store App platform. Zaika says that the relationship between WinRT and Win32 (desktop Windows) is similar to that between Apple’s OS X and iOS.

Time for a brief digression of my own: some observers have said that Microsoft should have made a dedicated version of Windows for touch/mobile rather than attempting to do both at once in Windows 8. The truth is that it did, but Microsoft chose to bundle both into one operating system in Windows 8. Windows RT (the ARM version used in Surface RT) is a close parallel to the iPad, since only WinRT apps can be installed. What seems to be happening now is that Windows Phone and Windows RT will be merged, so that the equivalence of WinRT and iOS will be closer and more obvious.

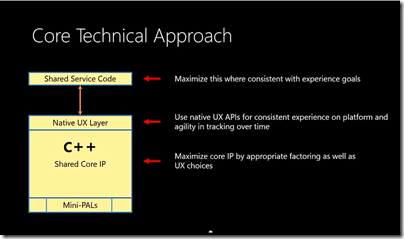

Microsoft’s goal with Office is to achieve high content fidelity and consistency of functionality across all platforms, but to use native UX/UI frameworks so that each version integrates properly with the operating system on which it runs. The company also wants to achieve a faster shipping cycle; the traditional two-year cycle is not fast enough, says Zaika.

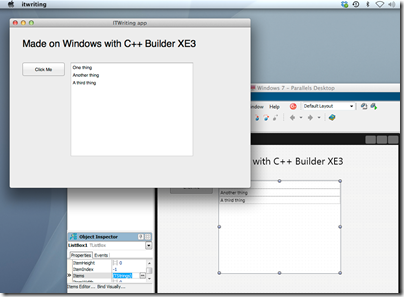

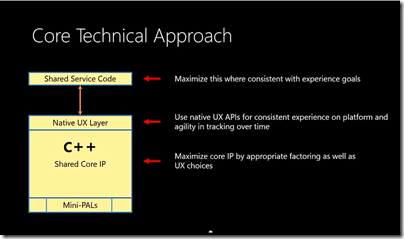

What then is Microsoft’s technical strategy for cross-platform Office now? The starting point, Zaika explains, is a shared core of C++ code. Office has always been written in C/C++, and “that has worked out well for us,” he says, since it is the only language that compiles to native code across all the platforms (web is an exception, and one that Zaika did not talk much about, except to note the importance of “shared service code,” cloud-based code that is used for features that do not need to work offline).

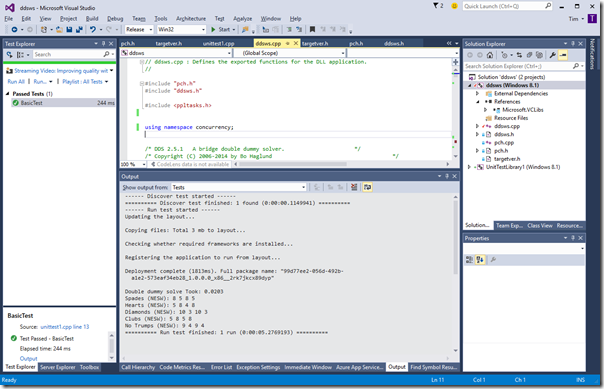

In order for the shared non-visual code to work correctly cross-platform, Microsoft has a number of platform abstraction layers (PALs). No #ifdefs (to handle platform differences) are allowed in the shared code itself. However, rather than a monolithic Win32 emulation as used in Word 6.0 for the Mac, Microsoft now has numerous mini-PALs. There is also a willingness to compromise, abandoning shared code if it is necessary for a good platform experience.

How do you ensure cross-platform fidelity in places where you cannot share code? The alternative is unit testing, says Zaika, and there is a strong reliance on this in Office development.

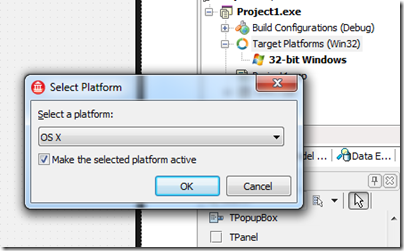

There is also an abstraction layer for document rendering. Office requires composition, animation and touch APIs on each platform. Microsoft uses DirectX on Win32, a thin layer over Apple’s CoreAnimation API on Mac and iOS, a thin layer over XAML on WinRT, and a thinnish layer over Java on Android.

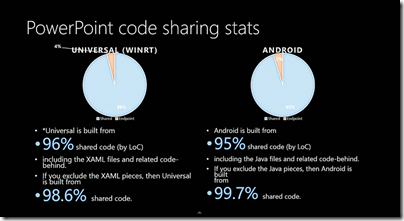

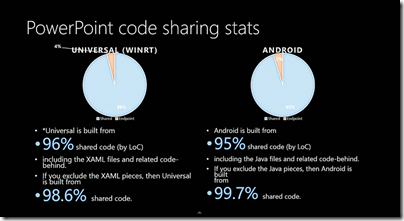

The outcome of Microsoft’s architectural work is a high level of code sharing, despite the commitment to native frameworks for UX. Zaika showed a slide revealing code sharing of over 95% for PowerPoint on WinRT and Android.

What can Microsoft-watchers infer from this about the future of Office? While there are no revelations here, it does seem that work on Office for WinRT and for Android is well advanced.

Office for WinRT has implications for future Windows tablets. If a version of Office with at least the functionality of Office for iPad runs on WinRT, there is no longer any need to include the Windows desktop on future Windows tablets – by which I mean not laptop replacements like Surface 3.0, but smaller tablets. That will make such devices less perplexing for users than Surface RT, though with equivalent versions of Office on both Android and iOS tablets, the unique advantages of Windows tablets will be harder to identify.

Thanks to WalkingCat on Twitter for alerting me to this video.