Microsoft has released SQL Server 2014 to manufacturing, an odd phrase in these diskless days, but one which signifies that it is code complete for the initial release. General availability is April 1st.

What do you do if hardware trends enable you to stuff vast amounts of RAM into your server, along with many CPU cores? The answer is that you optimize applications to work mostly in RAM, with disk important mainly as a persistence layer. This contrasts with the approach when you have large amounts of disk space and little RAM, when you focus on loading only as much data into memory as you absolutely need.

The implications for a database server are profound. Instead of a logic that goes something like “read from disk, do something, write to disk” you can address the data directly; it is just a memory pointer.

Now combine that with stored procedures compiled to native code. Performance leaps up, and by much more than you get by simply caching data in RAM or using fast SSD storage while still using the old disk-based approach in the database engine.

This is the reasoning behind “Hekaton”, properly known as In-Memory OLTP (online transaction processing), which is a new in-memory database engine that comes with SQL Server 2014.

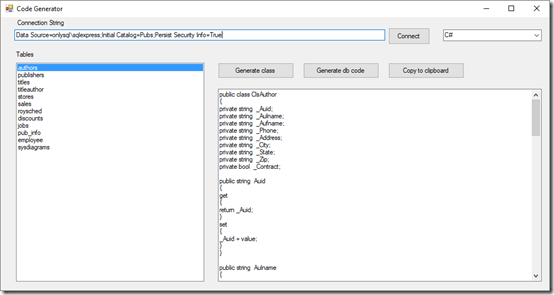

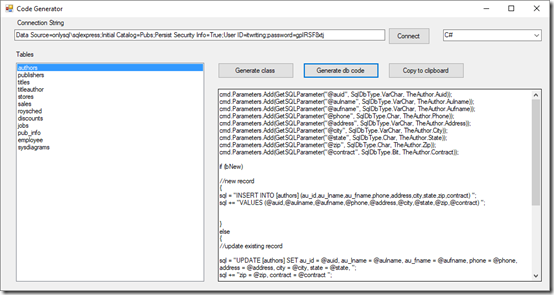

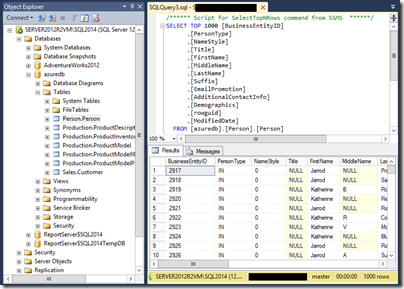

It is fully integrated. You just have to add a filegroup to a SQL Server database with the keywords CONTAINS MEMORY_OPTIMIZED_DATA and then create a table with the option WITH (MEMORY_OPTIMIZED=ON). And for the stored procedures, use WITH NATIVE_COMPILATION.
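As a rough sketch of how those three steps fit together, the T-SQL might look something like the following. The database name `SalesDemo`, the filegroup and file names, the folder path, the `dbo.WebSession` table and the `dbo.usp_AddSession` procedure are all hypothetical names chosen for illustration, and the bucket count is an arbitrary guess rather than a tuned value.

```sql
-- Hypothetical names throughout; adjust the database, path and sizes to suit.
-- 1. Add a filegroup that can hold memory-optimized data, plus a container for it.
ALTER DATABASE SalesDemo
    ADD FILEGROUP SalesDemo_mod CONTAINS MEMORY_OPTIMIZED_DATA;
ALTER DATABASE SalesDemo
    ADD FILE (NAME = 'SalesDemo_mod1', FILENAME = 'C:\Data\SalesDemo_mod1')
    TO FILEGROUP SalesDemo_mod;
GO

-- 2. Create a memory-optimized table. It needs at least one index;
--    here the primary key is a hash index with an assumed bucket count.
CREATE TABLE dbo.WebSession (
    SessionId INT NOT NULL
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1000000),
    UserId    INT NOT NULL,
    LastSeen  DATETIME2 NOT NULL
) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

-- 3. Create a natively compiled stored procedure that writes to the table.
CREATE PROCEDURE dbo.usp_AddSession
    @SessionId INT,
    @UserId    INT,
    @LastSeen  DATETIME2
WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    INSERT INTO dbo.WebSession (SessionId, UserId, LastSeen)
    VALUES (@SessionId, @UserId, @LastSeen);
END;
GO

-- Calling it looks the same as calling any other stored procedure.
EXEC dbo.usp_AddSession @SessionId = 1, @UserId = 42, @LastSeen = '2014-03-18T10:00:00';
```

Note that the natively compiled procedure needs a few extras beyond the NATIVE_COMPILATION keyword: SCHEMABINDING, an EXECUTE AS clause, and an ATOMIC block that states the isolation level and language. GO is a batch separator for tools such as Management Studio, not part of T-SQL itself.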

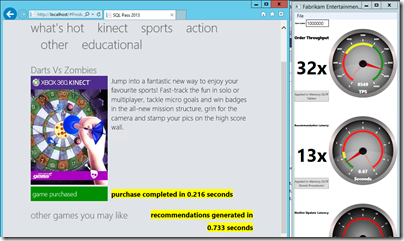

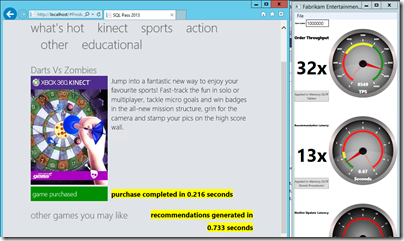

The speed-up is as great as you would expect. I have seen demonstrations of 30x or more performance increases, including one based on a demo from the SQL PASS conference, which I ran for myself in one of Microsoft’s “Hands On Labs”.

In another demo, on an Azure VM, I got a speed-up of 7x. Only seven times faster! Still, hard to complain about those sorts of numbers.

Unfortunately, In-Memory OLTP is spoilt by some rather severe limitations in this release. The first problem is that a combination of the need to support native compilation of stored procedures, and other limitations, means that only a subset of T-SQL (the query and management language of SQL Server) is supported. Microsoft publishes a list of what is not supported, and it is depressing reading, with lots of keywords that you likely do use at the moment; even IDENTITY is on the list of what does not work.

Another issue is that the ability of In-Memory OLTP to take advantage of hardware is not as extensive as you might hope. Lead program manager Kevin Liu told me at a recent press workshop that the team recommends restricting total data size to 256GB, and that the recommended number of CPU sockets is two. You can get servers today with much more memory and more sockets. It gets complicated though: in a multi-socket server memory has processor affinity and there is a thing called NUMA (Non-Uniform Memory Access) that describes the way memory is shared between processors.

According to Liu, Microsoft expects to lift these limitations in future releases, as well as improving T-SQL support, but things like this remind you that it is a version one release.

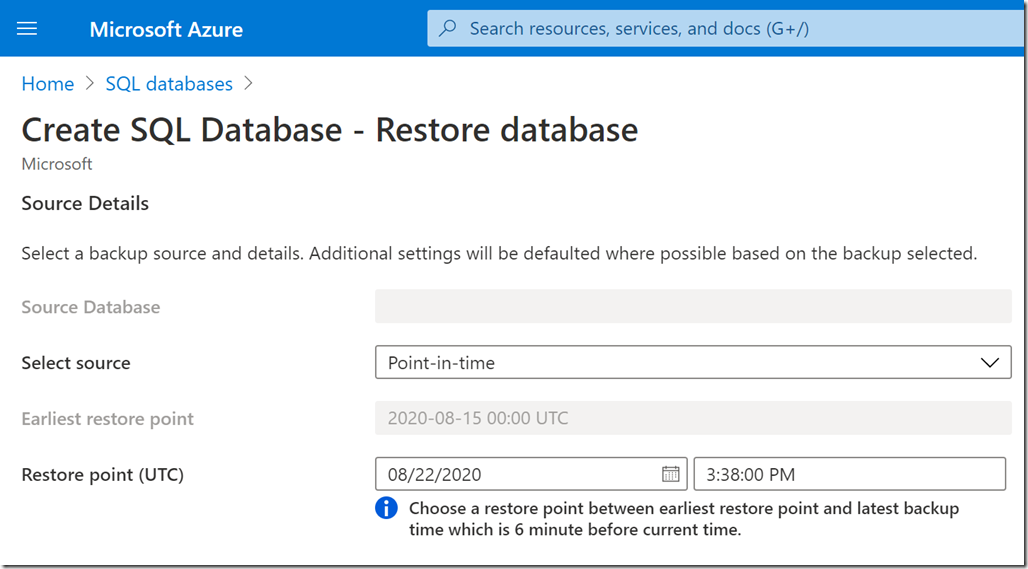

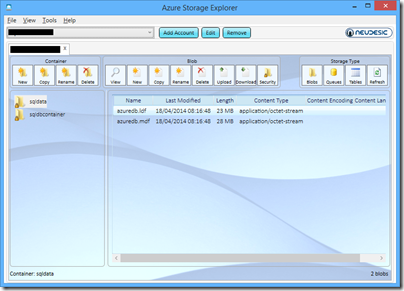

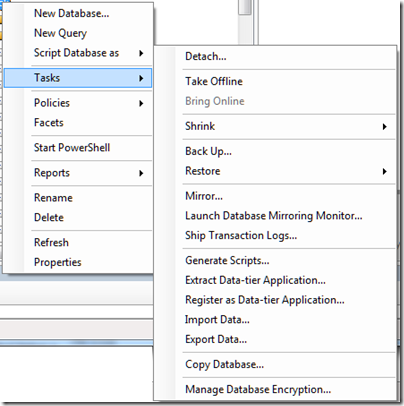

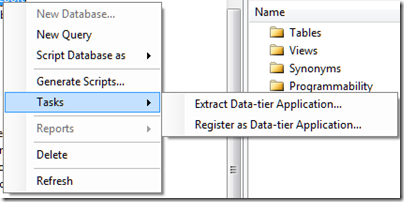

What else is in SQL Server 2014? There is some neat Azure integration, including a managed backup tool that is almost one click to have your data backed up to Azure storage; a brilliant facility for small businesses. You can also use Azure for high availability, creating always-on replicas in Azure VMs.

Data warehouse users will like the new clustered columnstore indexes, which allow you to use a column-oriented table structure for much faster processing of typical report and analysis queries. Columnstore indexes first appeared in SQL Server 2012 but were not updateable. Now they are.
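For a flavour of what this looks like in practice, here is a minimal sketch. The `dbo.FactSales` table, its columns and the index name are hypothetical, made up for illustration. The table is created as a plain heap with no keys or other indexes before the clustered columnstore index goes on.

```sql
-- Hypothetical fact table, created without a primary key or other indexes.
CREATE TABLE dbo.FactSales (
    SaleDate  DATE          NOT NULL,
    ProductId INT           NOT NULL,
    Quantity  INT           NOT NULL,
    Amount    DECIMAL(12,2) NOT NULL
);

-- Store the whole table in column-oriented form.
CREATE CLUSTERED COLUMNSTORE INDEX cci_FactSales ON dbo.FactSales;

-- Unlike the SQL Server 2012 columnstore, the table remains writable.
INSERT INTO dbo.FactSales (SaleDate, ProductId, Quantity, Amount)
VALUES ('2014-03-18', 42, 3, 59.97);

-- A typical reporting query of the kind that benefits from columnar storage.
SELECT ProductId, SUM(Amount) AS TotalAmount
FROM dbo.FactSales
GROUP BY ProductId;
```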

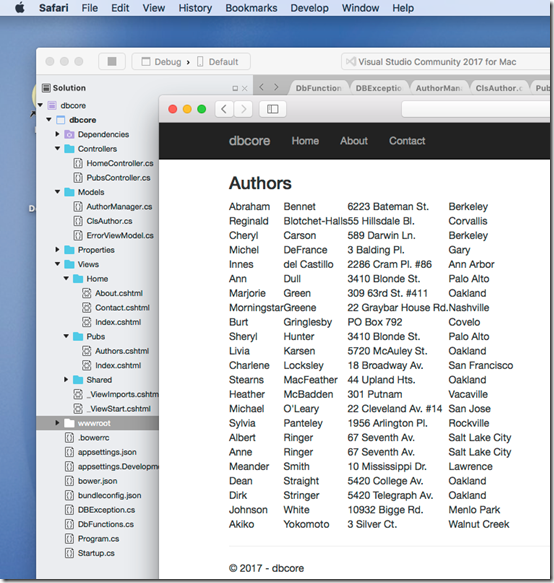

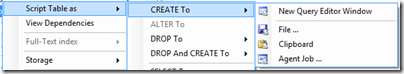

SQL Server is well liked, licensing hassles aside; and even on licensing, Microsoft can always point at Oracle and claim, rightly, to be cheaper and less complex. It has earned a reputation for solid performance. SQL Server 2014 looks as good as ever, even if the management tools now look rather dated – the shell for SQL Server Management Studio uses an old version of Visual Studio, which is one of the reasons. I also suspect the SQL Server team lacks a dialog designer, but doubt that the average database admin cares one jot.

That said, it is difficult to describe this as a must-have upgrade, unless you can make good use of “Hekaton” in-memory OLTP. The porting effort will be worth it presuming you can get it to work. One of the good fits for the technology is managing web app session data, or, as in the example above, rapid processing to display recommendations or customisations on a web site.

I can imagine, though, that many users will look at Hekaton and decide that it is too much work or too immature for immediate use. What is left for them, apart from some nice Azure integration?

Not a huge amount, it seems to me, which makes this a transitional release.

Are you planning to upgrade? I would be interested to know your reasons why or why not.