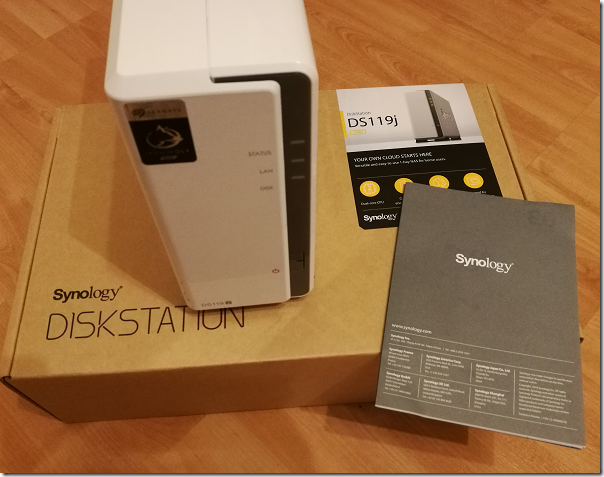

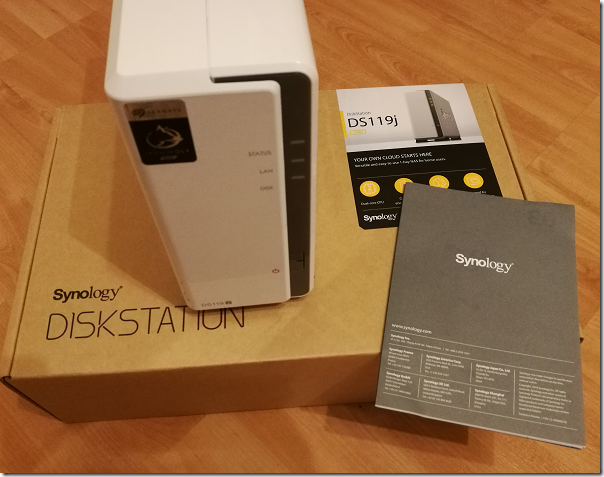

Synology has released a new budget NAS, the DS119j, describing it as “An ideal first NAS for the home”.

It looks similar to the DS115j which it probably replaces – currently both models are listed on Synology’s site. What is the difference? The operating system is now 64-bit, the CPU now a dual-core ARMv8, though still at 800 MHz, and the read/write performance slightly bumped from 100 MB/s to 108 MB/s, according to the documentation.

I doubt any of these details will matter to the intended users, except that the more powerful CPU will help performance – though it is still underpowered, if you want to take advantage of the many applications which this device supports.

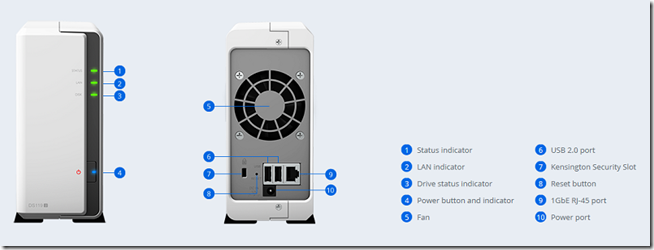

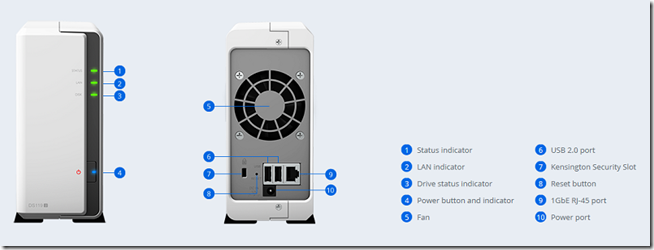

What you get is the DiskStation, a fairly slim white box with a power connector, a Gigabit Ethernet port, and two USB 2.0 ports. It is disappointing to see the slow USB 2.0 standard used here. You will also find a power supply, an Ethernet cable, and a small bag of screws.

The USB ports are for attaching USB storage devices or printers. These can then be accessed over the network.

The DS119j costs around £100.

Initial setup

You can buy these units either empty, as mine was, or pre-populated with a hard drive. Presuming it is empty, you slide the cover off, fit the 3.5" hard drive, secure it with four screws, then replace the cover and secure that with two screws.

What disk should you buy? A NAS is intended to be always on and you should get a 3.5" disk that is designed for this. Two common choices are the WD (Western Digital) Red series, and Seagate IronWolf series. At the time of writing, both a 4TB WD Red and a 4TB IronWolf are about £100 from Amazon UK. The IronWolf Pro is faster and specified for a longer life (no promises though), at around £150.

What about SSD? This is the future of storage (though the man from Seagate at Synology’s press event says hard drives will continue for a decade or more). SSD is much faster but on a home NAS that is compromised by accessing it over a network. It is much more expensive for the same amount of storage. You will need a SATA SSD and a 3.5" adapter. Probably not the right choice for this NAS.

Fitting the drive is not difficult, but neither is it as easy as it could be. It is not hard to make bays in which drives can be securely fitted without screws. Further, the design of the bay is such that you have to angle a screwdriver slightly to turn the screws. Finally, the screw holes in the case are made entirely of plastic and it would be easy to overtighten the screws and strip the thread, so be careful.

Once assembled, you connect the drive to a wired network and power it on. In most home settings, you will attach the drive to a network port on your broadband router. In other cases you may have a separate network switch. You cannot connect it over wifi and this would anyway be a mistake as you need the higher performance and reliability of a cable connection.

Once the NAS is powered on and connected to your network (and therefore to the internet), you need to find it on the network, which you can do in one of several ways, including:

– Download the DS Finder app for Android or iOS.

– Download Synology Assistant for Windows, Mac or Linux

– Have a look at your DHCP manager (probably in your router management for home users) and find the IP address
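If you would rather script the third option, here is a minimal sketch that probes a subnet for hosts answering on port 5000, which is DSM's default web interface port. The subnet, the timeout, and the function names are my own assumptions for illustration, and any device listening on that port will show up, not just a DiskStation.

```python
import socket
from ipaddress import ip_network

DSM_HTTP_PORT = 5000  # DSM's default web interface port (assumes it has not been changed)


def port_is_open(host: str, port: int, timeout: float = 0.3) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: treat all of these as "not open".
        return False


def find_candidates(subnet: str, port: int = DSM_HTTP_PORT) -> list:
    """Return the addresses in the subnet that accept connections on the port."""
    return [
        str(ip)
        for ip in ip_network(subnet).hosts()
        if port_is_open(str(ip), port)
    ]
```

Calling `find_candidates("192.168.1.0/24")` (a typical home subnet, but check your own router) returns a list of addresses to try in the browser as `http://<address>:5000`. The scan is sequential, so a full /24 can take a minute or so.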

If you use DS Finder you can set up the Synology DiskStation from your phone. Otherwise, you can use a web browser (my preferred option). All you need to do to get started is to choose a username and password. You can also choose whether to link your DiskStation with a Synology account and create a QuickConnect ID for it. If you do this, you will be able to connect to your DiskStation over the Internet.

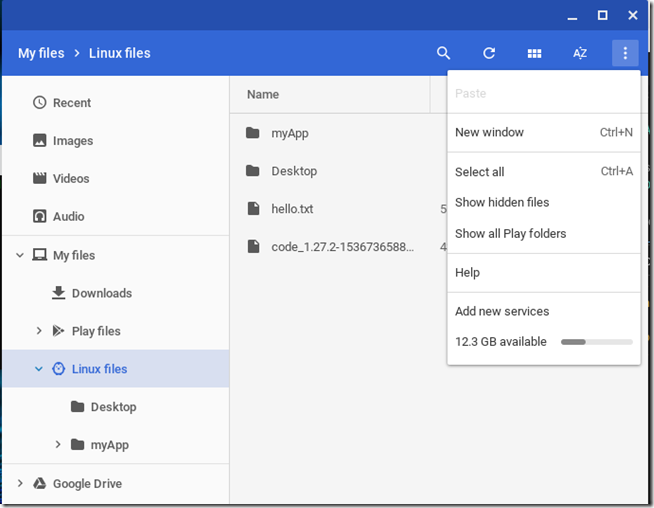

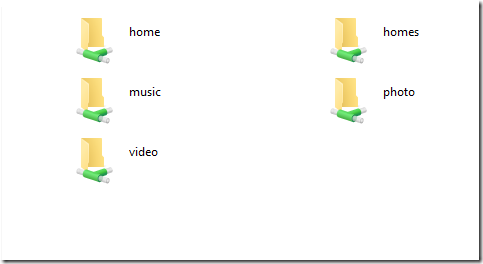

The DiskStation sets itself up in a default configuration. You will have network folders for music, photo, video, and another called home for other documents. Under home you will also find Drive, which behaves like a folder but has extra features for synchronization and file sharing. For full use of Drive, you need to install a Drive client from Synology.

If you attach a USB storage device to a port on the DS119j, it shows up automatically as usbshare1 on the network. This means that any USB drive becomes network storage, a handy feature, though only at USB 2.0 speed.
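To put the USB 2.0 limitation in numbers, here is a rough back-of-envelope calculation. It compares the USB 2.0 signalling rate of 480 Mbit/s and the Gigabit Ethernet rate of 1000 Mbit/s with the 108 MB/s Synology quotes for the DS119j. These are theoretical ceilings; real USB 2.0 transfers are noticeably slower, so treat the USB figure as a best case. The 1TB copy size is just an illustrative assumption.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second."""
    return mbit_per_s / 8


def hours_to_copy(size_gb: float, rate_mb_per_s: float) -> float:
    """Hours needed to copy size_gb gigabytes at a sustained rate in MB/s."""
    return size_gb * 1000 / rate_mb_per_s / 3600


usb2_ceiling = mbit_to_mbyte(480)      # theoretical USB 2.0 maximum
gigabit_ceiling = mbit_to_mbyte(1000)  # theoretical Gigabit Ethernet maximum
quoted_nas_rate = 108                  # MB/s, from Synology's documentation

for label, rate in [
    ("USB 2.0 (ceiling)", usb2_ceiling),
    ("DS119j (quoted)", quoted_nas_rate),
    ("Gigabit Ethernet (ceiling)", gigabit_ceiling),
]:
    print(f"{label}: {rate:.0f} MB/s, 1TB in {hours_to_copy(1000, rate):.1f} hours")
```

Even at its theoretical best, the USB port is the bottleneck: a backup to an attached USB drive runs at little more than half the speed the NAS can manage over the network.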

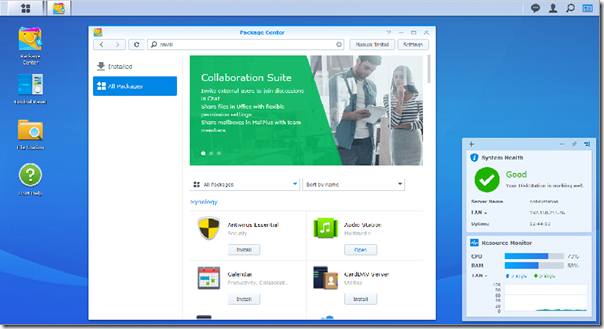

Synology DSM (DiskStation Manager)

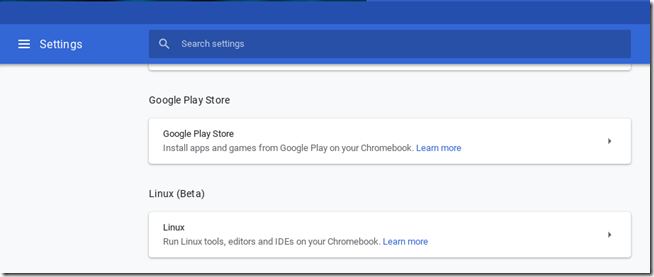

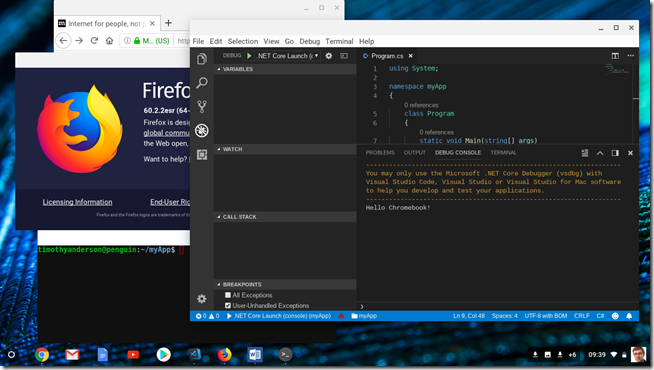

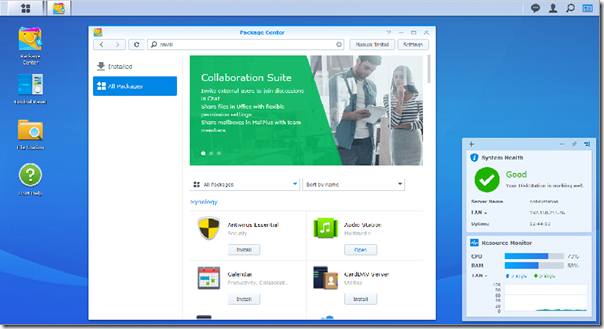

Synology DSM is a version of Linux adapted by Synology. It is mature and robust, now at version 6.2. The reason a Synology NAS costs much more than, say, a 4TB WD Elements portable USB drive is that the Synology is actually a small server, focused on storage but capable of running many different types of application. DSM is the operating system. As on most Linux systems, you install applications via a package manager, and Synology maintains a long list of packages covering a diverse range of functions, from backup and media serving through to business-oriented applications such as Java application hosting, a web server, Docker containers, support ticket management, email, and many more.

DSM also features a beautiful windowed user interface all running in the browser.

The installation and upgrade of packages is smooth, and whether you consider it as a NAS or as a complete server system for small businesses, it is impressive and (compared to a traditional Windows or Linux server) easy to use.

The question in relation to the DS119j is whether DSM is overkill for such a small, low-power device.

Hyper Backup

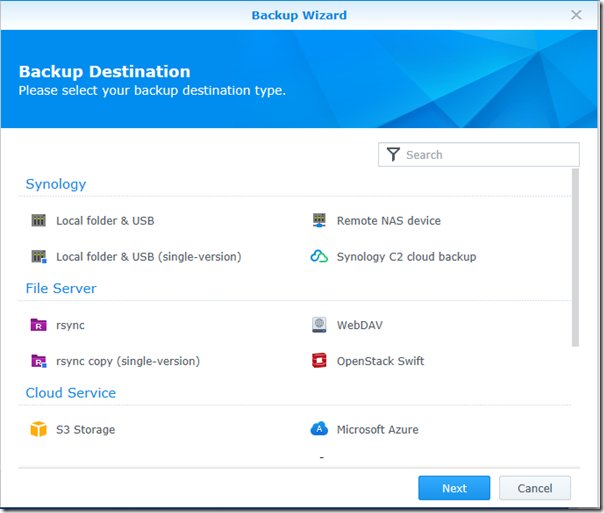

Given that this NAS only has a single drive, it is particularly important to back up any data. Synology includes an application for this purpose, called Hyper Backup.

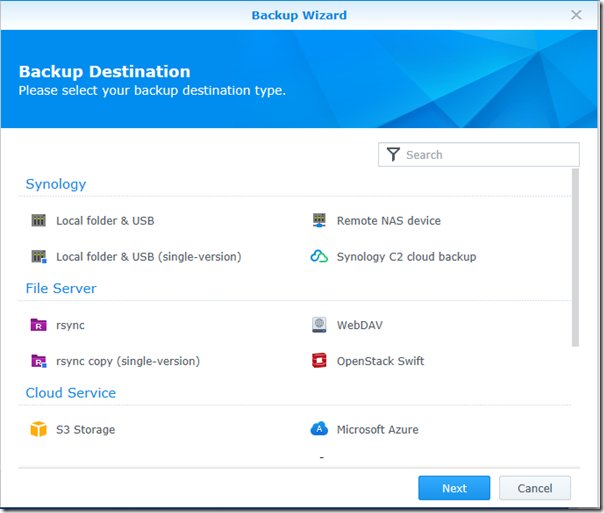

Hyper Backup is very flexible and lets you back up to many destinations, including Amazon S3, Microsoft Azure, Synology’s own C2 cloud service, or local storage. For example, you could attach a large USB drive to the USB port and back up to that. Scheduling is built in.

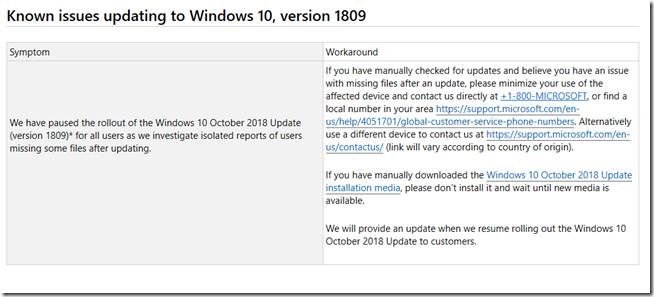

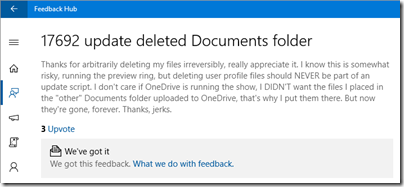

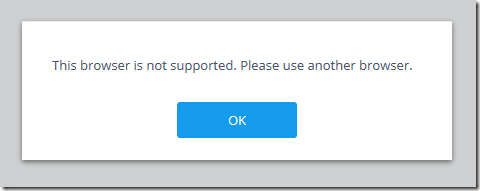

I had a quick look at the Synology C2 service. It did not go well. I use the default web browser on Windows 10, Edge, and trying to set up Hyper Backup to Synology C2 just got me this error message.

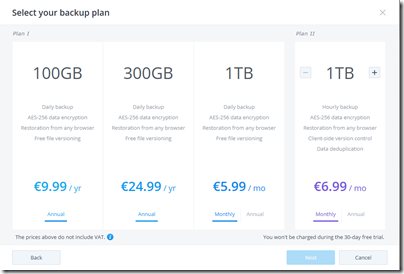

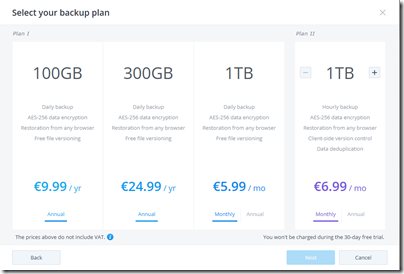

I told Edge to pretend to be Firefox, which worked fine. I was invited to start a free trial. Then you get to choose a plan:

Plans start at €9.99 + VAT for 100GB for a year. Of course if you fill your 4TB drive that will not be enough. On the other hand, not everything needs to be backed up. Things like downloads that you can download again, or videos ripped from discs, are not so critical, or are better backed up to local drives. Cloud backup is ideal though for important documents since it is an off-site backup. I have not compared prices, but I suspect that something like Amazon S3 or Microsoft Azure would be better value than Synology C2, though integration will be smooth with Synology’s service. Synology has its own datacentre in Frankfurt so it is not just reselling Amazon S3; this may also help with compliance.
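Before picking a plan it helps to know how much data you actually want off-site. Here is a small sketch that adds up the size of a folder tree and checks it against the 100GB entry plan mentioned above. The function names are my own, the quota is counted in decimal gigabytes (an assumption about how the plan is measured), and you would point it at wherever your important documents live.

```python
import os

PLAN_QUOTA_GB = 100  # size of the entry plan quoted above


def tree_size_bytes(root: str) -> int:
    """Total size in bytes of all regular files under root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def fits_quota(size_bytes: int, quota_gb: float = PLAN_QUOTA_GB) -> bool:
    """True if size_bytes fits in quota_gb, using decimal gigabytes."""
    return size_bytes <= quota_gb * 1000**3
```

For example, `fits_quota(tree_size_bytes("/path/to/documents"))` tells you whether that folder alone would fit in the smallest plan. Hyper Backup keeps versions of your files, so the space used on the service may differ from the raw figure.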

An ideal first NAS?

The DS119j is not an ideal NAS for one simple reason: it has only a single bay so does not provide resilient storage. In other words, you should not have data that is stored only on this DiskStation, unless it is not important to you. You should ensure that it is backed up, maybe to another NAS or external drive, or maybe to cloud storage.

Still, if you are aware of the risks with a single drive NAS and take sensible precautions, you can live with it.

I like Synology DSM, which makes the small NAS devices great value as small servers. For home users, they are great for shared folders, media serving (I use Logitech Media Server with great success), and PC backup. For small businesses, they are a strong substitute for the role once occupied by Microsoft’s Small Business Server, and they are cheaper and easier to use.

If you only want a networked file share, there are cheaper options from the likes of Buffalo, but Synology DSM is nicer to use.

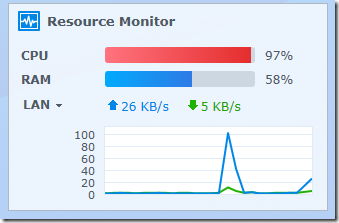

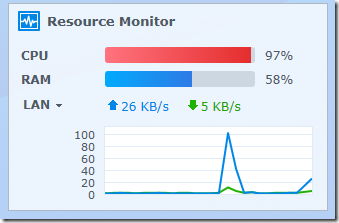

If you want to make fuller use of DSM though, this model is not the best choice. I noticed the CPU often spiked just using the control panel and package manager.

I would suggest stretching to at least the DS218j, which is similar but has 2 bays, 512MB of RAM and a faster CPU. Better still, I like the x86-based Plus series – but a 2-bay DS218+ is over £300. A DS218j is half that price and perhaps the sweet spot for home users.

Finally, Synology could do better with documentation for the first-time user. Getting started is not too bad, but the fact is that DSM presents you with a myriad of options and applications and a better orientation guide would be helpful.

Conclusion? OK, but get the DS218j if you can.