For several years the story with Office 365 was that email (essentially hosted Exchange) works great but OneDrive cloud storage, not so good. The main issues were not with the cloud storage as such, but with the sync client on Windows. It would mysteriously stop syncing and require a painful reset process to get it going again.

Microsoft squashed a lot of bugs and eventually released a much-improved “Next generation sync client” (NGSC) based on consumer OneDrive rather than Groove technology.

In the 2017 Windows 10 Fall Creators Update Microsoft also introduced Files on Demand, a brilliant feature that lists everything available but downloads only the files that you use.

The combination of the new sync client and Files on Demand means that life has got better for OneDrive users. It is not yet perfect though, and recently I came across another issue. This is where you get a strange “Upload blocked” message when attempting to save a document to the OneDrive location on your PC. Everything works fine if you go to the OneDrive site on the web; but this is not the way most users want to work.

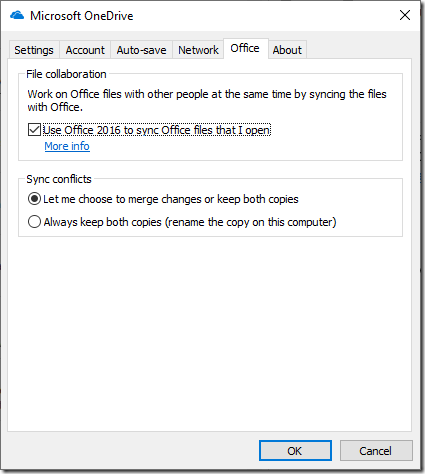

The most popular fix for this problem is to go into OneDrive settings (right-click the little cloud icon to the right of the taskbar and choose Settings). Then find the Office tab and uncheck “Use Office 2016 to sync Office files that I open.” But don’t do that yet!

If you check this thread you will see that over a thousand users clicked to say they had the same problem, and over 400 clicked to say that the solution helped them. Significant numbers for one thread.

But what does this option do? It appears that checking the option makes big changes to the way Office files are saved. Here is the explanation:

> Similar to how Office opens files, saves start with the locally synced file. After the file saves, Office will upload changes directly to the server. If Office can’t upload because the device is offline, you can keep working offline or close the file. Office will continue to save to the locally synced file, and OneDrive will handle the upload once the device gets back online. In this integration, Office works directly with the files that are currently open, enabling co-authoring in Office apps like Word on the desktop, which no competitor offers. For files that are not open in Office, OneDrive handles all syncing. This is the key difference between the old sync client integration and the NGSC, and this lets us achieve co-authoring along with the best performance and sync reliability.

We can conclude from this that the “Upload blocked” message appears when Office (not OneDrive) tries to “upload changes directly to the server”. This means that Office, as well as OneDrive, needs to be signed in. The place to check these settings is on the Account tab of the File menu in an Office application like Word or Excel. There is a section called Connected Services, and you need to make sure it lists all the OneDrive locations you use.
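To make that reasoning concrete, here is a toy model of the save flow in Python. It is only a sketch of my reading of the explanation quoted above, not how Office or OneDrive are actually implemented. The names `SaveContext` and `who_uploads` are made up for illustration, and the assumption that a missing Office sign-in is what produces “Upload blocked” is my inference rather than anything Microsoft has documented.

```python
from dataclasses import dataclass


@dataclass
class SaveContext:
    """Hypothetical snapshot of the state when a document is saved."""
    office_sync_enabled: bool   # the "Use Office 2016 to sync..." checkbox
    file_open_in_office: bool   # is the file currently open in Word, Excel, etc.
    device_online: bool
    office_signed_in: bool      # OneDrive location listed under Connected Services


def who_uploads(ctx: SaveContext) -> str:
    """Return which component uploads a saved change, or 'upload blocked'."""
    # Integration switched off, or file not open in Office: OneDrive syncs it.
    if not (ctx.office_sync_enabled and ctx.file_open_in_office):
        return "onedrive"
    # Offline: Office saves locally and OneDrive uploads once back online.
    if not ctx.device_online:
        return "onedrive (deferred)"
    # Online with integration on: Office uploads directly.
    # Assumption: this is the step that fails if Office is not signed in.
    if not ctx.office_signed_in:
        return "upload blocked"
    return "office"


if __name__ == "__main__":
    broken = SaveContext(True, True, True, office_signed_in=False)
    print(who_uploads(broken))
```

In this model there are two ways out of the blocked state: sign Office in to the OneDrive location, or switch the integration off so that OneDrive handles every upload itself.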

I suggest that you check these settings before unchecking the “Use Office 2016 to sync” option in OneDrive. However, if it still does not work and you cannot troubleshoot it, unchecking the option is worth a try in order to get reliable OneDrive sync.

If you uncheck the “Use Office 2016” option you will lose a couple of features:

- Real-time co-authoring with the desktop application

- Merge changes to resolve conflicts

The first of these features is amazing but many people rarely use it. It depends on the way you and your organization work. The second is to my mind a bit hazardous anyway.