What has rescued Microsoft in the cloud era? It seems to me that Office 365, rather than Azure, is its most strategic product. Users do not like too much change, and when Office 365 was introduced in 2011 it offered an easy way for businesses small and large to retire their Exchange servers while retaining Outlook with all its functionality (Outlook works with other mail servers but with limited features). You also got SharePoint Online, cloud storage, and in-browser versions of Word, Excel and PowerPoint.

There was always another aspect to Office 365 though, which is that it allowed you to buy the Office desktop applications as a subscription. Unless you are the kind of person (or business) that happily runs old software, the subscription is better value than a perpetual license, especially for small businesses. Currently Office 365 Business Premium gets you Outlook, Word, Excel, PowerPoint, OneNote and Access, as well as hosted Exchange, SharePoint and so on, for £9.40 per month. Office Home and Business (which does not include Access) is £250, or about the same as two years' subscription, and can only be installed on one PC or Mac, versus 5 PCs or Macs, 5 tablets and 5 mobile devices for the subscription product.
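To put a number on "about the same as two years", here is a minimal sketch of the break-even arithmetic. It assumes only the two prices quoted above and ignores tax, future price changes, and the difference in what each product includes and how many devices it covers. The function names are my own, for illustration.

```python
import math

# Prices quoted in the text, held in pence to avoid floating-point rounding.
SUBSCRIPTION_PENCE_PER_MONTH = 940   # Office 365 Business Premium, £9.40
ONE_OFF_PENCE = 25_000               # Office Home and Business, £250


def subscription_cost(months: int) -> int:
    """Total subscription cost in pence after the given number of months."""
    return SUBSCRIPTION_PENCE_PER_MONTH * months


def break_even_months() -> int:
    """First whole month in which the subscription has cost at least the one-off price."""
    return math.ceil(ONE_OFF_PENCE / SUBSCRIPTION_PENCE_PER_MONTH)


if __name__ == "__main__":
    print(f"Two years of subscription: £{subscription_cost(24) / 100:.2f}")
    print(f"Break-even after {break_even_months()} months")
```

On these figures two years of subscription comes to £225.60, and the subscription only overtakes the one-off price in month 27.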

The subscription product is called Office 365, and the latest version of the desktop suite is called Office 2019. Microsoft would much rather you bought the subscription, not only because it delivers recurring revenue, but also because Office 365 is a great upselling opportunity. Once you are on Office 365 and Azure Active Directory, products like Dynamics 365 are a natural fit.

Microsoft’s enthusiasm for the subscription product has resulted in a recent “Twins Challenge” campaign, which features videos of identical twins trying the same task in both Office 365 and Office 2019. They are silly videos and do a poor job of selling the Office 365 features. For example, in one video the task is to “fill out a spreadsheet with data about all 50 states” (US-centric or what?).

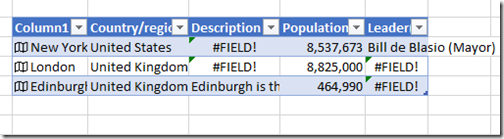

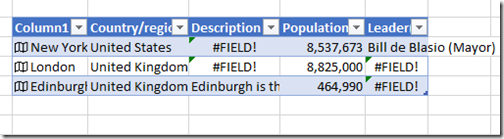

In the video, the Office 365 guy is done in seconds thanks to Excel Data Types, a new feature which uses online data from the Bing search engine to fill in fields such as population and capital city. It seems though that the twins were given a spreadsheet that already listed the 50 states, as Excel cannot enter these automatically. When I tried my own exercise with a few capital cities I found it frustrating: not much data was available, and what there is is inconsistent, so that one city has fields that are missing for another. So my results were not that great.

I’m also troubled to see data like population chucked into a spreadsheet with no information on its source or scope. Is that Greater London (technically a county) or something less than that? What year? Whose survey? These things matter.

Perhaps even more to the point, this is not what most users do with Office. It varies of course, but a lot of people type documents and build simple spreadsheets that do not stress the product. They care about things like: will it print correctly? If I email it, will the recipient be able to read it OK? Office, to be fair, is good in both respects, but Microsoft often struggles to bring new features to Office that matter to a large proportion of users (though every feature matters to someone).

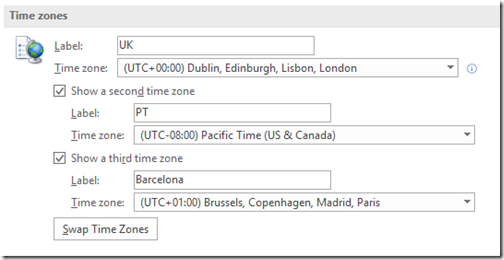

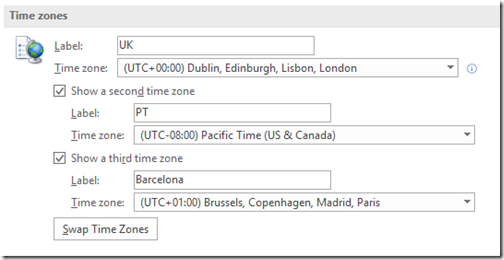

It is interesting to browse through Microsoft's list of new features in Office 2019. LaTeX equation support, nice. And a third time zone in Outlook, handy if you discover it in the convoluted Outlook UI (and yes, discoverability is a problem).

It is worth noting that for document editing the free LibreOffice is excellent and good enough for a lot of purposes. You do not get Outlook, however, and Calc is no Excel. If you mostly do word processing, do look at LibreOffice; it is better in some respects than Word (style support, for example).

I use Office constantly and, like all users, I have a list of things I would like fixed or improved, which for the most part seem to be completely different from what the Office team focuses on. There are even longstanding bugs; see the recent comment. Ever had an email in Outlook, clicked Reply, and found that the formatting and background of the original message affect your reply text as well, and that the only way to fix it is to remove all formatting? Or been frustrated that Outlook makes it so hard to add inline comments in a reply with sensible formatting? Or been driven crazy by Word paragraph numbering and indentation when you want to have more than one paragraph within the same numbered point? Little things, but they could be better.

Then again there is AutoSave (note that this is quite different from AutoRecover), which is both recent and a fantastic feature. Unfortunately it only works with OneDrive. The value of this feature was brought home to me by an anecdote: a teenager who lost all the work in their Word document because they had not previously encountered a Save button (Google Docs saves automatically). Automatic saving becomes what you expect.

So yes, Office does improve, and for what you get it is great value. Will Office 2019 users miss lots of core features? No. In most cases though, the Office 365 subscription is much better value.