I like this book. I know I like it because I find myself wanting to quote from it frequently. It is a book that almost every software developer should read, even if you disagree with parts of it – which is likely, because it is opinionated. The authors always give reasons for their opinions though, which means that if you disagree, you need to articulate why that is; or they may even change your mind. In consequence you find yourself learning as you read.

The authors are software theoreticians, but they are also practitioners; in fact they are practitioners first and theoreticians afterwards. This means they are pragmatic rather than dogmatic. Here is an example. Chapter 13 discusses software dependencies, and page 372 covers circular dependencies, “probably the nastiest dependency problem.” A circular dependency is when component A depends on component B, and component B also depends on component A.
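To make that definition concrete, here is a minimal sketch of how a build tool might detect such a cycle. It is my own illustration, not code from the book: the `find_cycle` function and the component names are hypothetical, and the dependency graph is assumed to be a simple dictionary mapping each component to the components it depends on.

```python
from typing import Dict, List, Optional


def find_cycle(deps: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one dependency cycle as a list of component names, or None."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state = {name: WHITE for name in deps}
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        state[node] = GREY
        path.append(node)
        for dep in deps.get(node, []):
            if state.get(dep, WHITE) == GREY:
                # dep is already on the current path, so we have looped back
                return path[path.index(dep):] + [dep]
            if state.get(dep, WHITE) == WHITE:
                found = visit(dep)
                if found:
                    return found
        path.pop()
        state[node] = BLACK
        return None

    for name in list(deps):
        if state[name] == WHITE:
            found = visit(name)
            if found:
                return found
    return None


# Hypothetical graphs: one with the A <-> B cycle, one cleanly layered.
circular = {"A": ["B"], "B": ["A"]}
layered = {"app": ["lib"], "lib": ["core"], "core": []}

print(find_cycle(circular))
print(find_cycle(layered))
```

A depth-first search is enough here because a cycle exists exactly when the search reaches a component that is still on the current path.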

Circular dependencies are generally a bad idea, yet the authors write:

Surprisingly, we have seen successful projects with circular dependencies in their build systems. You may argue with our definition of “successful” in this case, but there was working code in production, which is enough for us.

As an aside, this kind of dry humour is characteristic, as also evident in remarks like this:

We are certain that, occasionally, manually intensive releases work smoothly. We may well have been unlucky in having mostly seen the bad ones.

The subject of the book is Continuous Delivery. So what is that? Well, if Continuous Integration is about ensuring that your software always builds, then Continuous Delivery is about ensuring that your software always deploys. The final form, as it were, of Continuous Delivery is Continuous Deployment, where you are so confident of your automated build and deploy process that any checked-in code that passes its tests can be deployed immediately. I was confused about the difference between Continuous Delivery and Continuous Deployment so I wrote a post about it; it turns out that there is not much difference.

The principle behind Continuous Delivery is that software is not done until it is released. If the release process is long, arduous and infrequent, then you are not really doing Agile development. A section of chapter 1 is devoted to release anti-patterns, and these form an excellent rationale for taking an interest in Continuous Delivery.

My guess is that anyone who has been involved in professional software development will wince a little while reading through these anti-patterns, thinking “that is what we used to do” or even “that is what we do”.

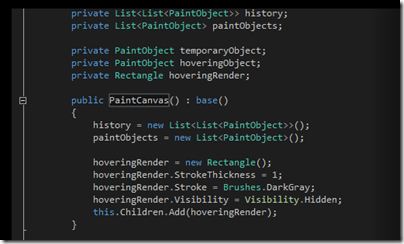

That said, Humble and Farley do not fall into the trap of merely writing about how not to do it. Rather, they address in some detail the kinds of problems you will face if you decide to embrace the Continuous Delivery methodology. The key ingredient in Continuous Delivery is that pretty much everything must be automated; otherwise it is too difficult to do. But how do you automate something like Acceptance Testing? That is the subject of chapter 8. How do you automate a deployment at all? That is the subject of chapter 6. The authors are not on a higher plane than the rest of us, and much of the advice is straightforward, even at the level of “Always use relative paths,” which is a tip in chapter 6.

The authors talk a lot about testing, as you would expect, but there is also extensive discussion of software configuration management, describing different approaches such as centralised and distributed version control and even specific tools. The chapter on Advanced Version Control is a particularly good read. Humble and Farley articulate the point that branching and merging is antithetical to Continuous Integration and therefore Continuous Delivery:

If different members of the team are working on separate branches or streams then by definition they’re not continuously integrating (p 390)

Does this mean branches are a bad idea? Not always, say the authors, but they also state:

Our strong recommendation is to create long-lived branches only on release … new work is always committed to the trunk (p 392)

The reason is not only to enable Continuous Integration, but also because merging is complex and error-prone.

Software configuration management is not easy, but it is a relatively mature aspect of software development. This is less true of what you might call infrastructure configuration management; yet infrastructure dependencies such as versions and configurations of the operating system or web server are a common reason for deployment failures. Several chapters discuss this problem in detail. In principle, the authors say:

The desired state of your infrastructure should be specified through version-controlled configuration.

This leads to some thoughtful discussion of how to achieve this.
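As a rough illustration of the principle, and not something taken from the book, the sketch below compares a desired state with what a server actually reports. The `find_drift` function, the keys and the version strings are all hypothetical; in practice the desired state would be loaded from a file kept in version control, and the actual state gathered by whatever tooling you use.

```python
from typing import Dict, List


def find_drift(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """List human-readable differences between desired and actual state."""
    problems = []
    for key, want in sorted(desired.items()):
        have = actual.get(key)
        if have is None:
            problems.append(f"{key}: missing (want {want})")
        elif have != want:
            problems.append(f"{key}: have {have}, want {want}")
    return problems


# Hypothetical desired state; assumed to come from version control.
desired_state = {"os": "ubuntu-22.04", "web_server": "nginx-1.24"}
# Hypothetical state reported by a server.
actual_state = {"os": "ubuntu-22.04", "web_server": "nginx-1.18"}

drift = find_drift(desired_state, actual_state)
print(drift)
```

The point of the sketch is only that once the desired state is data under version control, checking a machine against it becomes a mechanical comparison rather than a matter of memory.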

Another theme, as you would expect, is that development and operations people need to be working together and not in isolation. To some extent this is a DevOps book.

A great book then; but there are flaws. One is that there is some repetition because of the way the book is organised. This is good if you are inclined to read chapters in isolation, but not so good if you are reading straight through. In practice I did not find it too annoying, but it is there.

Another issue is that while the authors do cover Microsoft .NET to some extent, this is usually in the form of a brief mention and there is more focus on Java. This may be in part because of their preference for open source. It is still a good read for .NET developers, because the principles are platform-agnostic, but Microsoft platform developers may find it irritating at times. Team Foundation Server, say the authors, is “essentially an inferior knock-off of Perforce” (p 386).

The discussion of specific tools is a strength but also a weakness, in that the tools will change over time and the book will become dated.

This is not the last word on Continuous Delivery, but it is an enjoyable and thought-provoking read. Recommended.