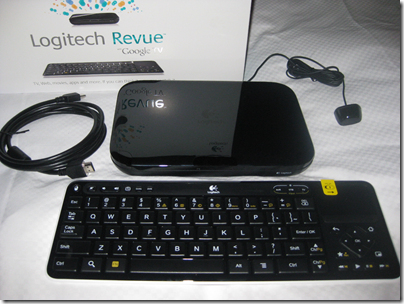

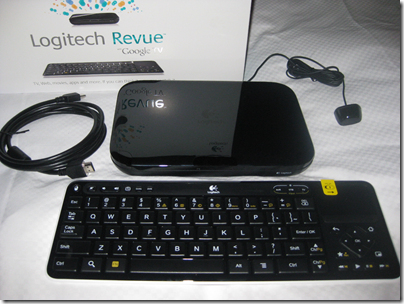

I received a Google TV as an attendee at the Adobe MAX conference earlier this year; to be exact, a Logitech Revue. It is not yet available or customised for the UK, but with its universal power supply and standard HDMI connections it works OK, with some caveats.

The main snag with my evaluation is that I use a TV with built-in Freeview (over-the-air digital TV) and do not use a set top box. This is bad for Google TV, since it wants to sit between your set top box and your TV, with an HDMI in for the set top box and an HDMI out to your screen. Features like picture-in-picture, TV search, and the ability to choose a TV channel from within Google TV all depend on this. Without a set-top box you can only use Google TV for the web and apps.

I found myself comparing Google TV to Windows Media Center, which I have used extensively both directly attached to a TV and over the network via Xbox 360. Windows Media Center gets round the set top box problem by having its own TV card. I actually like Windows Media Center a lot, though I have had occasional glitches. If you have a PC connected directly, of course this also gives you the web on your TV. Sony’s PlayStation 3 also has a web browser with Adobe Flash support, as does the Nintendo Wii, though it is more basic.

What you get with Google TV is a small set top box (in my case it slipped unobtrusively onto a shelf below the TV), a wireless keyboard, an HDMI connector, and an IR blaster. Installation is straightforward and the box recognised my TV to the extent that it can turn it on and off via the keyboard. The IR blaster lets you position an infra-red transmitter optimally for any IR devices you want to control from Google TV – typically your set-top box.

I connected to the network through wi-fi initially, but this was glitchy and would lose the connection for no apparent reason. I plugged in an ethernet cable and all was well. This problem may be unique to my set-up, or something that gets a firmware fix, so no big deal.

There is a usability issue with the keyboard. This has a trackpad which operates a mouse pointer, under which are cursor keys and an OK button. You would think that the OK button represents a mouse click, but it does not. The mouse click button is at top left on the keyboard. Once I discovered this, the web browser (Chrome, of course) worked better. You do need the OK button for navigating the Google TV menus.

I also dislike having a keyboard floating around in the living room, though it can be useful, especially for things like Gmail, Twitter or web forums on your TV. Another option is to control Google TV from a mobile app on an Android smartphone.

The good news is that Google TV is excellent for playing web video on your TV. YouTube has a special “leanback” mode, optimised for viewing from a distance, which works reasonably well, though amateur videos that look tolerable in a small frame in a web browser look terrible played full-screen in the living room. BBC iPlayer works well in on-demand mode; the download player would not install. Overall it was a bit better than the PS3, which is also pretty good for web video, but probably not by enough to justify the cost if you already have a PS3.

The bad news is that the rest of the Web on Google TV is disappointing. Fonts are blurry, and the magnification needed to make a web page readable from 12 feet back is often annoying. Flash works well, but Java seems to be absent.

Google also needs to put more thought into personalisation. The box encouraged me to set up a Google account, which will be necessary to purchase apps and which also gives me access to Gmail and so on; I also set up the Twitter app. But typically the living room is a shared space: do you want, for example, a babysitter to have access to your Gmail and Twitter accounts? It needs some sort of profile management and log-in.

In general, the web experience you get by bringing your own laptop, netbook or iPad into the room is better than Google TV in most ways apart from web video. An iPad is similar in size to the Google TV keyboard.

Media on Google TV has potential, but is currently limited by the apps on offer. Logitech Media Player is supplied and is a DLNA client, so if you are lucky you will be able to play audio and video from something like a NAS (network attached storage) drive on your network. Codec support is limited.

In a sane, standardised world you would be able to stream music from Apple iTunes or a Squeezebox server to Google TV, but we are not there yet.

One key feature of Google TV is purchasing streamed videos from Netflix, Amazon VOD (Video on Demand) or Dish Network. I did not try this, as these services do not yet work in the UK. Reports are reasonably positive, but I do not think this is a big selling point, since similar services are available by many other routes.

Google TV is not in itself a DVR (Digital Video Recorder) but can control one.

All about the apps

Not too good so far then; but at some point you will be able to purchase apps from the Android marketplace – which is why attendees at the Adobe conference were given boxes. Nobody really knows what sort of impact apps for TV could have, and it seems to me that as a means of running apps – especially games – on a TV this unobtrusive device is promising.

Note that some TVs will come with Google TV built-in, solving the set top box issue, and if Google can make this a popular option it would have significant impact.

It is too early then to write it off; but it is a shame that Google has not learned the lesson of Apple, which is not to release a product until it is really ready.

Update: for a user’s perspective there is a mammoth thread on avsforum; I liked this post.