Last week I was in Las Vegas for my first visit to Amazon’s annual developer conference re:Invent. There were several announcements, the biggest being a new relational database service called RDS Aurora – a drop-in replacement for MySQL but with 3x write performance and 5x read performance as well as resiliency benefits – and EC2 Container Service, for deploying and managing Docker app containers. There is also AWS Lambda, a service which runs code in response to events.

You could read this news anywhere, but the advantage of being in Vegas was to immerse myself in the AWS culture and get to know the company better. Amazon is both distinctive and disruptive, and three things that its retail operation and its web services have in common are large scale, commodity pricing, and customer focus.

Customer focus? Every company I have ever spoken to says it is customer focused, so what is different? Well, part of the press training at Amazon seems to be that when you ask about its future plans, the invariable answer is “what customers demand.” No doubt if you could eavesdrop at an Amazon executive meeting you would find that this is not entirely true, that there are matters of strategy and profitability which come into play, but this is the story the company wants us to hear. It also chimes with that of the retail operation, where customer service is generally excellent; the company would rather risk giving a refund or replacement to an undeserving customer and annoy its suppliers than vice versa. In the context of AWS this means something a bit different, but it does seem to me part of the company culture. “If enough customers keep asking for something, it’s very likely that we will respond to that,” marketing executive Paul Duffy told me.

That said, I would not describe Amazon as an especially open company, which is one reason I was glad to attend re:Invent. I was intrigued, for example, that Aurora is a drop-in replacement for an open source product, and wondered whether it actually uses any MySQL code. That seems unlikely, since MySQL’s GPL license would require Amazon to publish its own code if it did; on the other hand, the InnoDB storage engine code at least used to be available under a dual license, so it is possible. When I asked Duffy, though, he said:

We don’t … at that level, that’s why we say it is compatible with MySQL. If you run the MySQL compatibility tool that will all check out. We don’t disclose anything about the inner workings of the service.

This of course touches on the issue of whether Amazon takes more from the open source community than it gives back.

Senior VP of AWS Andy Jassy

Someone asked Senior VP of AWS Andy Jassy, “what is your strategy of contributing to the open source ecosystem”, to which he replied:

We contribute to the open source ecosystem for many years. Xen, MySQL space, Linux space, we’re very active contributors, and will continue to do so in future.

That was it, that was the whole answer. Aurora, despite Duffy’s reticence, seems to be a completely new implementation of the MySQL API and builds on its success and popularity; could Amazon do more to share some of its breakthroughs with the open source community from which MySQL came? I think that is arguable; but Amazon is hard to hate since it tends to price so competitively.

Is Amazon worried about competition from Microsoft, Google, IBM or other cloud providers? I heard this question asked on several occasions, and the answer was generally along the lines that AWS is too busy to think about it. Again this is perhaps not the whole story, but it is true that AWS is growing fast and dominates the market to the extent that, say, Azure’s growth does not keep it awake at night. That said, you cannot accuse Amazon of complacency since it is adding new services and features at a high rate; 449 so far in 2014 according to VP and Distinguished Engineer James Hamilton, who also mentioned 99% usage growth in EC2 year on year, over 1,000,000 active customers, and 132% data transfer growth in the S3 storage service.

Cloud thinking

Hamilton’s session on AWS Innovation at Scale was among the most compelling of those I attended. His theme was that cloud computing is not just a bunch of hosted servers and services, but a new model of computing that enables new and better ways to run applications that are fast, resilient and scalable. Aurora is actually an example of this. Amazon has separated the storage engine from the relational engine, he explained, so that only deltas (the bits that have changed) are passed down for storage. The data is replicated 6 times across three Amazon availability zones, making it exceptionally resilient. You could not implement Aurora on-premises; only a cloud provider with huge scale can do it, according to Hamilton.
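To make the idea of passing down only deltas more concrete, here is a toy sketch in Python. It is not how Aurora is implemented (Amazon does not disclose that); it only illustrates the two points Hamilton made: the engine sends just the changed fields to storage, and every change lands on six copies spread over three zones. The zone names, the `StorageNode` and `Engine` classes, and the two-copies-per-zone layout are all my own assumptions for illustration, and deletions are ignored for simplicity.

```python
from dataclasses import dataclass, field

ZONES = ("az-a", "az-b", "az-c")  # hypothetical zone names
COPIES_PER_ZONE = 2               # assumed layout: 3 zones x 2 copies = 6


@dataclass
class StorageNode:
    """One storage copy: keeps the deltas it received and the resulting row."""
    zone: str
    log: list = field(default_factory=list)
    row: dict = field(default_factory=dict)

    def apply(self, delta: dict) -> None:
        self.log.append(delta)   # remember what was sent
        self.row.update(delta)   # materialise the change locally


def make_replicas() -> list:
    """Create six storage copies, two in each of the three zones."""
    return [StorageNode(zone) for zone in ZONES for _ in range(COPIES_PER_ZONE)]


def diff(old: dict, new: dict) -> dict:
    """Return only the fields that were added or whose value changed."""
    return {k: v for k, v in new.items() if k not in old or old[k] != v}


class Engine:
    """Stand-in for the relational engine, separate from the storage copies."""

    def __init__(self, replicas: list):
        self.replicas = replicas
        self.row = {}

    def write(self, new_row: dict) -> dict:
        delta = diff(self.row, new_row)
        for node in self.replicas:   # ship the delta, not the whole row
            node.apply(delta)
        self.row = dict(new_row)
        return delta


replicas = make_replicas()
engine = Engine(replicas)
first = engine.write({"id": 1, "name": "alice", "city": "Vegas"})
second = engine.write({"id": 1, "name": "alice", "city": "Seattle"})
```

In this sketch the second write sends only the `city` field to storage, yet all six copies end up holding the full, current row.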

Distinguished Engineer James Hamilton

Hamilton was fascinating on the subject of networking gear – the cards, switches and routers that push bits across the network. Five years ago Amazon decided to build its own, partly because it considered the commercial products to be too expensive. Amazon developed its own custom network protocol stack. It worked out a lot cheaper, he said, since “even the support contract for networking gear was running into 10s of millions of dollars.” The company also found that reliability increased. Why was that? Hamilton quipped about how enterprise networking products evolve:

Enterprise customers give lots of complicated requirements to networking equipment producers who aggregate all these complicated requirements into 10s of billions of lines of code that can’t be maintained and that’s what gets delivered.

Amazon knew its own requirements and built for those alone. “Our gear is more reliable because we took on an easier problem,” he said.

AWS is also in a great position to analyse performance. It runs so much kit that it can see patterns of failure and where the bottlenecks lie. “We love metrics,” he said. There is an analogy with the way the popularity of Google search improves Google search; it is a virtuous circle that is hard for competitors to replicate.

Closing reflections

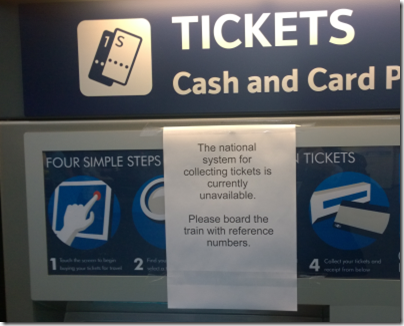

Like all vendor-specific conferences there was more marketing than I would have liked at re:Invent, but there is no doubting the excellence of the platform and its power to disrupt. There are aspects of public cloud that remain unsettling; things can go wrong and there will be nothing you can do but wait for them to be fixed. The benefits though are so great that it is worth the risk, though I would always advocate having some sort of plan B, whether off-cloud or a backup with another cloud provider, if that is feasible.