Microsoft’s Build developer conference starts today in Seattle.

Before it begins, it is worth noting that this Build feels different from previous events. The first Build was in 2011 and focused on Windows 8, released there in preview. Historically it has always been a Windows-focused event, though of course with some sessions on Microsoft’s wider platform.

Microsoft is changing, and the key document for those interested in the company’s direction is this one from 29th March 2018 – the most significant strategic move since the June 2015 “aligning engineering to strategy” announcement that dismantled the investment in Windows Phone.

In the March announcement CEO Satya Nadella explains that the Windows and Devices Group (WDG) has become the Experiences and Devices Group – no longer just Windows. Former WDG chief Terry Myerson is leaving Microsoft, while Rajesh Jha steps up to run the new team.

I regard this new announcement as a logical next step following the departure of Steven Sinofsky in November 2012 (the beginning of the end for Windows 8) and the end of Windows Phone announced in June 2015. Sinofsky’s vision was for Windows to be reinvented for a new era of computing devices based on touch and mobile. This strategy failed, for numerous reasons which this is not the place to re-iterate. Windows 10, by contrast, is about keeping the operating system up to date as a business workhorse and desktop operating system, a market that will slowly decline as other devices take over things that we used to do with PCs, but which will also remain important for the foreseeable future.

Windows, let me emphasise, is neither dead nor dying. We still need PCs to do our work. The always-enthusiastic Joe Belfiore is now in charge of Windows and we will continue to see a stream of new features added to the operating system, though increasingly they will work in tandem with new software for iOS and Android. However, Windows can no longer be an engine of growth at Microsoft.

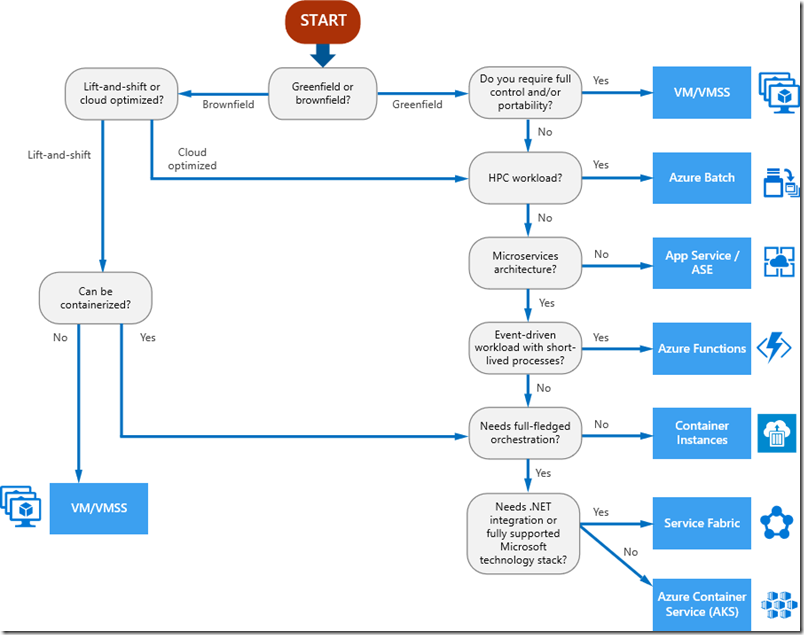

Microsoft has positioned itself to succeed despite the decline of the PC, primarily through cloud services. It has made huge investments in cloud infrastructure – that is, datacenters and connectivity – as well as in the software to make that infrastructure useful, from low-level server and network virtualisation to a large range of high level services (which is where the biggest profits can be made).

The company’s biggest cloud success is not Azure as such, but rather Office 365, now running a substantial proportion of the world’s business email, and building on that base with a growing range of collaboration and storage services. It is a perfect upsell opportunity, which is why the company is now talking up “Microsoft 365”, composed of Office 365, Windows 10, and Enterprise Mobility + Security (EMS).

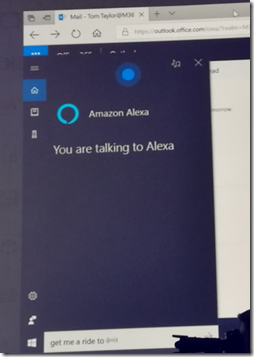

Nadella’s new mantra is “the intelligent cloud and the intelligent edge”, where the intelligent cloud is all things Office 365 and Azure, and the intelligent edge is all the computing devices that connect to it, whether as small as a Raspberry Pi running Azure IoT Edge (a small cross-platform runtime that connects to Azure services), or as large as Azure Stack (an on-premises cloud in a box that uses the Azure computing model).

We need an “intelligent edge” because it makes no sense at all to pump all of the vast and increasing amounts of data that we collect, from sensors and other inputs, directly into the cloud. That is madly inefficient. Instead, you process it locally and send to the cloud only what is interesting. Getting the right balance between cloud and edge is challenging and something which the industry is still working out. Nothing new there, you might think, as the trade-off between centralised and distributed computing has been a topic of endless debate for as long as I can remember.
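As a rough illustration of that idea, here is a minimal sketch of edge-side filtering: readings are examined locally, and only out-of-range values plus one compact summary are forwarded. The function name, the thresholds and the `send_to_cloud` callback are all hypothetical; this is not the Azure IoT Edge API, just plain Python standing in for whatever uplink a real device would use.

```python
from statistics import mean
from typing import Callable, Iterable


def filter_at_edge(
    readings: Iterable[float],
    low: float,
    high: float,
    send_to_cloud: Callable[[dict], None],  # hypothetical uplink, not a real SDK call
) -> None:
    """Forward out-of-range readings plus one summary; keep the rest local."""
    values = list(readings)

    # Only readings outside the expected band are "interesting" enough to upload.
    for index, value in enumerate(values):
        if value < low or value > high:
            send_to_cloud({"type": "anomaly", "index": index, "value": value})

    # One small summary replaces the full stream of normal readings.
    if values:
        send_to_cloud({
            "type": "summary",
            "count": len(values),
            "mean": mean(values),
            "min": min(values),
            "max": max(values),
        })


# Usage: a list stands in for the cloud endpoint.
uploaded = []
filter_at_edge([20.1, 20.3, 35.7, 20.2], low=15.0, high=30.0,
               send_to_cloud=uploaded.append)
print(len(uploaded))  # 2 messages: one anomaly and one summary
```

Four readings go in and two messages come out. Where to draw the line between what stays local and what is uploaded is exactly the balance that is still being worked out.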

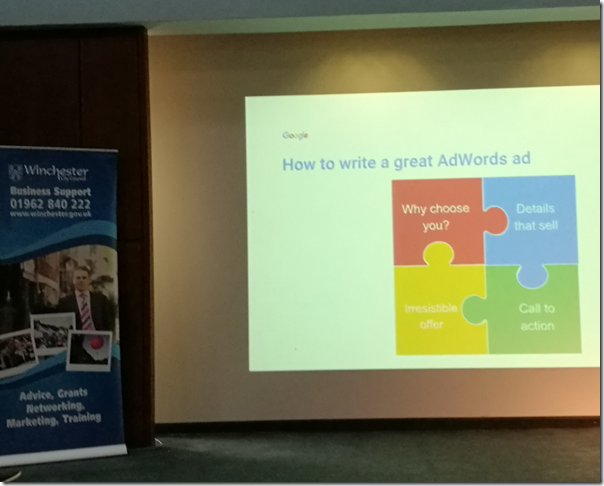

Coming back to Build, what does the above mean for developers? From Microsoft’s perspective, it is more strategic to have developers building for its cloud platform than for Windows itself; and if that means coding for Linux, iOS or Android, it matters little.

At the same time, Belfiore and his team are keen to keep Windows competitive against its rivals (Mac, Linux, Chromebook). Even more important from the company’s point of view is to get users off Windows 7 and onto Windows 10, which is more strategic in every way.

Just because Microsoft wants you to do something does not make it in your best interests. That said, if you accept that a cloud-centric approach is right for most businesses, Windows 10 does make sense in lots of ways. It is more secure and, increasingly, easier to manage. Small businesses can log in directly with Azure Active Directory, and larger organisations get benefits like Windows Autopilot, now beginning to roll out as the PC OEMs ready the hardware.

The future of UWP (Universal Windows Platform) is less clear. Microsoft has invested heavily in UWP and made it an integral part of new Windows features like HoloLens and Mixed Reality. Developers on the other hand still largely prefer to work with older frameworks like Windows Presentation Foundation (WPF), and the value of UWP has been undermined by the death of Windows Phone. In addition, you can now get Store access and the install/uninstall benefits of UWP via another route, the Desktop Bridge – which is why key consumer applications like Spotify and Apple iTunes have turned up in the Store.

Finally, Build did not sell out this year; however, I have heard that it has doubled in size, so these things are relative. Nevertheless, this is perhaps an indication that Microsoft still has work to do with its repositioning in the developer community. The challenge for the company is to keep its traditional Windows-focused developers on board, while also attracting new developers more familiar with non-Microsoft technologies. Anecdotally, I would say there are more signs of the former than the latter.