I’ve been mulling over the various Salesforce.com announcements here at Dreamforce, which taken together attempt to transition Salesforce.com from being a cloud CRM provider to becoming a cloud platform for generic applications. Of course this transition is not new – it began years ago with Force.com and the creation of the Apex language – and it might not be successful; but that is the aim, and this event is a pivotal moment with the announcement of database.com and the Heroku acquisition.

One thing I’ve found interesting is that Salesforce.com sees Microsoft Azure as its main competition in the cloud platform space – even though alternatives such as Google and Amazon are better known in this context. The reason is that Azure is perceived as an enterprise platform whereas Google and Amazon are seen more as commodity platforms. I’m not convinced that there is any technical justification for this view, but I can see that Salesforce.com is reassuringly corporate in its approach, and that customers seem generally satisfied with the support they receive, whereas this is often an issue with other cloud platforms. Salesforce.com is also more expensive of course.

The interesting twist here is that Heroku, which hosts Ruby applications, is more aligned with the Google/Amazon/open source community than with the Salesforce.com corporate culture, and this divide has been a topic of much debate here. Salesforce.com says it wants Heroku to continue running just as it has done, and that it will not interfere with its approach to pricing or the fact that it hosts on Amazon’s servers – though it may add other options. While I am sure this is the intention, the Heroku team is tiny compared to that of its acquirer, and some degree of change is inevitable.

The key thing from the point of view of Salesforce.com is that Heroku remains equally attractive to developers, small or large. While Force.com has not failed exactly, it has not succeeded in attracting the diversity of developers that the company must have hoped for. Note that the revenue of Salesforce.com remains 75%-80% from the CRM application, according to a briefing I had yesterday.

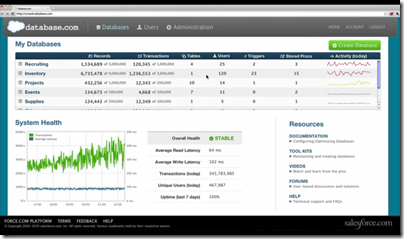

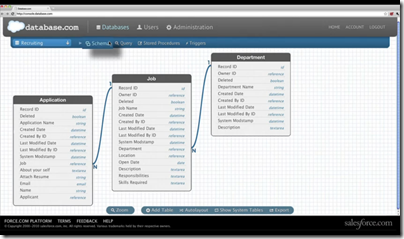

What is the benefit to Salesforce.com of hosting thousands of Ruby developers? If they remain on Heroku as it is at the moment, probably not that much – other than the kudos of supporting a cool development platform. But I’m guessing the company anticipates that a proportion of those developers will want to move to the next level, using database.com and taking advantage of its built-in security features which require user accounts on Force.com. Note that features such as row-level security only work if you use the Force.com user directory. Once customers take that step, they have a significant commitment to the platform and integrating with other Salesforce.com services such as Chatter for collaboration becomes easy.

The other angle on this is that the arrival of Heroku and VMForce gives existing Salesforce.com customers the ability to write applications in full Java or Ruby rather than being restricted to tools like Visualforce and the Apex language. Of course they could do this before by using the web services API and hosting applications elsewhere, but now they will be able to do this entirely on the Salesforce.com cloud platform.

That’s how the strategy looks to me; and it will fascinating to look back a year from now and see how it has played out. While it makes some sense, I am not sure how readily typical Heroku customers will transition to database.com or the Force.com identity platform.

There is another way in which Salesforce.com could win. Heroku knows how to appeal to developers, and in theory has a lot to teach the company about evangelising its platform to a new community.