I spent some time setting up RemoteApp and secure FTP for a small business which wanted better remote access without VPN. VPN is problematic for various reasons: it is sometimes blocked by public or hotel wifi providers, it is not suitable for poor connections, performance can be poor, and it means constantly having to think about whether your VPN tunnel is open or not. When I switched from connecting Outlook over VPN to connecting over HTTP, I found the experience better in every way; it is seamless. At least, it would be if it weren’t for the connection settings bug that changes the authentication type by itself on occasion; but I digress.

Enough to say that VPN is not always the best approach to remote access. There’s also SharePoint of course; but there are snags with that as well – it is powerful, but complex to manage, and has annoyances like poor performance when there are a large number of documents in a single folder. In addition, Explorer integration in Windows XP does not always work properly; it seems better in Vista and Windows 7.

FTP on the other hand can simply publish an existing file share to remote users. FTP can be horribly insecure; it is a common reason for usernames and passwords to passed in plain text over the internet. Fortunately Microsoft now offers an FTP service for IIS 7.0 that can be configured to require SSL for both password exchange and data transmission. I would not consider it otherwise. Note that this is different from the FTP service that ships with the original Server 2008; if you don’t have 2008 R2 you need a separate download.

So how was the setup? Pretty frustrating at the time; though now that it is all working it does not seem so bad. The problem is the number of moving parts, including your network configuration and firewall, Active Directory, IIS, digital certificates, and Windows security.

FTP is problematic anyway, thanks to its use of multiple ports. Another point of confusion is that FTP over SSL (FTPS) is not the same thing as Secure FTP (SFTP); Microsoft offers an FTPS implementation. A third issue is that neither of Microsoft’s FTP clients, Internet Explorer or the FTP command-line client, support FTP over SSL, so you have to use a third-party client like FileZilla. I also discovered that you cannot (easily) run a FTPS client behind an ISA Server firewall, which explained why my early tests failed.

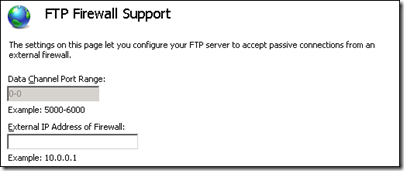

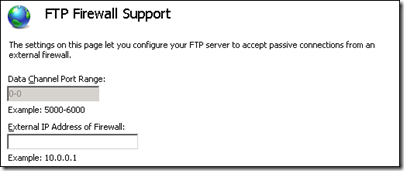

Documentation for the FTP server is reasonable, though you cannot find all the information you need in one place. I also found the configuration perplexing in places. Take this dialog for example:

The Data Channel Port Range is disabled with no indication why – the reason is that you set it for the entire IIS server, not for a specific site. But what is the “External IP Address of Firewall”? The wording suggests the public IP address; but the example suggests an internal, private address. I used the private address and it worked.

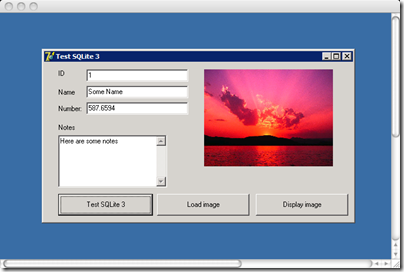

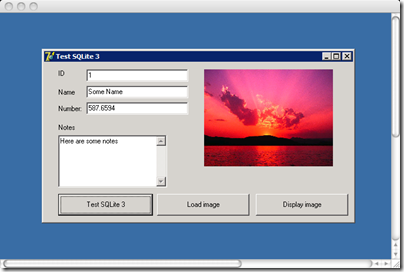

As for RemoteApp, it is a piece of magic that lets you remote the UI of a Windows application, so it runs on the server but appears to be running locally. It is essentially the same thing as remote desktop, but with the desktop part hidden so that you only see the window of the running app. One of the attractions is that it looks more secure, since you can give a semi-trusted remote user access to specified applications only, but this security is largely illusory because under the covers it is still a remote log-in and there are ways to escalate the access to a full desktop. Open a RemoteApp link on a Mac, for example, and you get the full desktop by default, though you can tweak it to show only the application, but with a blank desktop background:

Setup is laborious; there’s a step by step guide that covers it well, though note that Terminal Services is now called Remote Desktop Services. I set up TS Gateway, which tunnels the Terminal Server protocol through HTTPS, so you don’t have to open any additional ports in your firewall. I also set up TS Web Access, which lets users navigate to a web page and start apps from a list, rather than having to get hold of a .RDP configuration file or setup application.

If you must run a Windows application remotely, RemoteApp is a brilliant solution, though note that you need additional Client Access Licenses for these services. Nevertheless, it is a shame that despite the high level of complexity in the configuration of TS Gateway, involving a Connection Authorization Policy and a Resource Authorization Policy, there is no setting for “only allow users to run these applications, nothing else”. You have to do this separately through Software Restriction Policies – the document Terminal Services from A to Z from Cláudio Rodrigues at WTS.Labs has a good explanation.

I noticed that Rodrigues is not impressed with the complexity of setting up RemoteApp with TS Gateway and so on on Windows Server 2008 R2:

So years ago (2003/2004) we had all that sorted out: RDP over HTTPS, Published Applications, Resource Based Load Balancing and so on and no kidding, it would not take you more than 30 minutes to get all going. Simple and elegant design. More than that, I would say, smart design.

Today after going through all the stuff required to get RDS Web Access, RDS Gateway and RDS Session Broker up and running I am simply baffled. Stunned. This is for sure the epitome of bad design. I am still banging my head in the wall just thinking about how the setup of all this makes no sense and more than that, what a steep learning curve this will be for anyone that is now on Windows Server 2003 TS.

What amazes me the most is Microsoft had YEARS to watch what others did and learn with their mistakes and then come up with something clean. Smart. Unfortunately that was not the case … Again, I am not debating if the solution at the end works. It does. I am discussing how easy it is to setup, how smart the design is and so on. And in that respect, they simply failed to deliver. I am telling you that based on 15+ years of experience doing nothing else other than TS/RDS/Citrix deployments and starting companies focused on TS/RDS development. I may look stupid indeed but I know some shit about these things.

Simplicity and clean design are key elements on any good piece of software, what someone in Redmond seems to disagree.

My own experience was not that bad, though admittedly I did not look into load balancing for this small setup. I agree though: you have to do a lot of clicking to get this stuff up and running. I am reminded of the question I asked a few months back: Should IT administration be less annoying? I think it should, if only because complexity increases the risk of mistakes, or of taking shortcuts that undermine security.