Microsoft is busy improving Windows Subsystem for Linux (WSL), the compatibility layer that lets you run Linux on Windows. WSL is not an emulator. It accesses the same file system and you can launch Windows applications from WSL, and vice versa. It also runs actual Linux binaries.

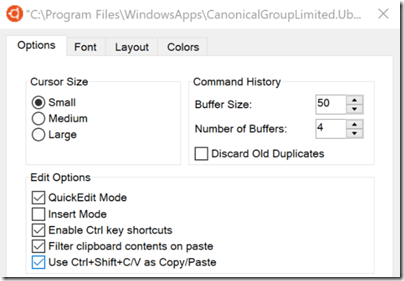

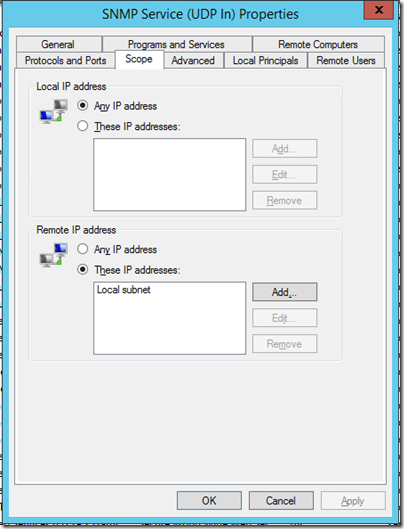

The latest announcements cover copy/paste between Linux and Windows, and a tabbed console. Both enhancements are in the skip-ahead insider version of Windows 10, which means they are unlikely to be in the one about to be released, currently known as Spring Creators Update (but rumoured to be getting a name change). In other words, you may have to wait around six months for this to be generally available.

These are not huge changes, but overall WSL is a big deal. Why is Microsoft doing it? One Betanews commenter says:

I still can’t figure out who this whole "Linux-on-Windows" thing is meant for. Developers who work on both platforms maybe? I guess it would be handy for people who just want to try out Linux before migrating to it, but that’s the last thing Microsoft would want to promote.

Microsoft has in fact stated the primary purpose of WSL:

This is primarily a tool for developers — especially web developers and those who work on or with open source projects. This allows those who want/need to use Bash, common Linux tools (

sed,awk, etc.) and many Linux-first tools (Ruby, Python, etc.) to use their toolchain on Windows.

There is a bit more to it. Developers are small in number relative to general users, but disproportionately influential, since they make the applications the rest of us run, and if the applications are not there or are inferior, the ecosystem starts to fail and the operating system declines.

I am not sure when it was that developers started to prefer Macs, but I noticed this trend many years ago, perhaps from the time that OS X moved to x86 (2006). This was not just about preferring the Mac user interface. In 2008 Apple opened up iOS, its mobile OS, to third-party applications, and a Mac was required for iOS development (this is still the case). It has long been relatively easy to run a Windows emulator on a Mac, but not vice versa, so for developers who want to support multiple target platforms from one computer, the Mac makes sense.

OS X / macOS is a Unix-like operating system, based on BSD (Berkeley Software Distribution). This means that moving between Linux and Mac is relatively smooth, from a developer perspective. The same tools are generally available. The internet runs mostly on Linux so the Mac has an advantage there as well.

In some cases this is more than just inconvenience. Windows has a long-standing issue with path lengths. MAX_PATH is defined as 260 characters. This limitation can be mostly removed if you have Windows 10 build 1607 or higher. Nevertheless, path issues have made Windows awkward for developing with Java, Node.js, and other languages or frameworks which typically use deeply nested directories. Open source developers perhaps did not care as much about these issues because they were mostly using Mac or Linux.

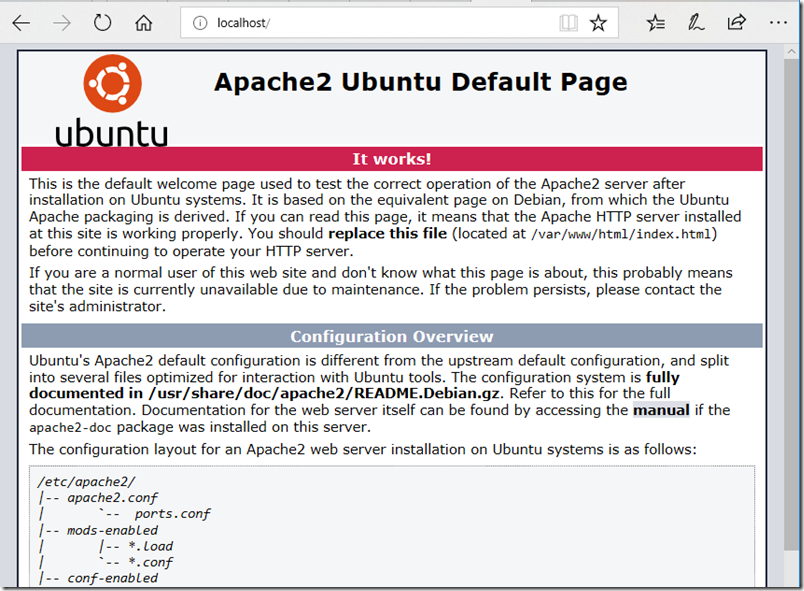

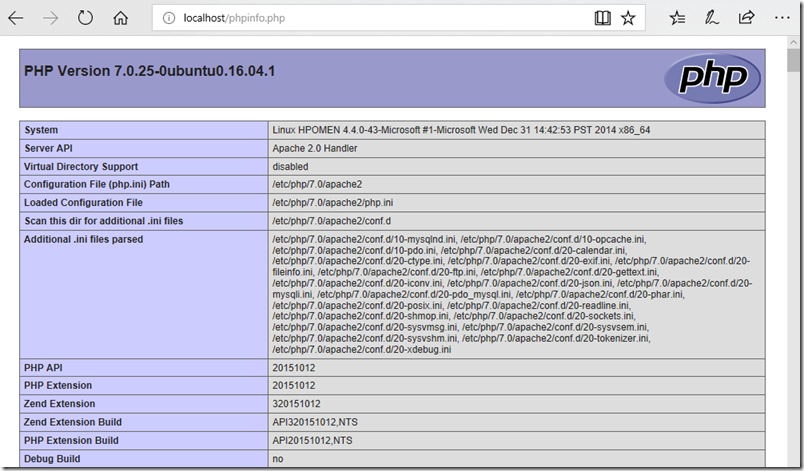

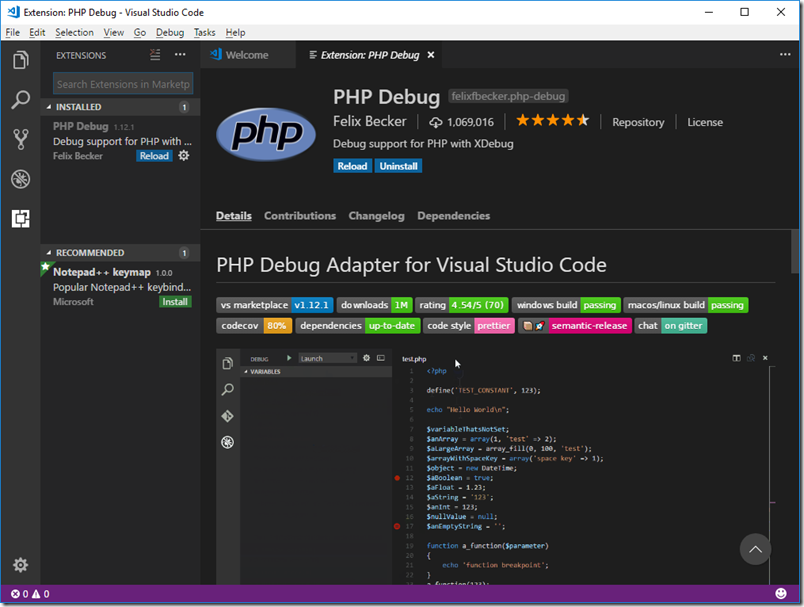

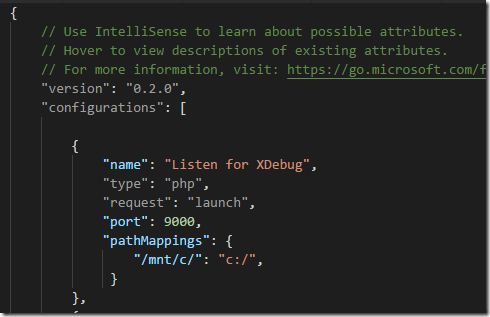

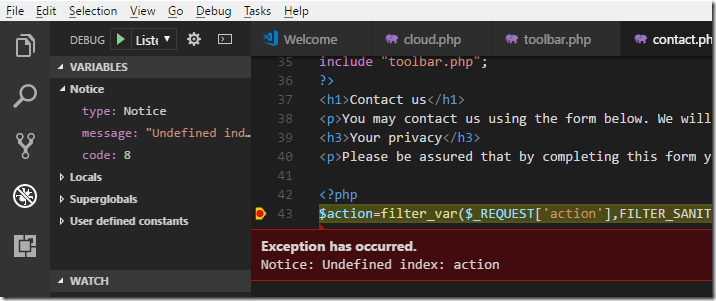

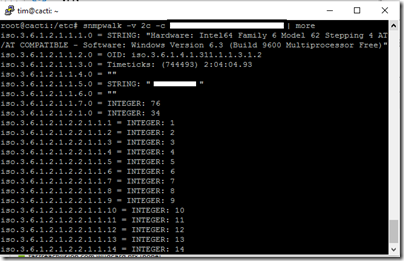

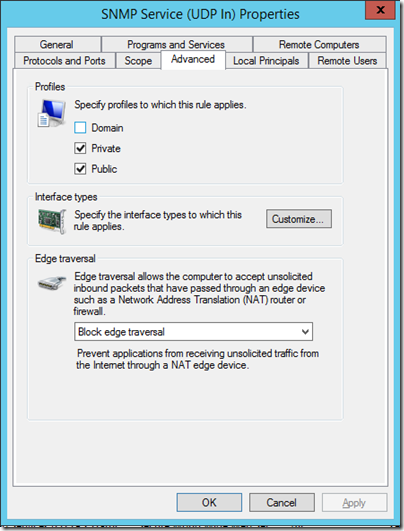

Microsoft has responded by improving Windows as a platform on which to develop applications. Visual Studio now targets Mac, iOS and Android as well as Windows. MAX_PATH has been alleviated as far as possible. WSL however goes much further. You can install and run Linux development tools and utilities such as gcc, perl, sed, awk, grep, wget, openssl, perl and more. There is no MAX_PATH issue. You can run the Linux build of Apache, PHP, MySQL and more. I used WSL to debug a PHP application and explained how here.

WSL is not perfect. Not everything is implemented. You can check the current issues here. Still, it is genuinely useful and mitigates the advantages of Mac or Linux for developers.

Microsoft has also added WSL to Windows Server. Why? The main focus here seems to be on administrators. There are times when it is handy to run a Linux command or script on Windows Server. It is not intended for production use as a server, though there is now support for background tasks; however it is still per-session so you would need to keep a user logged on in order to run, for example, a web server. More important, Microsoft has not designed WSL for production use as a server platform so it might not be as optimized or reliable as you require.

Implications of WSL

Where is this going? This is where it gets speculative. I will argue though that WSL is in part an admission of defeat. Windows remains an important development platform, but is now greatly outweighed by Unix-like platforms:

- Web/Internet applications

- iOS applications

- Android applications

Where Windows support is needed, developers have many cross-platform options to choose from, a popular choice today being Electron, based on Chromium (the open source foundation of Google Chrome) and Node.js.

Today there seems little chance of Windows winning back market share as a mobile operating system, and the importance of desktop applications looks destined for long slow decline.

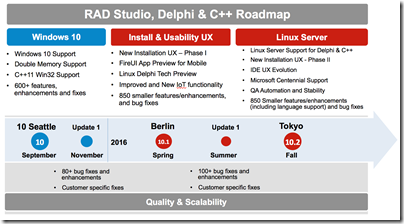

Windows Server remains a significant application platform, but Microsoft is focused more on driving developers to Azure cloud services than on Windows Server itself. SQL Server now runs on Linux, ASP.NET Core is cross-platform, and Azure has excellent support for Linux.

All of this leads me to think that WSL will continue to improve, perhaps to the point where production loads are supported on Windows Server, for example. Further, the ability to run Windows applications on Linux (which is more or less what happens in SQL Server for Linux) may become equally as important as the reverse.