I am more a developer than an IT administrator, but I sometimes find myself doing (and writing about) admin-type tasks. I am usually under time pressure, and I am increasingly irritated by annoyances that eat up precious time.

It seems to me that there is a hidden assumption in IT: usability is all-important when it comes to end users, but the admin can tolerate any amount of complexity and obscurity, provided that the end result is happy users with applications that work. The analogy, I suppose, is a motor car with an engineer who gets his hands grubby under the bonnet, and a driver who settles back in a comfortable seat and uses only clean, smooth and simple controls to operate the vehicle.

That said, any engineer will tell you that some vehicles are easier to work on than others, and some documentation (whether paper or electronic) more precise and helpful than others. No engineer minds getting oil on their hands, but wasting time because the service manual did not mention that you have to loosen the widget before you can remove the doodah is guaranteed to annoy.

A little detail that I’ve been pondering is the Internet Explorer Enhanced Security Configuration found in server versions of the Windows operating system. This is a specially locked-down configuration of IE that is designed to save you from getting malware onto your server.

That’s a worthy goal, and another good principle is not to browse the web at all on a server. Still, as we all know, the first thing you have to do on a Windows server is install patches and drivers, some of which are not available on Windows Update. In addition, not all servers are mission-critical; I find myself setting them up and tearing them down regularly to try out new software. So it may happen that you open IE to grab a patch from somewhere, and it is a frustrating experience. JavaScript does not work; files do not download. The usual solution is to add the target site to Trusted Sites, thereby giving the site more trust than it really needs. The sequence goes something like this:

1. Browse to vendor’s site to find driver.

2. Notice that nothing works, then click Tools > Internet Options > Security > Trusted Sites > Sites, and type the site’s address into the box for adding it to the zone.

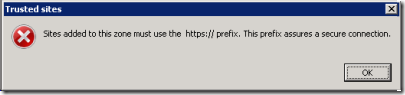

3. Click Add, forgetting to uncheck the box that says “Require server verification (https:)”.

4. Get a dialog telling you that sites added to this zone must use the https:// prefix.

5. Wonder briefly why IE could not spot that you were adding a site with an http: prefix before you clicked Add, rather than after.

6. Uncheck the box, repeat the Add, go back to IE, refresh the page so that scripts and the like will run, and probably lose your place in the site.

7. Find that the site now redirects to ftp://vendorsite.com and you have to repeat the process.

A minor issue, of course; but if you have gone through this sequence a few times, you will agree that it is annoying and not really thought through. Perhaps it comes from Windows Server having a GUI that it does not really need. On Linux, or even Server Core, you would use the fine wget utility, having found the URL of the file you need with the browser running alongside your terminal window.

I also realise there are many ways round it, ranging from something involving laptops and USB pen drives to installing Google Chrome, which takes only a few clicks, does not require admin rights, and happily downloads anything.

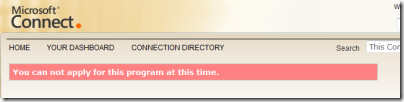

What prompts this little rant is not actually IE Enhanced Security Configuration, which is a familiar enemy, but a day spent figuring out the subtleties of Microsoft’s App-V. It is brilliant in concept but not the easiest thing to set up, thanks to verbose but unhelpful documentation; a dependency on SQL Server being configured in a particular way that is not clearly spelt out; and a lack of support for 64-bit Windows clients except in the beta of App-V 4.6, which is available from a Microsoft Connect URL that in fact reports it as unavailable. You know the kind of thing.

At times like this, the system seems downright hostile. Of course this does not matter, because administrators are trained for this and don’t mind, provided that the users are happy in the end.

But I don’t actually believe that. In Windows 7, Microsoft deliberately targeted the things that annoy users because, under pressure from Apple, it figured out that this was necessary in order to compete. The result is an OS that users generally like much better. The things that annoy admins are different, but they equally affect how much admins enjoy their work, and effort in this area is just as worthwhile, though less visible to end users.

In fairness, initiatives like the Web Platform Installer show that in some areas at least, Microsoft has learned this lesson. There is, however, plenty still to do, especially in somewhat neglected areas such as App-V.

My final reflection: when Microsoft came out with Windows NT Server back in 1993, I expect that being easier to use than Unix was one of the goals. Perhaps it was, then; but Windows soon developed foibles of its own that were as bad or worse.