When Microsoft first started talking about Roslyn, the .NET compiler platform, one of the features described was the ability to take some Visual Basic code and “paste as C#”, or vice versa.

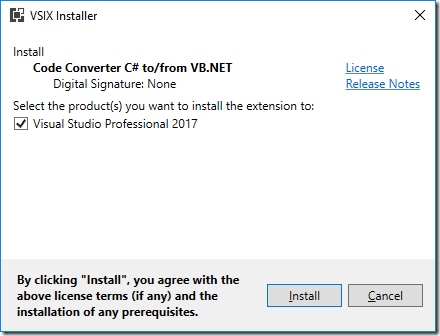

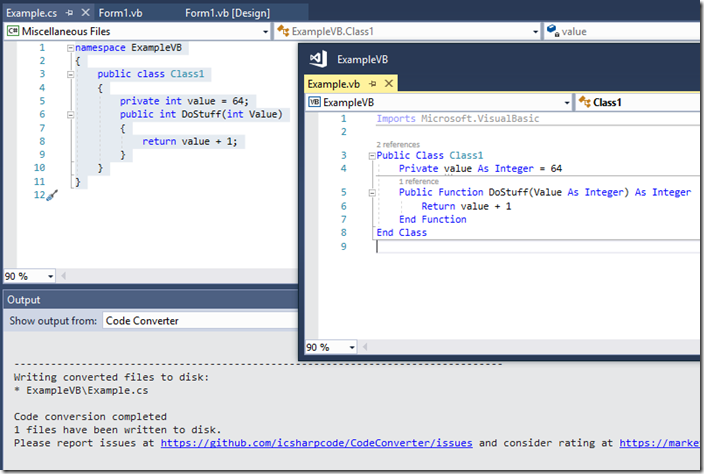

Some years later, I wondered how easy it is to convert a VB project to C# using Roslyn. The SharpDevelop team has a nice tool for this, CodeConverter, which promises to “Convert code from C# to VB.NET and vice versa using Roslyn”. You can also find this on the Visual Studio marketplace. I installed it to try out.

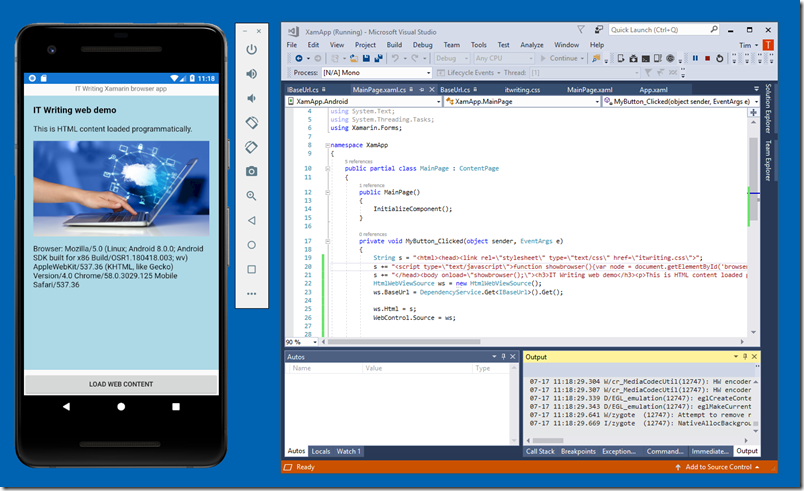

Why would you do this though? There are several reasons, the foremost of which is cross-platform support. The Xamarin framework can use VB to some extent, but it is primarily a C# framework. .NET Core was developed first for C#. Microsoft has stated that “with regard to the cloud and mobile, development beyond Visual Studio on Windows and for non-Windows platforms, and bleeding edge technologies we are leading with C#.”

Note though that Visual Basic is still under active development and history suggests that your Windows VB.NET project will continue running almost forever (in IT terms that is). Even Visual Basic 6.0 applications still run, though you might find it convenient to keep an old version of Windows running for the IDE.

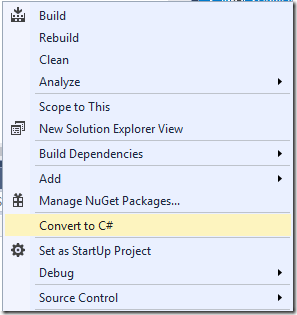

Still, if converting a project is just a right-click in Visual Studio, you might as well do it, right?

I tried it, on a moderately-size VB DLL project. Based on my experience, I advise caution – though acknowledging that the converter does an amazing job, and is free and open source. There were thousands of errors which will take several days of effort to fix, and the generated code is not as elegant as code written for C#. In fact, I was surprised at how many things went wrong. Here are some of the issues:

The tool makes use of the Microsoft.VisualBasic namespace to simplify the conversion. This namespace provides handy VB features like DateDiff, which calculates the difference between two dates. The generated project failed to set a reference to this assembly, generating lots of errors about unknown objects called Information, Strings and so on. This is quick to fix. Less good is that statements using this assembly tend to be more convoluted, making maintenance harder. You can often simplify the code and remove the reference; but of course you might introduce a bug with careless typing. It is probably a good idea to remove this dependency, but it is not a problem if you want the quickest possible port.

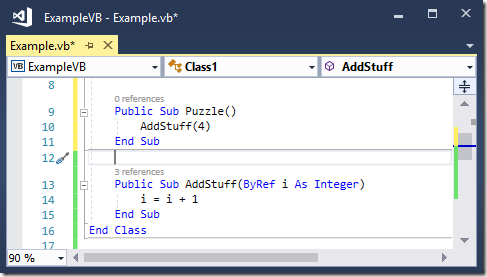

Moving from a case-insensitive language to a case-sensitive language is a problem. Visual Studio does a good job of making your VB code mostly consistent with regard to case, but that is not a fix. The converter was unable to fix case-sensitivity issues, and introduced some of its own (Imports System.Text became using System.text and threw an error). There were problems with inheritance, and even subtle bugs. Consider the following, admittedly ugly and contrived, code:

Here, the VB coder has used different case for a parameter and for referencing the parameter in the body of the method. Unfortunately another variable with the different case is also accessible. The VB code and the converted C# code both compile but return different results. Incidentally, the VB editor will work very hard to prevent you writing this code! However it does illustrate the kind of thing that can go wrong and similar issues can arise in less contrived cases.

C# is more strict than VB which causes errors in conversion. In most cases this is not a bad thing, but can cause headaches. For example, VB will let you pass object members ByRef but C# will not. In fact, VB will let you pass anything ByRef, even literal values, which is a puzzle! So this compiles and runs:

Another example is that in VB you can use an existing variable as the iteration variable, but in C# foreach you cannot.

Collections often go wrong. In VB you use an Item property to access the members of a collection like a DataReader. In C# this is omitted, but the converter does not pick this up.

Overloading sometimes goes wrong. The converter does not always successfully convert overloaded methods. Sometimes parameters get stripped away and a spurious new modifier is added.

Bitwise operators are not correctly converted.

VB allows indexed properties and properties with parameters. C# does not. The converter simply strips out the parameters so you need to fix this by hand. See https://stackoverflow.com/questions/2806894/why-c-sharp-doesnt-implement-indexed-properties if the language choices interest you.

There is more, but the above gives some idea about why this kind of conversion may not be straightforward.

It is probably true that the higher the standard of coding in the original project, the more straightforward the conversion is likely to be, the caveat being that more advanced language features are perhaps more likely to go wrong.

Null strings behave differently

Another oddity is that VB treats a String set to null (Nothing) as equivalent to an empty string:

Dim s As String = Nothing

If (s = String.Empty) Then ‘TRUE in VB

MsgBox(“TRUE!”)

End If

C# does not:

String s = null;

if (s == String.Empty) //FALSE in C#

{

//won’t run

}

Same code, different result, which can have unfortunate consequences.

Worth it?

So is it worth it? It depends on the rationale. If you do not need cross-platform, it is doubtful. The VB code will continue to work fine, and you can always add C# projects to a VB solution if you want to write most new code in C#.

If you do need to move outside Windows though, conversion is worthwhile, and automated conversion will save you a ton of manual work even if you have to fix up some errors.

There are two things to bear in mind though.

First, have lots of unit tests. Strange things can happen when you port from one language to another. Porting a project well covered by tests is much safer.

Second, be prepared for lots of refactoring after the conversion. Aim to get rid of the Microsoft.VisualBasic dependency, and use the stricter standards of C# as an opportunity to improve the code.