I am a duplicate bridge player in my spare time and enjoyed playing in my local club once or twice a week. That was before COVID-19 and then, in March this year, lockdown. Bridge clubs were no longer able to meet. There are more important things in the world; but bridge is both a lot of fun and a welcome distraction from weightier matters, and my thoughts soon turned to what we could do to continue playing in these new circumstances.

The answer was to play online; but while there are plenty of ways to play bridge online, the existing systems were not designed with the idea of being a way for bridge clubs to meet in a new context. If anything, the reverse is true: online bridge site were designed for people who could not easily get to a club or wanted to play at any time with whoever else happened to be available. Clubs like my own, by contrast, wanted to replicate their face-to-face meetings with an online equivalent. A further complication back in March was that the biggest online bridge site, called Bridgebase, was immediately overloaded and declared that it was unwilling to allow new people to qualify as directors, people allowed to run online bridge sessions.

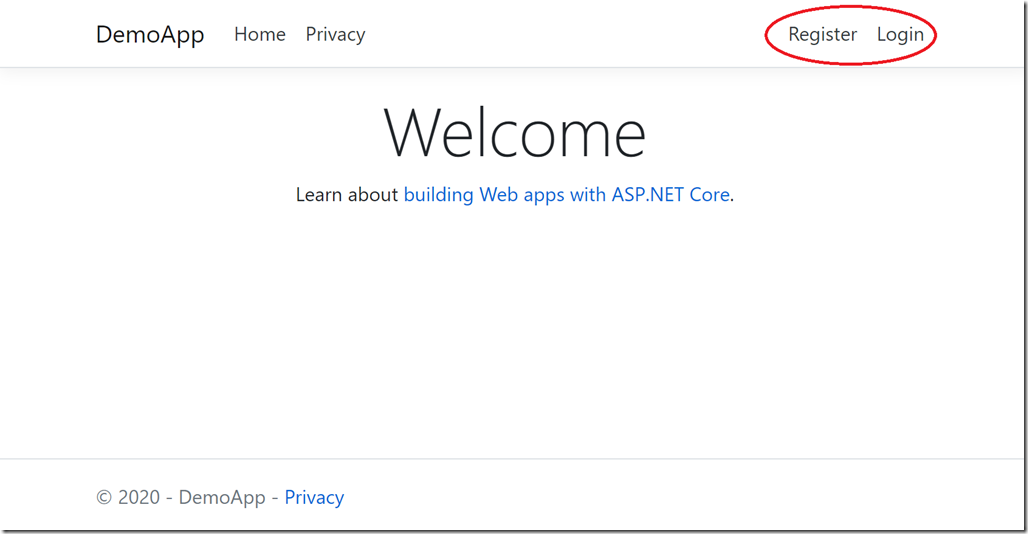

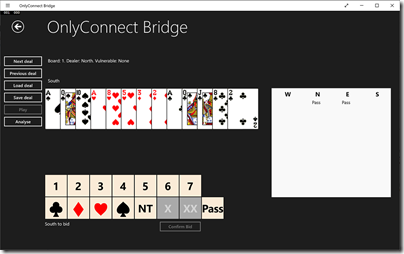

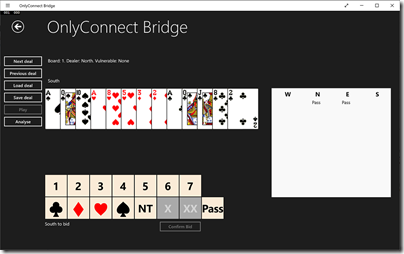

My immediate instinct was to build a new site for playing bridge. I was not quite starting from scratch. Back in the early days of Windows 8, I started work on a bridge game for Microsoft’s new and as it turned out ill-fated platform. I had got some way with it; I had created a bridge engine that understood about cards and hands and tricks and shuffling and scoring and all the various elements that go into playing bridge. It was written in C# and what is now UWP XAML. It is designed of course for a solo player. Here is the bidding screen:

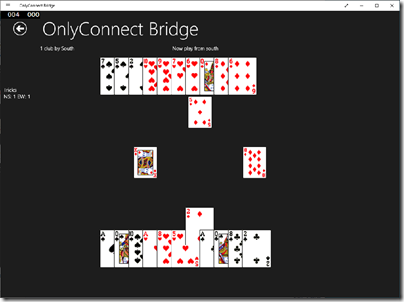

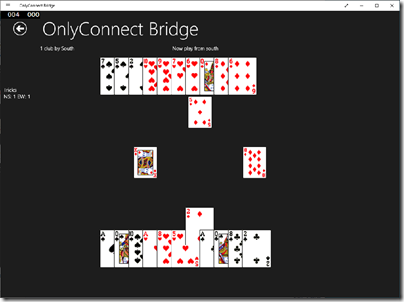

and the play screen:

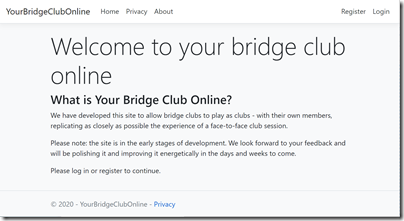

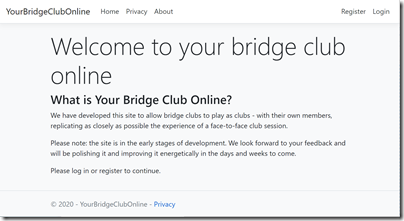

This is how it looks on Windows 10; it looked a bit better on Windows 8 though it would not win any prizes for design. My software could play bridge though; the reason I never finished it was that I never cracked getting the AI working. But for human to human play that did not matter. A weekend or two coding, I thought, and I could have a website up and running so our club could play bridge online. I made an immediate start, registering the domain name YourBridgeClubOnline.co.uk.

Well, three months later and here we are.

It is, I have to say, still under development. But it works and we have been able to play bridge again, as a club.

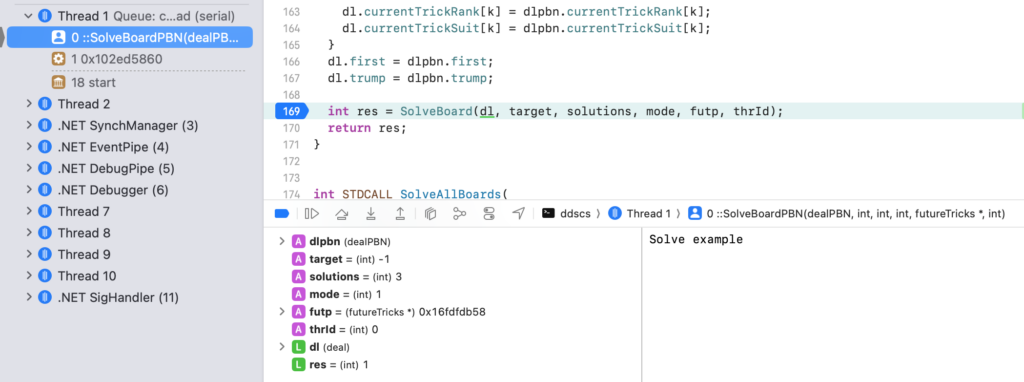

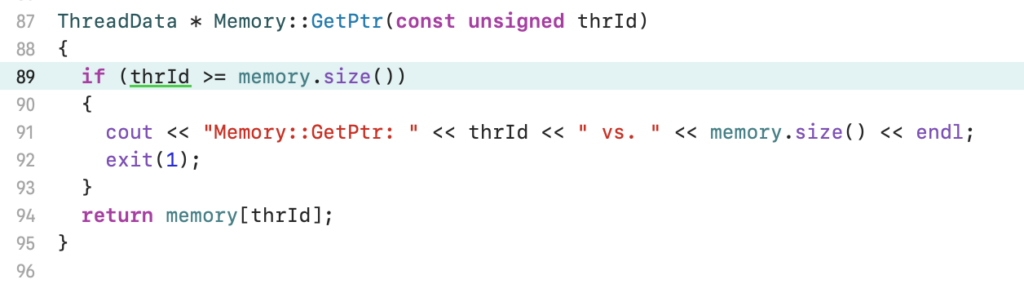

What took you so long? Ha! Much of my old bridge engine code remains untouched and has proved useful; it all runs fine on .NET Core. Even the (useless) AI has been handy, as I can test the mechanics of play without involving others. But I had, of course, wildly underestimated the problem of converting a game for solo play on Windows, to a multi-player web application. There is much to think about:

The UI. I am not a designer (I am sure you can tell) but spent ages puzzling over how to get a workable user interface in the browser for everything from tablets to desktops. Not smartphones yet but it is coming. I decided early on to take a view on compatibility. No Internet Explorer. JavaScript fetch API is required. When time is against you, it is easier to say, just use another browser, than to waste too much time supporting old browsers.

Messaging – both the API kind, and the chat kind. I am using C#, ASP.NET Core and SignalR. In general it works well. SignalR uses WebSockets as first preference, but falls back to Server Sent Events or long polling where necessary. In my first experiments I did my own polling and switching to SignalR was a great relief.

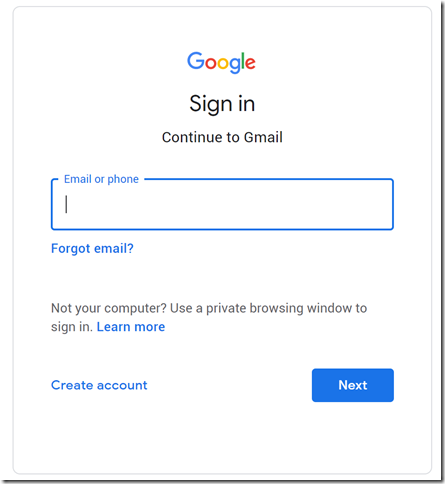

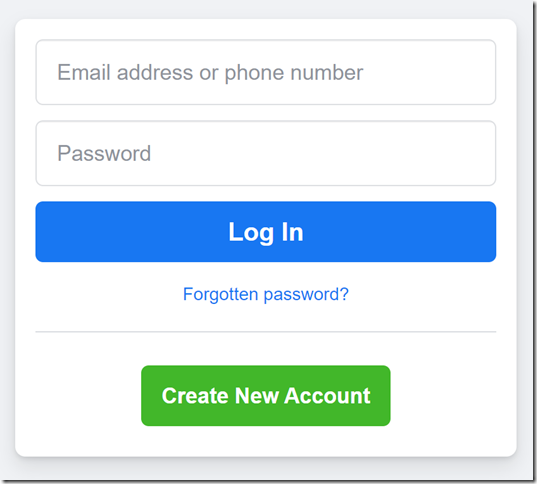

Registration and login. I am using the stuff that comes in the box, ASP.NET Core Identity. It has saved me a ton of work. It’s a bit annoying and not too well documented. I don’t really like using GUIDs for the primary key, for example, and I believe there is way to avoid it, but it isn’t top priority when you are going for Minimum Viable Product.

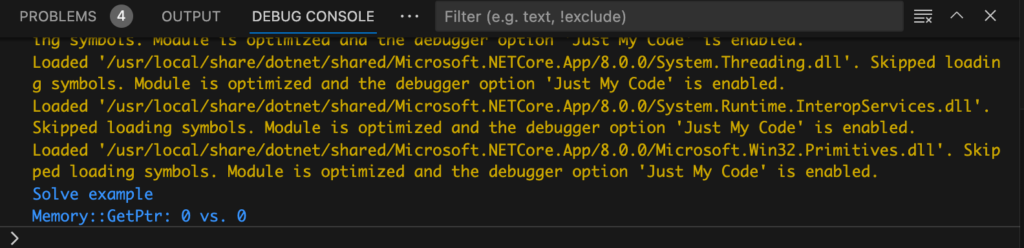

JavaScript. I’ve written tons of it and I don’t even like the language. I have a new respect for it though. The thing is, it is very fast and there is nothing you cannot do. The worst thing is the friction of doing some debugging in the browser, and some in Visual Studio. I am thinking of switching to VS Code for development since it works nicely with ASP.NET Core and is better for JavaScript than Visual Studio.

Scoring. My Windows software could score a hand of bridge. But duplicate is different; you have to compare the scores with others who played the same hands and work out the percentages, then export the results to standard formats for display on club websites and submission to the English Bridge Union. It was more work than I had expected and I am not done yet; the system only understands Pairs at the moment, not Teams (a different way of scoring).

Directing. Someone has to manage an online bridge session, settle any arguments, and fix errors like cards played by accident. It all needs coding and there was nothing like it in the Windows version.

Movements. Imagine you have 28 people playing bridge (or 14 pairs). They need to all play the same hands, but never play the same hand twice, and it has to be so arranged that each pair plays against other pairs in a defined sequence so it is balanced and fair. We call this the movement. Online, you have a bit more flexibility because you don’t need to share physical cards: everyone can play the same hand at the same time if you like. It is still quite fiddly though, and I did not do any of this in the old Windows version. I saved some time by writing an import function to enable re-use of movements made for EBUScore, a widely used scoring and bridge session management application. There is more to do though.

Claims. This is where, half way through the hand, a player says, “There’s no point in playing on, I’m obviously going to win all the remaining tricks.” A trick is a sequence of four cards played one from each hand, which is won by one of the pairs. This statement is called a claim, and has to be agreed by the other players. Getting this working was more difficult than I had expected – because built into my bridge engine was the idea that you could score by counting the tricks each side had won. But claimed tricks are never played. With hindsight, I should have allowed for this from the beginning.

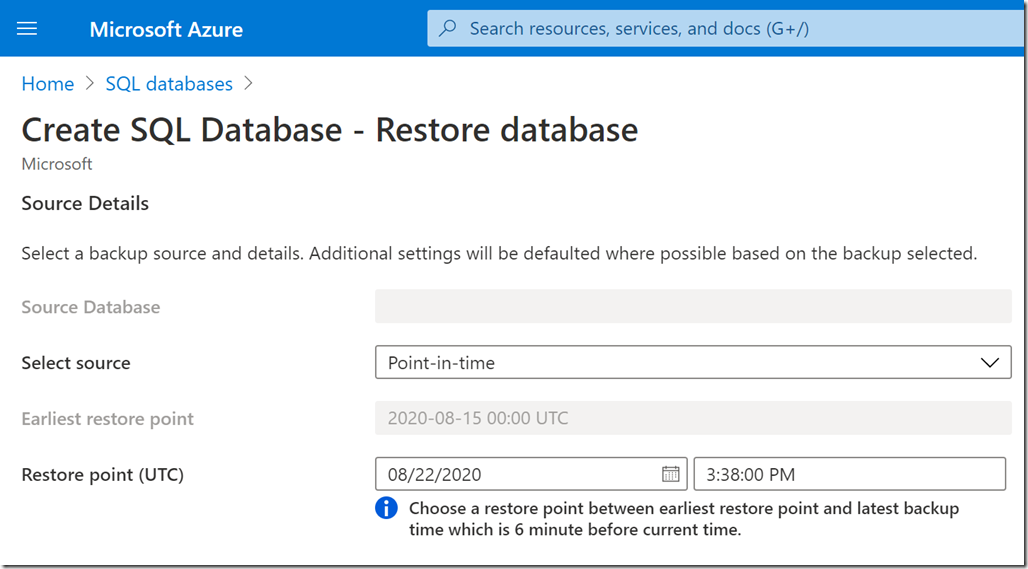

Database. Every detail of play has to be stored on the server. I am using Dapper and SQL Server currently, though it is possible that PostgreSQL would work just as well. I started with Entity Framework Core, still there as it is used by ASP.NET Core Identity, but I am happier with Dapper.

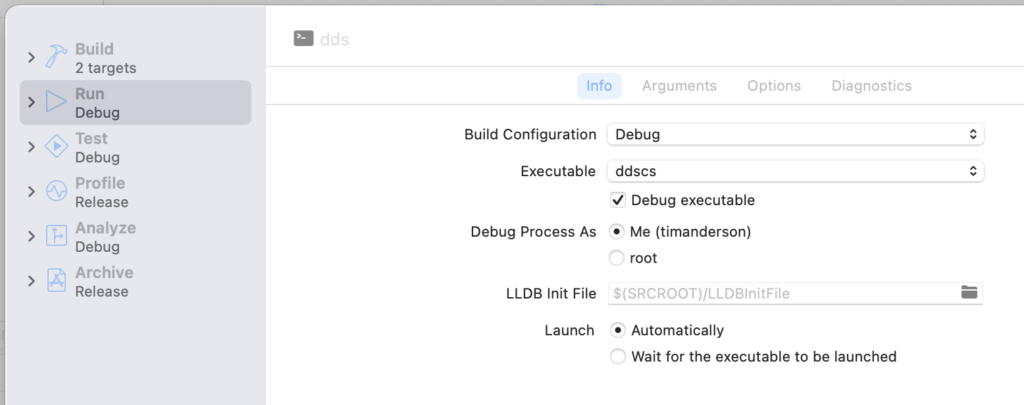

Things that worked well

Three months is longer than I had thought it would take to get to a playable system, but I suppose as a spare time project it is not too bad. It would not be possible without the likes of ASP.NET Core and Dapper and SignalR doing so much for you. C# is a delight for coding. I am also using an Azure App Service for all this test and development and that has worked well. I am deploying to a Linux container of course; but the nice thing about App Service is that it will scale to a considerable extent without the hassle of Kubernetes. If the project succeeds and needs to scale up, there is an Azure SignalR service ready and waiting. I was nevertheless interested to see that AWS now offers .NET Core on Elastic Beanstalk, complete with some nice Visual Studio integration. Trying it there would be an interesting experiment, though I’m not sure AWS is so savvy about SignalR.

Open Source?

Could this have been done quicker by making it open source and seeking collaborators early on? Will it become open source? I need help for sure, though I also feel the code needs some cleaning up before it is fit to share more widely. You will recall though that I had started out thinking that it would be a small matter to convert my solo bridge game to an online multiplayer web application. I figured it would be better to get something working and then ask for help. But I am open to offers! Note: this is not a commercial project.

Rewarding

Most of the software projects I have been involved in have been business applications. Bridge is a lot more fun. I do see software development as a creative act. I recall starting work on the bridge game back in 2011 (I think); starting a new blank project in Visual Studio and thinking, hmm, I had better write a class to represent a pack of cards. From that beginning I ended up with an application that could play bridge, after a fashion, and now one that multiple people can play concurrently. It is rewarding and I will not regret the time spent on it, irrespective of how much actual use it gets.