I assisted a small company in migrating from Small Business Server 2011 to Office 365.

SBS 2011 was the last full edition of Small Business Server, with Exchange included. It still works fine but is getting out of date, and Microsoft has no replacement other than full Exchange and multiple servers at far greater cost, or Office 365.

There must be hundreds of thousands of businesses who have done this or will do it, and you would expect Microsoft’s procedures to be pretty smooth by now. I have done this before, but not for a couple of years, so was interested to see how it now looks.

The goal here is to migrate email (I am not going to cover SharePoint or other aspects of migration here) in such a way that no email or other Oulook data in lost, and that users have a smooth transition from using an internal mail server to using Office 365.

What you do first is to set up the Office 365 tenant and add the email domain, for example yourbusiness.co.uk. You do not complete the DNS changes immediately, in particular the MX record that determines where incoming mail is sent.

Now you have a few choices. In the new Office 365 Admin center, in the Users section, there is a section called Data Migration, which has an option for Exchange. “We will … guide you through the rest of the migration experience,” it says.

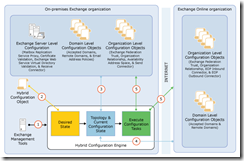

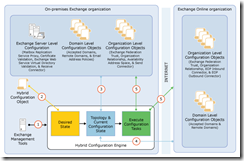

If you select Exchange you are offered the Office 365 Hybrid Configuration Wizard. You do not want to use this for Small Business Server. It sets up a hybrid configuration with Exchange Federation Trust, for a setup where Office 365 and on-premises Exchange co-exist. Click on this image if you want to know more. I have no idea if it would work but it is unnecessarily complicated.

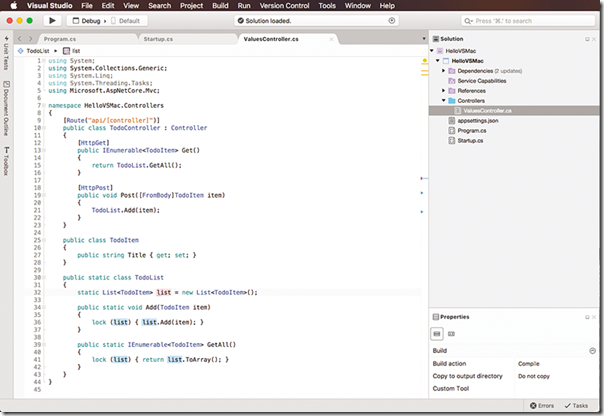

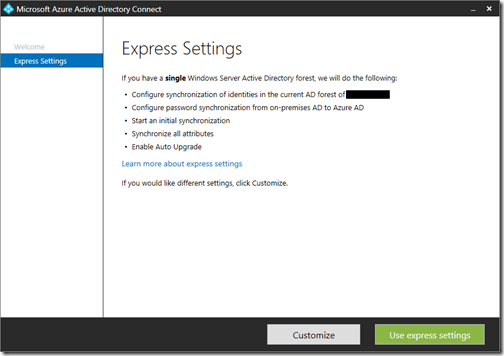

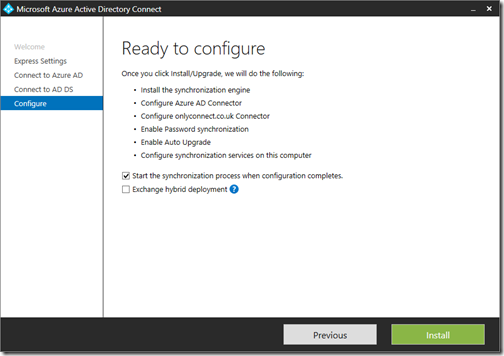

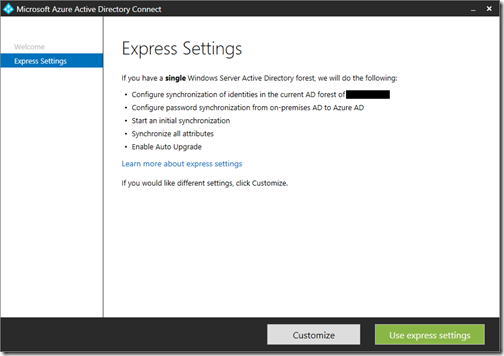

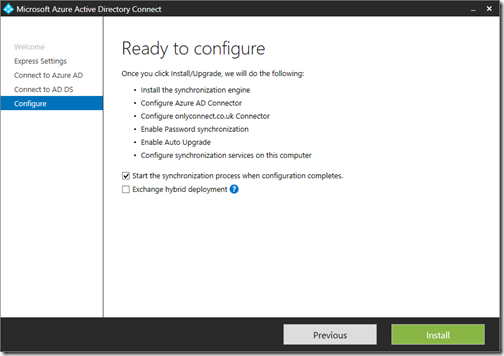

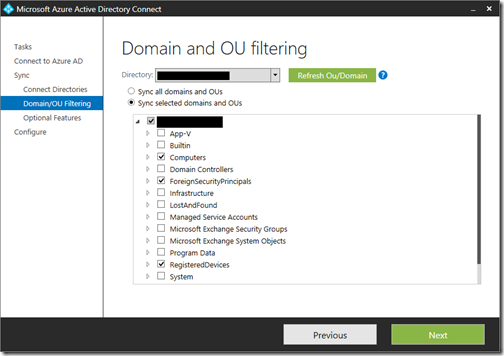

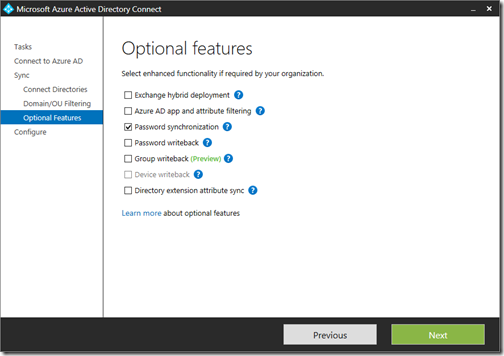

No, what you should do is go down the page and click “Exchange Online migration and deployment guidance for your organisation”. Now we have a few options, the main relevant ones being Cutover and Hybrid 2010. Except you cannot use Hybrid 2010 if you have a single-server setup, because this requires directory synchronization. And you cannot install DirSync, nor its successor Azure AD Connect, on a server that is a Domain Controller.

So in most SBS cases you are going to do a Cutover migration, suitable for “fewer than 2000 mailboxes” according to Microsoft. The SBS maximum is 75 so you should be fine.

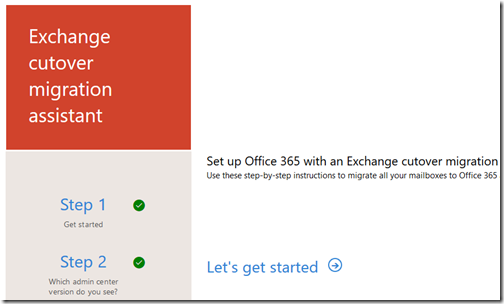

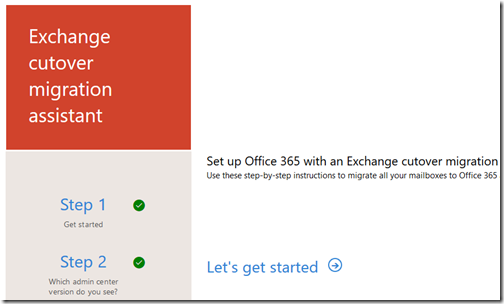

Click Cutover Migration and you get to a nice migration assistant with 15 steps. Let’s get started.

So I did, and while it mostly works there are some gotchas and I am not impressed with the documentation. It has a combination of patronising “this is going to be easy” instructions with links that dump you into other documents that are more general, or do not cover your exact situation, particularly in the case of the mysterious “Create mail-enabled users” of which more below.

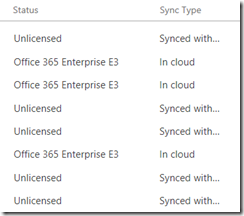

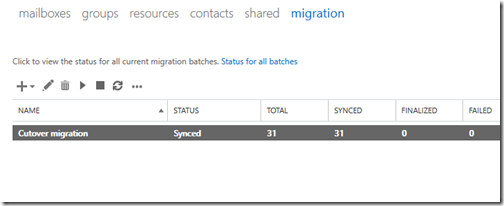

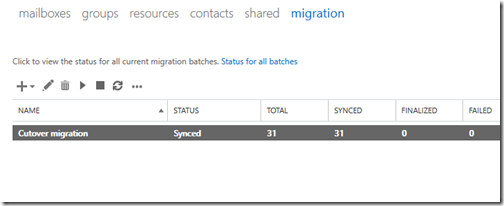

Steps 1-5 went fine and than I was on step 6, Migrate your mailboxes. This guides you to the Migration Batch tool. This tool connects to your SBS Exchange, creates Office 365 users for each Exchange mailbox if they do not already exist, and then copies all the contents of those mailboxes to the new mailboxes in Office 365.

While this tool is useful, I found I had what seemed to me obvious questions that the documentation, such as it is, does not address. One is, what do you do if one or more mailboxes fail to sync, or sync with errors reported, which is common. The document just advises you to look at the log files. What if you stop and then resume a migration batch, what actually happens? What if you delete and recreate a migration batch (as support sometimes advises), do you get duplicate items? Do you need to delete the existing users? How do you get to the Finalized state for a mailbox? It would be most helpful if Microsoft would provide detailed documentation for this too, but if it does, I have not found it.

The migration can take a long time, depending of course on the size of your mailboxes and the speed of your connection. I was lucky, with just 11 users it tool less than a day. I have known this tool to run for several days; it could take weeks over an ADSL connection.

Note that even when all mailboxes are synced, mail is still flowing to on-premises Exchange, so the sync is immediately out of date. You are not done yet.

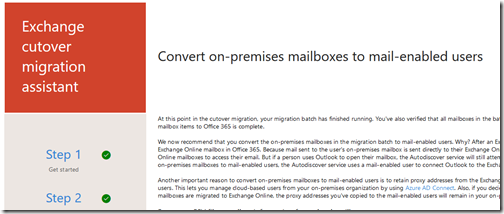

The mysteries of converting to Mail-Enabled Users

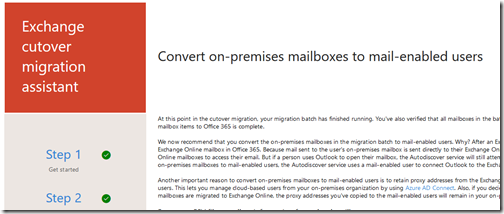

I got to Synced after only a few hiccups. Now comes the strange bit. Step 7 is called Create mail-enabled users.

There are numerous problems with this step. It does not fully explain the implications of what it describes. It does not actually work without tweaking. The documentation is sloppy.

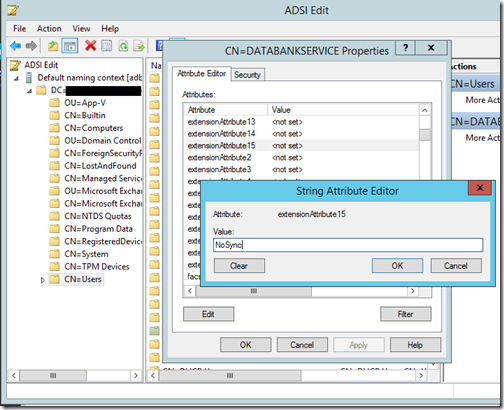

Do you need to do this step at all? No, but it does have some advantages. What it does is to remove (actually disconnect rather than delete) the on-premises mailbox from each user, and set the TargetAddress attribute in Active Directory, which tells Exchange to route mail to the TargetAddress rather than trying to deliver it locally. The TargetAddress, which is only viewable through ADSI Edit or command-line tools, should be set to the unique Office 365 email address for each users, typically username@yourbusiness.onmicrosoft.com, rather than the main email address. If I have this right (and it is not clearly explained), this means that any email that happens to arrive at on-premises Exchange, either because of old MX records, or because the on-premises Exchange is hard-coded as the target server, then it gets sent to Office 365.

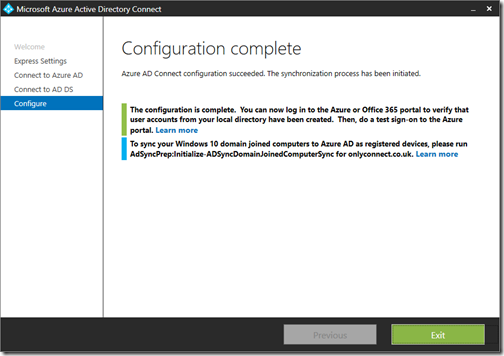

Update: there is one scenario where you absolutely DO need this step. This is if you want to use ADConnect to synch on premise AD with Office 365, after doing the mail migration. See this thread and the comment:

“To covert on-premises mailboxes to mail-enabled users is required. When you convert on-premises mailboxes to mail-enabled users (MEUs), the proxy addresses and other information from the Office 365 mailboxes are copied to the MEUs, which reside in Active Directory in your on-premises organization. These MEU properties enable the Directory Synchronization tool, which you activate and install in step 3, to match each MEU with its corresponding cloud mailbox.”

The documentation for this step explains how to create a CSV file with the primary email addresses of the users to convert (this works), and then refers you to this document for the PowerShell scripts to complete the step. You will note that this document refers to Exchange 2007, though the steps also apply to Exchange 2010, and to a Staged Exchange migration, when you are doing a Cutover. Further, the scripts are embedded in the text, so you have to copy and paste. Further, the scripts do not work if you try to follow the instructions exactly. There are several issues.

First, this step seems to be in the wrong place. You should change the MX records to route mail to Office 365, and then leave an interval of at least a few hours, before doing this step. The reason is that once you convert SBS users to mail-enabled users, the Migration tool will not be able to re-sync their mailbox. You must complete a sync immediately before doing the conversion. The only way I know to force a sync is to stop and then resume the Migration Batch. Check that all mailboxes are synced, which only takes a few minutes, before doing the conversion. You may still lose an email if it arrives in the window between the last sync and the conversion, which is why you should change the MX records first.

Second, if you run ExportO365UserInfo.ps1 in the Small Business Server Exchange Shell, it will not work, since “By default, Import-PSSession does not import commands that have the same name as commands in the current session.” This means that when the script runs mailbox commands they run against the local Exchange server rather than Office 365, unless you use the –AllowClobber parameter. I found the solution was to run this script on another machine.

Third, the script still does not work, since, in my case at least, the Migration Batch did not populate the onmicrosoft.com email address for imported users. I fixed this with a handy script.

Note that the second script, Exchange2007MBtoMEU.ps1, must be run in the SBS server Exchange Shell, otherwise it will not work.

Bearing in mind all these hazards, you might think that the whole, not strictly necessary, step of converting to mail-enabled users is not worth it. That is perfectly reasonable.

Finishing the job

Bearing in mind the above, the next steps do not altogether make sense. In particular, step 11, which says to make sure that:

“Office 365 mailboxes were synchronized at least once after mail began being sent directly to them. To do this, make sure that the value in the Last Synced Time box for the migration batch is more recent than when mail started being routed directly to Office 365 mailboxes.”

In fact, you will get errors here if you followed Step 7 to create mail-enabled users. Did anyone at Microsoft try to follow these steps?

Still, I have to say that the outcome in our case was excellent. Everything was copied correctly, and the Migration Batch tool even successfully replicated fiddly things like calendar permissions. The transition was smooth.

Note that you should not attempt to point an existing Outlook profile at the Office 365 Exchange. Instead, create a new profile. Otherwise I am not sure what happens; you probably get thousands of duplicate items.

One puzzle. I did not spot any duplicates in the synced mailboxes, but the item count increased by around 20% compared to the old mailboxes, as reported by PowerShell. Currently a mystery.

Closing words

I am puzzled that Microsoft does not have any guidance specifically for Small Business Server migrations, given how common these are, as well as by the poor and inaccurate documentation as noted above.

There are perhaps two factors at play. One is that Microsoft expects businesses of any size to use partners for this kind of work, who specialise in knowing the pitfalls. Second, the company seems so focused on enterprises that the needs of small businesses are neglected. Note, for example, the strong push for businesses to use the Azure AD Connect tool even though this requires a multi-server setup. There is a special tool in Windows Server Essentials, but this does not apply for businesses using a Standard edition of Small Business Server.

Finally, note that there are third-party tools you can use for this kind of migration, in particular BitTitan’s MigrationWiz, which may well be easier though a small cost is involved.