I’m at the QCon event in London, a multi-vendor conference aimed primarily at enterprise developers and architects.

Adam Tornhill speaks at QCon London 2017

A few notes on day one. Alasdair Allan gave a keynote on security and the internet of things. It was an entertaining and disturbing résumé of all that is wrong with the mad rush to connect everything to the internet, though short on answers. Our culture has to change so that organisations such as hotels, toy manufacturers, appliance vendors and even makers of medical equipment take security seriously, but it is not clear how this will come about unless so many bad things happen that customers start to insist on it.

Michael Feathers spoke on strategic code deletion, part of a track on “Dark code: the legacy/tech debt dilemma.” This was an excellent session; code is added to projects more often than it is removed, and lack of hygiene in this regard has risks including security, reliability and performance. But discovering which code is safe to remove is not always trivial, and Feathers explored some of the nuances and suggested some techniques.

Steve Faulkner gave a session on serverless JavaScript, or more specifically, using Amazon Web Services (AWS) Lambda and API Gateway. Faulkner is Director of Platform Engineering at Bustle, a busy content site based in the USA, and he said that the API Gateway was the piece that made Lambda viable for them. In a nutshell, moving from EC2 VMs to Lambda has yielded both financial savings and easier management. The only downside is performance; each call to a Lambda function takes a minimum of 100ms whereas the same function on a VM might take 20ms. In the end this is not critical, as performance remains satisfactory.

Faulkner said that AWS is ahead of its competitors (Microsoft, Google and IBM were mentioned) but when pressed said that both Microsoft and Google offered strong alternatives. Microsoft’s Azure Functions are spoilt by the need to specify a maximum scale, rather than scaling automatically, but its routing solution is in some ways ahead of AWS, he said. Google’s Functions will be great when out of beta.

Adam Tornhill spoke on “A Crystal Ball to prioritise Technical Debt”, another session in the dark code track. This was my favourite of the day. Tornhill presented a relatively simple way to discover what code you should refactor now in order to avoid future issues. His method is based on looking for the “hotspots” in your projects: files with many lines of code (a way of measuring complexity) and many commits (suggesting high importance and activity). For more detail and some utilities see Tornhill’s blog.
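To make the hotspot idea concrete, here is a minimal sketch in Python. It is my own illustration, not Tornhill’s tooling: the function names are invented, and scoring a file as lines multiplied by commits is an assumption made for simplicity. The commit counts are taken from the text output of `git log --name-only --pretty=format:`, which lists the files touched by each commit.

```python
from collections import Counter


def count_commits(git_log_output: str) -> Counter:
    """Count how often each path appears in the output of
    `git log --name-only --pretty=format:` (one path per line,
    blank lines between commits)."""
    return Counter(
        line.strip() for line in git_log_output.splitlines() if line.strip()
    )


def rank_hotspots(line_counts: dict, commit_counts: dict, top: int = 5) -> list:
    """Rank files by lines * commits, highest first.

    The product is an assumed score for illustration only; it is not
    taken from the talk.
    """
    scored = [
        (path, lines * commit_counts.get(path, 0))
        for path, lines in line_counts.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top]


# Hypothetical input: two commits, the first touching both files.
sample_log = "a.py\nb.py\n\na.py\n"
sample_lines = {"a.py": 100, "b.py": 500}
hotspots = rank_hotspots(sample_lines, count_commits(sample_log))
```

In a real project the line counts would come from the working tree and the log from the repository history; the files at the top of the list are the candidates to look at first.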

Why do we end up with bad or risky code in our software? Tornhill said that developers often mistake organisational problems for technical problems and try unsuccessfully to fix them with tools.

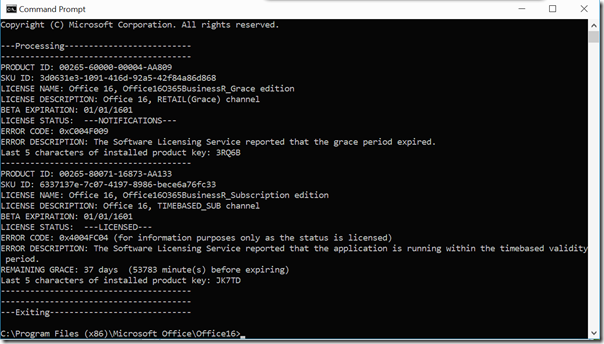

He also mentioned an example of high-risk code, the file gc.cpp which performs garbage collection in .NET Core, the next generation of Microsoft’s .NET Framework. This file is over 36,000 lines and should be refactored. There is a discussion on the subject here. It exactly bears out Tornhill’s point. A developer proposes to refactor the file, back in March 2015. Microsoft’s Karel Zikmund defends the status quo:

Why it is this way? … Partly historical reasons (it is this way since the start). Partly because devs working on it didn’t feel the urge to refactor it. Partly because splitting of gc.cpp is non-trivial and risky and because it does not bring too big value (ramp up in the code base can be gained also in the combination of reading BOTR and debugging the code). Why it is staying this way? … Cost/benefit/risk ratio is IMO not in favor of a change here.

Few additional thoughts:

Am I happy that there is only 1 large file? No, but it doesn’t hurt me much either.

Do I see the disadvantages of large file? Yes, but I don’t think they are huge. More like minor annoyances with easy workarounds.

And to turn it around: Do you see the risk of any changes here? Do you see the cost of extra careful code reviews to mitigate the risk?

Strictly technically, we truly believe this is a formatting change. If it was simple to split it up and if it would be low risk and if it would be very easy to review, it might be worth the ‘minor’ improvements mentioned above … but I don’t see that combo happening (not on a noticeable scale in gc.cpp).

On a personal note: I also trust CLR team that if all these three things were true, the refactoring would have happened long time ago.

Note that some of this code goes back beyond .NET Core to the .NET Framework, the “historical reasons” that Zikmund mentions. We can see that the factors preventing change are as much organisational as technical.

Finally I attended a session on Microsoft’s Cognitive Services. Note this was in the “Sponsored solution track”. Microsoft also has a stand here focused on its Cognitive Services.

There is not much Microsoft Platform content at QCon and it seems under-represented, though many of the sessions are applicable to developers on any platform. I am not sure of all the reasons for this; there used to be an Advanced .NET track at QCon. It does reflect some overall development trends as well as the history and evolution of QCon itself. That said, there is a session on SQL Server on Linux so the company is not completely invisible here.

As for the session, it was a reasonable overview of Microsoft’s expanding Cognitive Services APIs, which cover things like image recognition, speech recognition and more. I would have liked more depth and would have preferred to hear from a practitioner, in other words, “we built an application on Cognitive Services and this is what we learned.” I am not altogether clear why the company is pushing this so hard, except that it is a driver for developers to use Azure. I asked about how developers should deal with the problem of uncertainty*, in other words, that Cognitive Services does not deliver absolute results but rather draws conclusions with a confidence score. For example, it might be pretty sure that an image contains a human face, fairly sure that it is male, and somewhat confident that the age of the person is mid-forties. When the speaker demoed speech recognition it went pretty well except that “Start” was transcribed as “Stop.” This stuff is difficult.
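One common way to cope with confidence scores is to act only above a threshold and send borderline results to a person. The sketch below is my own illustration of that idea in Python. It does not call Cognitive Services; the function name, the thresholds and the scores are all hypothetical.

```python
def triage(confidence: float, accept: float = 0.9, review: float = 0.6) -> str:
    """Map a confidence score in [0, 1] to an action.

    The thresholds are assumed values; in practice they would be tuned
    to the cost of a wrong answer in the application.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= accept:
        return "accept"
    if confidence >= review:
        return "review"
    return "reject"


# Hypothetical scores echoing the face example in the text.
results = {"is_face": 0.97, "is_male": 0.80, "age_mid_forties": 0.55}
decisions = {label: triage(score) for label, score in results.items()}
```

With these made-up numbers the face detection is accepted, the gender goes to review and the age estimate is rejected. The point is only that the application, not the service, decides what each level of confidence is worth.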

Looking forward now to Day Two: Containers, Machine Learning, and more.

*More concisely expressed as “Systems are moving from the deterministic to the probabilistic” by Stephen Whitworth, who is now speaking on Machine Learning.