Microsoft has released .NET Core 2.0, a major update to its open source, cross-platform version of the .NET runtime and C# language.

New features include implementation of .NET Standard 2.0 (a way of targeting code to run under multiple .NET platforms), new platform support including Debian Stretch, macOS High Sierra and Suse Linux Enterprise Server 12 SP2. There is preview support for both Linux and Windows on ARM32.

.NET Core 2.0 now supports Visual Basic as well as C# and F#. The version of C# has been bumped to 7.1, including async Main method support, inferred tuple names and default expressions.

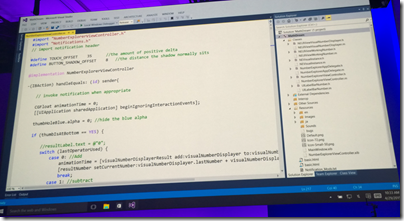

Microsoft has also released Visual Studio 2017 15.3, which is required if you want to use .NET Core 2.0. New Visual Studio features include Azure Stack support, C’# 7.1 support, .NET Framework 4.7 support, and other new features and fixes.

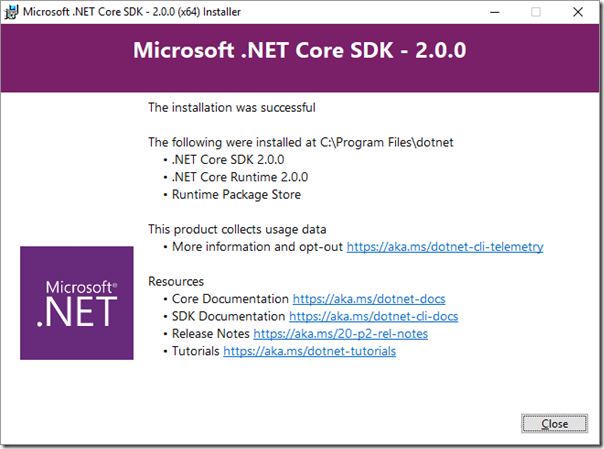

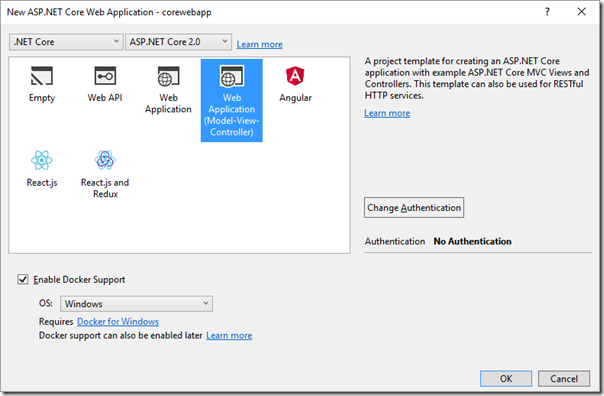

I updated Visual Studio and downloaded the new .NET Core 2.0 SDK and was soon up and running.

Note the statement about “This product collects usage data” of which more below.

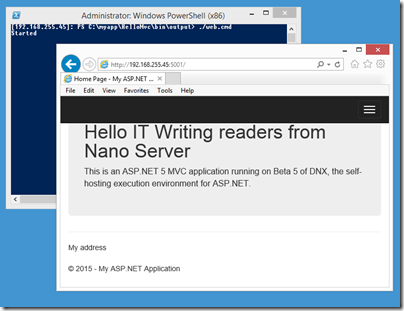

The sample ASP.NET MVC application worked first time.

How is .NET Core doing? The whole .NET picture is desperately confusing and I get the impression that most .NET developers, while they may have paid some attention to what is happening, have concluded that the safe path is to continue with the Window-only .NET Framework.

At the same time, .NET Core is strategically important to Microsoft. Cross-platform support means that C# has a life on the Mac and on Linux, which is vital to its health considering the popularity of the Mac amongst developers, and of Linux as a deployment platform for web applications. Visual Studio for Mac has also been updated and supports .NET Core 2.0 in the new version.

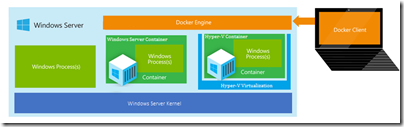

Another key piece is the container trend. .NET Core is ideal for container deployment, and the only version of .NET supported in Windows Nano Server. If you want to embrace microservices running in containers, while still developing with C#, .NET Core and Nano Server is the optimum solution.

Why not use .NET Core, especially since it is faster than ASP.NET? In these comparisons, .NET Core comes out as substantially faster than .NET Framework for various algorithms – 600 times faster in one case.

The main issue is compatibility. .NET Core is a subset of the .NET Framework, and being a relative newcomer, it lacks the same level of third-party support.

Another factor is that there is no support for desktop applications, though some solutions have been devised. Microsoft does have a cross-platform GUI story, in Xamarin Forms, which is now in preview for macOS alongside iOS, Android, Windows and Tizen. If Xamarin used .NET Core that would be a great solution, but it does not (though it does support .NET Standard 2.0).

One of the pieces that most concerns developers is data access. If you use .NET Core you are strongly guided towards Entity Framework Core, a fork of Microsoft’s ORM (Object-Relational Mapping) framework. Someone asked on this page, is EF Core usable? Here’s an answer from one user (11 days ago):

Answering 4 months later but people should know: Definitely not, it is still not usable unless you are doing something very trivial and/or have very small DB.

I don’t understand how it is possible for MS to ship it, act like it’s OK and sparsely here and there provide shallow information about its limitations like in this article without warning clearly and explicitly about the serious issues this “v1 product” has.

Someone may jump in and say no, it is fine; but there are undoubtedly missing pieces and I would suggest caution.

You can also access data using the Connection/Command/DataReader approach which avoids EF, and although this is more work, this is what I would be inclined to do personally since you get the best performance and flexibility. Here is an example for SQL Server.

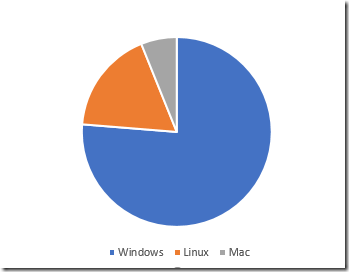

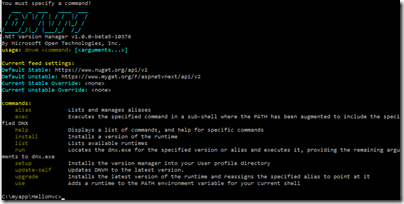

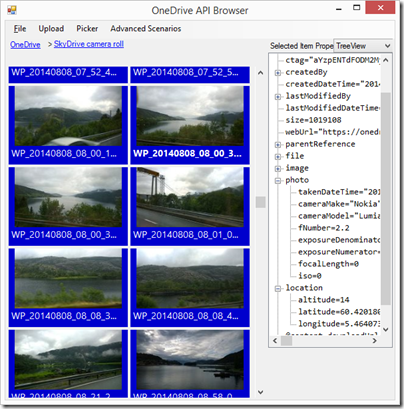

Who is using .NET Core? Controversially, Microsoft gathers telemetry from your use of the command-line tools though you can opt out by setting an environment variable. This means we have some data on .NET Core usage, though unfortunately it excludes Visual Studio usage. I downloaded the most recent dataset and imported it into a database. Here are the figures for OS family:

Total rows 5,036,981

Windows 3,841,922 (76.27%)

Linux 887,750 (17.62%)

Mac 307,309 (6.1%)

Given that this excludes Visual Studio users, who are also on Windows, we can conclude that the great majority of .NET Core developers use Windows, and only a tiny minority Mac (I do not know if Visual Studio for Mac usage is included). This is evidence that .NET Core has so far failed in its goal of persuading Mac-using developers to adopt .NET. It does show interest in deploying .NET applications to Linux, which is an obvious win in licensing costs as well as performance.

I would be interested in comments from developers on whether or not they use .NET Core and why.