The Sneaks session at Adobe MAX is always fun, and it also gives some insight into what may be coming from the company. Note, though, that these are research projects, and there is no guarantee that any of them will make it into products.

This time we also got commentary from Rainn Wilson, an actor in the US version of The Office. His best moment came during the MAX Awards just before the sneaks, when he put a little ad lib into one of the award intros:

Customers demand … that the little Adobe Acrobat update pop-up window just go away for a while, go the way of the Microsoft paper clip Clippy, the customer is demanding right now. I’m tired of clicking No No No No No.

"I only read a PDF occasionally," he said.

We all know the reasons for that updater (and the one for Flash), but he is right: it is a frequent annoyance. What is the fix? It would help somewhat if Adobe made a deal with Microsoft and Apple to deliver Flash and Adobe Reader updates through system mechanisms such as Windows Update. Beyond that, though, it takes a different model of computing, one in which the operating system is better protected. That is another reason why users like Apple iOS, and why Microsoft is building a locked-down Windows client for ARM.

Now, on to the sneaks.

1. Local Layer Ordering

We are used to the idea of layer ordering, but what about a tool that lets you interleave layers, using a pointer to put this part on top and that part underneath? You can do this with pieces of paper, but less easily with graphics software, at least until Local Layer Ordering makes it into an Adobe product.

2. Project rub-a-dub

The use case: you have a video with some speech, but want to re-record the speech to fix some problem. It is hard to re-record it so precisely that the lip sync is right. Project rub-a-dub automatically modifies the newly recorded speech so that it aligns correctly with the video.
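Adobe did not explain how rub-a-dub works. One classic technique for this kind of alignment is dynamic time warping (DTW), which finds the lowest-cost way to match each frame of the new recording to a frame of the original. This is a minimal sketch, not Adobe's method: it assumes each recording has already been reduced to a hypothetical list of per-frame feature values (loudness, say), whereas real speech tools compare much richer features.

```python
def dtw(original, redub):
    """Dynamic time warping between two per-frame feature sequences.

    Returns (total_cost, path), where path is a list of
    (original_index, redub_index) pairs describing the alignment.
    """
    n, m = len(original), len(redub)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of original[:i] with redub[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            distance = abs(original[i - 1] - redub[j - 1])
            # Either advance both sequences, or hold one while the other moves on.
            cost[i][j] = distance + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Walk back from the end, always stepping to the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        candidates = {
            (i - 1, j - 1): cost[i - 1][j - 1],  # listed first so ties favour the diagonal
            (i - 1, j): cost[i - 1][j],
            (i, j - 1): cost[i][j - 1],
        }
        i, j = min(candidates, key=candidates.get)
    path.reverse()
    return cost[n][m], path


# Hypothetical loudness envelopes: the re-recording drags out the middle syllable.
total, alignment = dtw([1, 2, 3], [1, 2, 2, 3])
print(total, alignment)  # prints 0.0 [(0, 0), (1, 1), (1, 2), (2, 3)]
```

The path shows that original frame 1 is matched by two frames of the re-recording, so an editor could compress that stretch of the new audio to bring it back in sync with the lips.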

3. Liquid Layout

This one is for the InDesign publishing software: it is about intelligently modifying a layout so that the same content can be delivered on different screen sizes and orientations. I was reminded of the way Times Reader works, creating different numbers of columns on the fly, but this is built into InDesign.

4. Synchronizing crowd-sourced multi-camera video

This one struck me as a kind of video version of Photosynth, where multiple photos of the same scene are combined to make a composite. This is a bit different, in that it does not attempt to make a single video image; instead it plays multiple videos in sync with a merged soundtrack. We saw a concert example, but it could be fascinating if applied to a moment of revolution, say, when many individuals capture the event on their mobiles.
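Adobe did not say how the clips are lined up, but a common approach is to use the audio: slide one soundtrack against another and pick the time offset where they correlate best. Here is a minimal sketch, assuming the tracks are already sampled at the same rate and given as plain lists of numbers; `best_offset` and the test signal are hypothetical.

```python
def best_offset(reference, clip, max_lag):
    """Return the lag (in samples) that best aligns clip with reference.

    A result of L means clip[n] lines up with reference[n + L], i.e. the clip
    started recording L samples after the reference (negative L: before it).
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for n, sample in enumerate(clip):
            k = n + lag
            if 0 <= k < len(reference):
                total += reference[k] * sample
                count += 1
        # Average over the overlap so lags with more overlapping samples
        # are not automatically favoured.
        if count and total / count > best_score:
            best_lag, best_score = lag, total / count
    return best_lag


# Hypothetical +/-1 "audio" from one phone, and a second phone that started 3 samples later.
reference = [1, -1, 1, 1, -1, -1, -1, 1, -1, 1, 1, 1, -1, 1, -1, -1]
clip = reference[3:13]
print(best_offset(reference, clip, max_lag=5))  # prints 3
```

This brute-force search costs one pass over the clip per candidate lag; for real concert recordings, FFT-based correlation over much longer tracks would be the more practical choice. Once every clip has an offset, the videos can be played together and their soundtracks merged.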

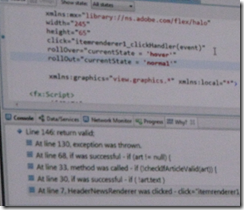

5. Smart debugging – how did my code get here?

This is a debugging tool based on a recorded trace, letting you step backwards as well as forwards through code. We have seen similar tools before, such as IntelliTrace in Visual Studio 2010. What sets this one apart, though, is an English-like analysis of "how did my code get here", which you can see if you squint at my blurry snap above.

6. Near-field communications for AIR

This demo showed near-field communication (NFC) support in Adobe AIR for mobile. NFC is best known for applications like payments, where you wave your mobile at a sensor, but it has plenty of potential for other scenarios, such as looking up product details without having to scan a barcode.

7. Pixel Nuggets: find commonality in your digital photos

The idea of this one is to identify “like” images by searching and analysing a collection. For example, you could perhaps point it at a folder with thousands of images and find all the ones which show flowers.

8. Monocle: telemetry data for Flex applications

In this demo, Deepa Subramaniam showed what I take to be a kind of profiler, with a visualization of where your code is spending its time.

9. Video Mesh – amazing video editing

My snap does not capture this well, but it was amazing to watch. As I understand it, this is software that analyses a video to build an understanding of the objects in it and their perspective. In the example, we saw how a person walking across the foreground of the scene could be moved further back, or behind a pillar, with the correct size and perspective.

10. GPU Parallelism in Flash

This demo used a native extension to perform intensive calculations using GPU parallelism. We saw how an explosion of particles was rendered much more quickly, which of course I cannot capture in a static image, so I am showing Adam Welc's lighthearted intro slide instead. I am a fan of general-purpose computing on the GPU and would love to see this in Flash.

11. Re-focus an image

This is a feature that I would guess is likely to show up in Photoshop, or perhaps in a future tablet app: take a blurred image and make it sharp. The demo we saw used an image suffering from camera shake. The analysis worked out the path the camera moved along, which you can see as the small wiggly line in the right panel above, and used it to move parts of the image back so that they are properly superimposed. I would guess this only works for images blurred by camera shake; it will not fix incorrect lens settings. I have also seen a similar feature built into the firmware of a camera, though I expect Photoshop can do a much better job, if only because of the greater processing power available.

This was a big hit with the MAX crowd though. Perhaps most of us were thinking of photos we have taken that could do with this kind of processing.
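Adobe did not explain the algorithm, but camera-shake removal is usually described as blind deconvolution: first estimate the blur kernel (the wiggly path), then undo its effect. This sketch covers only the second step, in one dimension and without noise, assuming the kernel is already known; `blur` and `deblur` are hypothetical helpers. With real, noisy photos, direct inversion like this amplifies noise, which is why regularised methods such as Wiener filtering or Richardson-Lucy deconvolution are used in practice.

```python
def blur(signal, kernel):
    """Smear a 1D signal along a known shake path (causal convolution).

    Output sample n mixes signal[n], signal[n - 1], ... weighted by kernel[0], kernel[1], ...
    """
    return [
        sum(kernel[j] * signal[n - j] for j in range(len(kernel)) if n - j >= 0)
        for n in range(len(signal))
    ]


def deblur(blurred, kernel):
    """Undo blur() exactly, recovering one sample at a time from earlier ones."""
    if kernel[0] == 0:
        raise ValueError("kernel[0] must be non-zero for this inversion")
    restored = []
    for n, value in enumerate(blurred):
        # Subtract the contribution of samples already recovered, then rescale.
        leaked = sum(kernel[j] * restored[n - j] for j in range(1, len(kernel)) if n - j >= 0)
        restored.append((value - leaked) / kernel[0])
    return restored


# Hypothetical 1D "row of pixels" and shake kernel (weights sum to 1).
row = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 2.0, 2.0, 0.0]
shake = [0.5, 0.3, 0.2]
smeared = blur(row, shake)
recovered = deblur(smeared, shake)
print(smeared)
print(recovered)
```

The exact inversion works here because this particular kernel is well behaved; for other kernels the recursion can let small errors grow from sample to sample, which is another reason real tools prefer regularised methods.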