Once upon a time all Windows development was desktop development. Then there was web development, but that was a server thing. Then in October 2012 Windows 8 arrived, and it was all about full-screen, touch control and Store-delivered applications that were sandboxed and safe to run. Underneath this there was a new platform-within-a-platform called the Windows Runtime or WinRT (or sometimes Metro). Developing for Windows became a choice: new WinRT platform, or old-style desktop development, the latter remaining necessary if your application needed more features than were available in WinRT, or to run on Windows 7.

Windows 8 failed and was replaced by Windows 10 (July 2015), in large part a return to the desktop. The Start menu returned, and each application again had a window. WinRT lived on though, now rebranded as UWP (Universal Windows Platform). The big selling point was that your UWP app would run on phones, Xbox and HoloLens as well as PCs. It was still locked down, though less so, and still Store-delivered.

Then Microsoft decided to abandon Windows Phone, a decision obvious to Microsoft-watchers in June 2015 when ex-Nokia CEO Stephen Elop left Microsoft, just before the launch of Windows 10, even though Windows Phone was not formally killed off until much later. UWP now had a rather small u (that is, not very universal).

In addition, Microsoft decided that locking down UWP was not the way forward, and opened up more and more Windows APIs to the platform. The distinction between UWP and desktop applications was further blurred by Project Centennial, now known as Desktop Bridge, which lets you wrap desktop applications for Store delivery.

Perhaps the whole WinRT/UWP thing was not such a good idea. A side-effect though of all the focus on UWP was that the old development frameworks, such as Windows Forms (WinForms) and Windows Presentation Foundation (WPF), received little attention – even though they were more widely used. Some Windows 10 APIs were only available in UWP, while other features only worked in WinForms or WPF, giving developers a difficult decision.

The Build 2018 event, which was on last week in Seattle, was the moment Microsoft announced that it would endeavour to undo the damage by bringing UWP and desktop development together. “We’ve taken all the UI stacks and merged them together” said Mike Harsh and Scott Hunter in a session on “Modernizing desktop apps” (BRK3501 if you want to look it up).

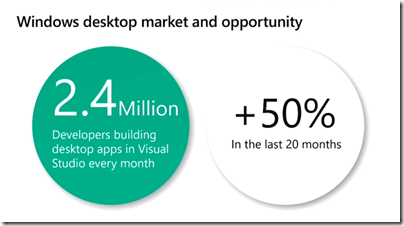

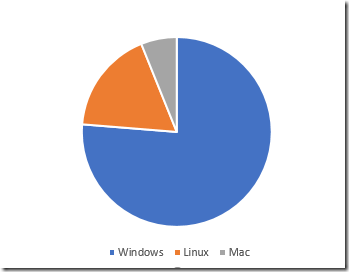

According to Harsh and Hunter, Windows desktop application development is increasing, despite the decline of the PC (note that this is hardly a neutral source).

So what was actually announced? Here is a quick summary. Note that the announced features are for the most part applicable to future versions of Windows 10. As ever, Build is for the initial announcement. So features are subject to change and will not work yet, other than possibly in pre-release form.

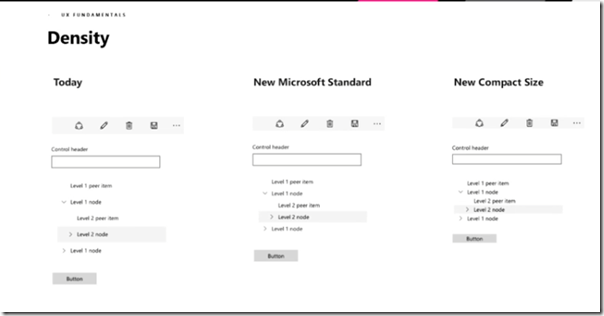

Greater information density in UWP applications. WinRT/UWP was originally designed for touch control, so with lots of white space. Most Windows users though have mouse and keyboard. The spacious UWP layout looked wrong on big desktop displays, and it made porting applications harder. The standard layout is getting less dense, and a new Compact Size, an application setting, will pack more information into the same space.

More controls for UWP. New DataGrid, Forms with data validation, Menu bar, and coming in future, Status bar, tab controls and Ribbon. The idea is to make UWP more suitable for line-of-business applications, which accounts for a large part of Windows application development overall.

New Windowing APIs for UWP. WinRT/UWP was designed for full-screen applications, not the popup-dialogs or floating windows possible in desktop applications. Those capabilities are coming though. We will get tool windows, light-dismiss windows (eg type and press Enter), and multiple windows on one thread so that they work like a single application when minimized or cycled through with alt-tab. Coming in future are topmost windows, modal windows, custom title bars, and maybe even MDI (Multiple Document Interface), though this last seems surprising since it is discouraged even in the desktop frameworks.

What many developers will care about more though is new features coming to desktop applications. There are two big announcements.

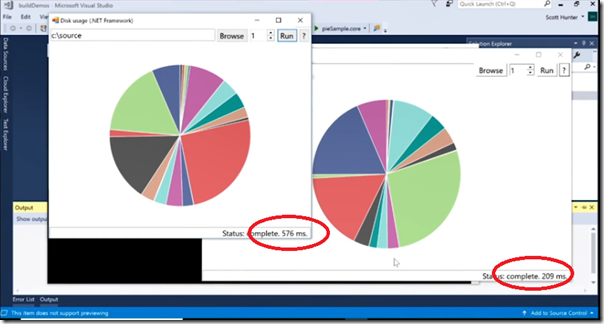

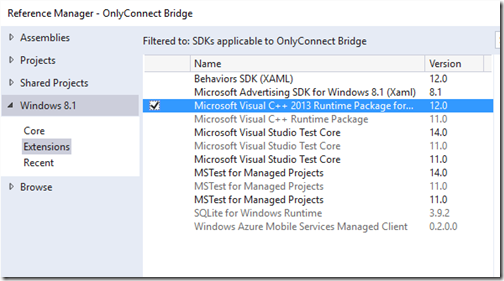

.NET Core 3.0 will support WinForms and WPF. This is big news, partly because it performs better than the Windows-only .NET Framework, but more important, because it allows side-by-side deployment of the .NET runtime. Even better, a linker will let you deliver a .NET Core desktop application as a single executable with no dependencies. What performance gain? An example shown at Build was an application which uses File APIs running nearly three times faster on .NET Core 3.0.

XAML Islands enabling UWP features in WinForms and WPF. The idea is that you can pop a UWP host control in your WinForms or WPF application, and show UWP content there. Microsoft is also preparing wrapper controls that you can use directly. Mentioned were WebView, MediaPlayer, InkCanvas, InkToolBar, Map and SwapChainPanel (for DirectX content). There will be a few compromises. The XAML host window will be rectangular (based on an HWND) which means non-rectangular and transparent content will not work correctly. There is also the Windows 7 problem: no UWP on Windows 7, so what happens to your XAML Islands? They will not run, though Microsoft is working on a mechanism that lets your application substitute compatible Windows 7 content rather than crashing.

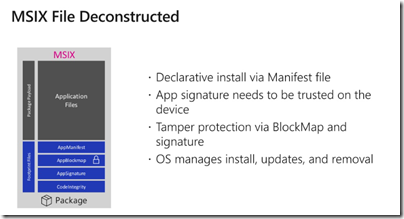

MSIX deployment. MSIX is Microsoft’s latest deployment technology. It will work with both UWP and Desktop applications, will support Windows 7 and 10, will provide for auto-updates, and will have tooling built into Visual Studio, as well as a packager for both your own and third-party applications. Applications installed with MSIX are managed and updated by Windows, have tamper protection, and are installed per-user. It seems to build upon the Desktop Bridge concept, the aim being to make Windows more manageable in the Enterprise as well as safer for all users, if Microsoft can get widespread adoption. The packaging format will also work on Android, Mac and Linux and you can check out the SDK here.

Will WPF or WinForms be updated?

The above does not quite answer the question, will WPF or Windows Forms be significantly updated, other than with the ability to use UWP content? I could not get a clear answer on this question at Build, though I was told that adding support for .NET Core 3.0 required significant changes to these frameworks so it is no longer true to say they are frozen. With regard to WPF Microsoft Corporate VP Julia Liuson told me:

“We will be looking at more controls, more capabilities. It is widely recognised that WPF is the best framework for desktop development on Windows. The fact that we’re moving on top of .NET Core 3.0 gives us a path forward.”

That said, I also heard that the team would rather write code once and use it across UWP, WPF and WinForms via XAML Islands, than write new controls for each framework. That makes sense, the difficulty being Windows 7. Microsoft would rather promote migration to Windows 10, than write new UI components that work across both Windows 7 and Windows 10.