There is a telling moment in the day two keynote at Microsoft’s Worldwide Partner Conference. “Now we’ve added Office 365”, says Corporate VP Jon Roskill. “Do you guys feel the momentum?” There is a muted cheer, not the big whoop Roskill is looking for. “Now let’s have some momentum, whoo!” he repeats. Another barely audible cheer.

Why are partners not whooping and cheering? Take a look at the Microsoft-commissioned Forrester report [PDF] on the total economic impact of Office 365. This report claims a remarkable payback period of only two months for a midsize organization moving to Office 365.

Looking at the figures in more detail, Forrester claims $54,000 saved over three years in eliminated hardware, $10,000 over the period in eliminated third-party software, $25,000 saved in web conferencing (Lync Online is bundled with Office 365), and $18,000 in “internal labor and professional services” saved on planning and implementation. There is an even bigger saving in support, though here I find it hard to puzzle out exactly what Forrester is claiming. It talks about “savings of $206,350 over three years” from simplified support and outsourced administration of infrastructure, but also refers to $146,250 in admin and support costs for Office 365; I am not sure whether the $206,350 is a net figure. Forrester also throws in $260,625 saved on reduced travel thanks to online collaboration, which strikes me as highly speculative.
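To see how much the total depends on the doubtful items, here is a minimal sketch that simply adds up the three-year figures quoted above. The variable and function names are my own, and the two readings of the support figure (gross versus already net of the $146,250) are assumptions for illustration, not anything Forrester states.

```python
# Three-year figures quoted above from the Forrester report (US dollars).
# The grouping and names here are illustrative, not Forrester's own.
savings = {
    "eliminated hardware": 54_000,
    "eliminated third-party software": 10_000,
    "web conferencing": 25_000,
    "planning and implementation": 18_000,
    "support and administration": 206_350,
    "reduced travel": 260_625,
}

# Office 365 admin and support costs mentioned in the report.
# Assumption: it is unclear whether the support saving is already net of this.
office365_support_costs = 146_250


def total_savings(items, exclude=()):
    """Sum the savings, optionally leaving out named items."""
    return sum(value for name, value in items.items() if name not in exclude)


# Reading 1: the support saving is already a net figure.
total_if_net = total_savings(savings)

# Reading 2: the support saving is gross, so the Office 365 costs come off.
total_if_gross = total_if_net - office365_support_costs

# The same total with the speculative travel saving left out.
total_without_travel = total_savings(savings, exclude=("reduced travel",))

# Share of the headline total that comes from reduced travel, in percent.
travel_share = round(100 * savings["reduced travel"] / total_if_net, 1)

print(f"Total, support figure read as net:   ${total_if_net:,}")
print(f"Total, support figure read as gross: ${total_if_gross:,}")
print(f"Total without travel savings:        ${total_without_travel:,}")
print(f"Travel as share of headline total:   {travel_share}%")
```

On these numbers the travel item alone is roughly 45% of the combined savings, so the result is very sensitive to whether you believe it.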

I suggest therefore that you do not take Forrester’s figures too seriously; but it is still worth noting that many of the savings come from revenue that would otherwise have gone to partners. How much partner income is lost will depend on the extent to which an organization outsources its IT planning, support and administration, and on the margins partners achieve on things like third-party software; but it is considerable.

Of course there are also new business opportunities for partners. Presuming the savings from Office 365 and Microsoft’s other cloud offerings are real, a cloud-oriented partner has a strong sales pitch both to existing and new customers. Partners get an ongoing commission from subscriptions.

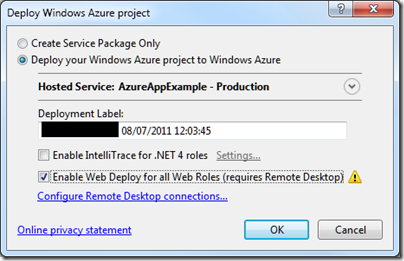

There is also an opportunity for new applications which link to cloud services. Yesterday Microsoft announced that the Windows Azure Marketplace, which used to offer data services and application building blocks, now also offers finished applications in US markets.

It is also true that Microsoft’s cloud offering is more partner-friendly than others, because it is a hybrid solution. Forrester’s report mentioned above assumes use of Active Directory Federation Services for single sign-on between on-premise systems and Office 365, a key feature which has been under-reported in the media coverage I have seen for Office 365. This feature, along with the fact that Microsoft’s server products like Exchange, SharePoint and Dynamics CRM can be deployed either on-premise or as hosted services, means that there is flexibility over what is hosted and what is on-premise.

Nevertheless, it is hard to construct a reality in which the savings customers get from cloud services are real, without the further implication that total partner revenue will diminish, even though certain individual partners who take advantage of the new opportunities may end up winners.

This is true even if Microsoft succeeds in retaining all of its existing Microsoft-platform customers, rather than losing them to Google or other cloud providers. The consequences of a migration to Google, which is inherently not a hybrid platform, seem to me more severe.

Is there any way to put a positive spin on this, from a partner’s perspective? A couple of thoughts on this.

First, even if certain kinds of IT business are under threat from cloud migration, it is also true that the transforming impact of IT and the internet on businesses is far from complete. Much of what businesses currently do with IT can be greatly improved; there is still a thirst for new and improved business applications; and new technology, including not only the cloud but also massively parallel computing and of course mobile, presents many new opportunities.

Second, it seems to me that partners should not be asking themselves how to maintain their business, but instead planning for change. The demand for skills in installing and nursing servers, deploying applications, and maintaining and supporting clients will inevitably diminish. That is a good thing, because these activities are IT plumbing, and reducing them frees resources for other activities which have more business potential.

Behind the whooping and cheering, Microsoft’s message to partners is a tough one. Change, or die.