Microsoft has reported its latest financial results, for the quarter ending December 31st 2015.

Here are the latest figures (see end of post for what is in the segments):

Quarter ending December 31st 2015 vs quarter ending December 31st 2014, $millions

| Segment | Revenue | Revenue change | Operating income | Operating income change |
|---|---|---|---|---|
| Productivity and Business Processes | 6690 | -132 | 6460 | -528 |
| Intelligent Cloud | 6343 | +302 | 4977 | +272 |
| More Personal Computing | 12660 | -622 | 3542 | +528 |
| Corporate and Other | -1897 | -2222 | -1897 | -1980 |

A few points to note.

Revenue is down: Overall revenue was $23.8 billion, down $2.67 billion on the same quarter in 2014. Part of the reason is that cloud revenue increased by less than personal computing revenue declined ($302 million against $622 million), though the biggest single swing is in Corporate and Other, down $2,222 million. The segments are rather opaque, so we have to look at Microsoft’s comments on its results to get a better picture of how the company’s business is changing.
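As a quick check on the arithmetic, the short Python sketch below totals the segment figures from the table and works out percentage changes. It assumes the table's "Revenue change" column is the absolute difference in $ millions from the quarter ending December 31st 2014, so the prior-year figure is taken as current minus change; the names `SEGMENTS` and `pct_change` are purely illustrative.

```python
# Quarter ending December 31st 2015, $ millions, copied from the table above.
# Assumption: the "Revenue change" column is the absolute difference from the
# quarter ending December 31st 2014.
SEGMENTS = {
    "Productivity and Business Processes": (6690, -132),
    "Intelligent Cloud": (6343, 302),
    "More Personal Computing": (12660, -622),
    "Corporate and Other": (-1897, -2222),
}


def pct_change(current: int, change: int) -> float:
    """Percentage change against the implied prior-year figure (current - change)."""
    prior = current - change
    return 100 * change / prior


total_revenue = sum(rev for rev, _ in SEGMENTS.values())
total_change = sum(chg for _, chg in SEGMENTS.values())

print(f"Total revenue: ${total_revenue / 1000:.1f} billion")
print(f"Down ${-total_change / 1000:.2f} billion "
      f"({-pct_change(total_revenue, total_change):.1f}%) year on year")

# Skip Corporate and Other: its implied prior-year base is tiny (325) and the
# figure flips sign, so a percentage change there is not meaningful.
for name, (rev, chg) in SEGMENTS.items():
    if name == "Corporate and Other":
        continue
    print(f"{name}: {pct_change(rev, chg):+.1f}%")
```

Run as-is, it reports total revenue of $23.8 billion, down $2.67 billion (10.1%), matching the figures above. The More Personal Computing change works out at about -4.7%, which rounds to the 5% decline mentioned under Windows.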

Windows: Revenue in the More Personal Computing segment was down 5%, “due primarily to lower phone and Windows revenue and negative impact from foreign currency”.

Windows 10: Not much was said about this specifically, except that search revenue grew 21% overall and “nearly 30% of search revenue in the month of December was driven by Windows 10 devices.” The enforced integration of Cortana with Bing search is beginning to pay off.

Surface: Revenue up 29%, but not enough to offset a 49% decline in phone revenue.

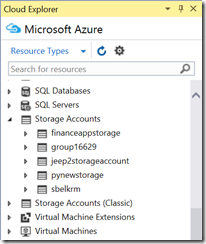

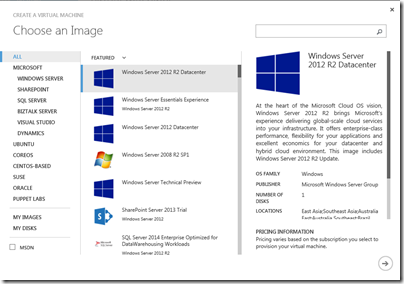

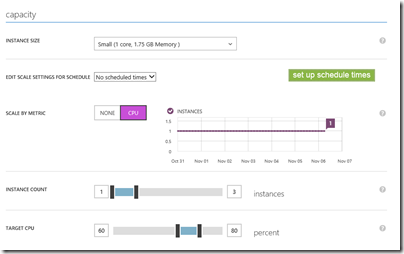

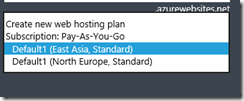

Azure: Azure revenue grew 140%, compute usage doubled year on year, and Azure SQL Database usage increased fivefold year on year.

Office 365: 59% growth in commercial seats.

Server products: Revenue is up 5% after allowing for currency movements.

Xbox: Xbox Live revenue is growing (up 30% year on year), but hardware revenue declined by an undisclosed amount. Microsoft attributes this to “lower volumes of Xbox 360”, which is a lame explanation given that the shiny Xbox One is also on sale.

Further observations

This is a continuing story of cloud growth and consumer decline, with Microsoft’s traditional business market somewhere in between. The slow, or not so slow, death of Windows Phone is sad to see; Microsoft’s dismal handling of its Nokia acquisition is among its biggest missteps and has been hugely costly.

CEO Satya Nadella came from the server side of the business and seems to be shaping the company in that direction, though it is debatable whether he had any choice.

Azure and Office 365 are its big success stories. Nadella said in the earnings call that “the enterprise cloud opportunity is massive, larger than any market we’ve ever participated in.”

A reminder of Microsoft’s segments:

Productivity and Business Processes: Office, both commercial and consumer, including retail sales, volume licenses, Office 365, Exchange, SharePoint, Skype for Business, Skype consumer, OneDrive and Outlook.com. Also Microsoft Dynamics, including Dynamics CRM and Dynamics ERP, both online and on-premises.

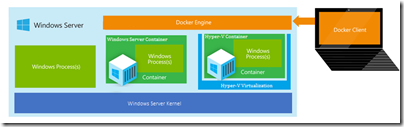

Intelligent Cloud: Server products not mentioned above, including Windows Server, SQL Server, Visual Studio and System Center, as well as Microsoft Azure.

More Personal Computing: What a daft name; more than what? Still, this segment includes Windows in all its non-server forms, Windows Phone (both hardware and licenses), Surface hardware, gaming including Xbox and Xbox Live, and search advertising.